Connecting via API with Apache Kafka lets organizations automate real-time data streaming on one of the most widely used distributed event streaming platforms. The connection helps teams run event-driven architectures while keeping Kafka's high throughput and reliability. The API supports both message production and consumption and more complex stream processing.

How do I connect via API?

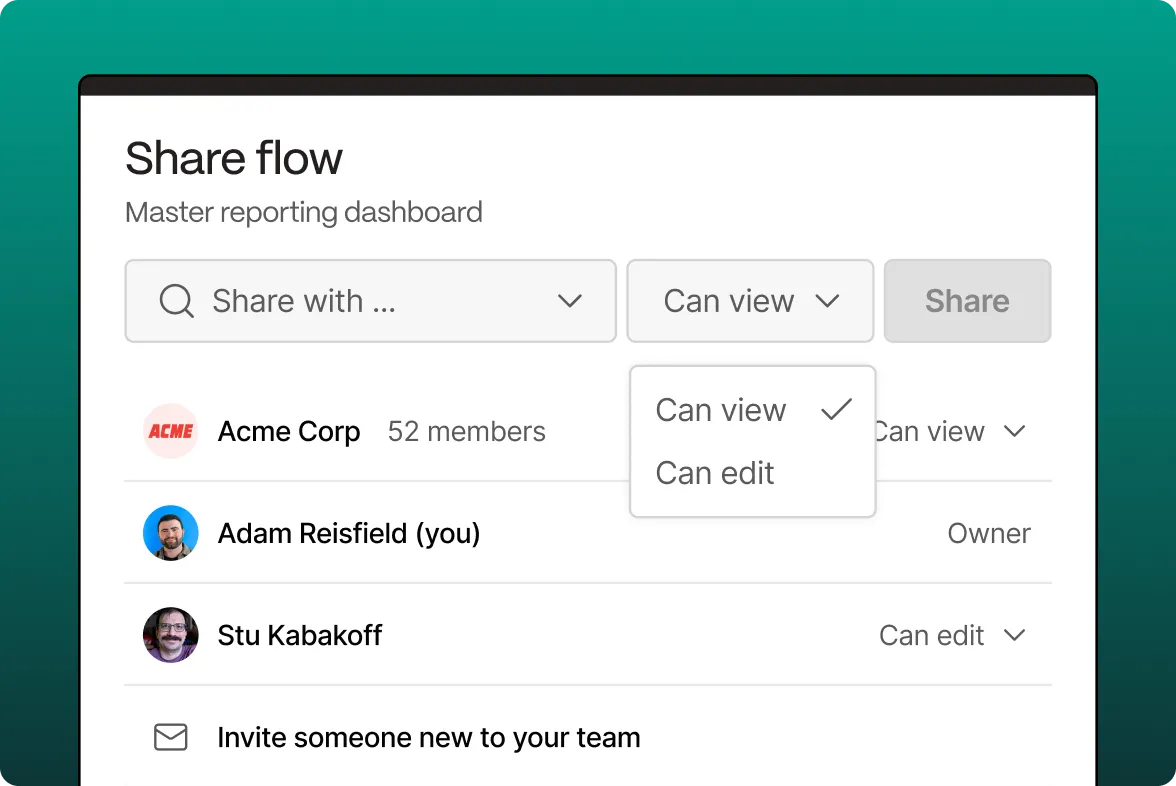

- Connect to the Kafka API through Parabola by navigating to the API page and selecting Apache Kafka

- Authenticate using your Kafka credentials and configure the required security settings (for example, SSL/TLS encryption and SASL authentication)

- Select the data endpoints you want to access (topics, partitions, consumer groups)

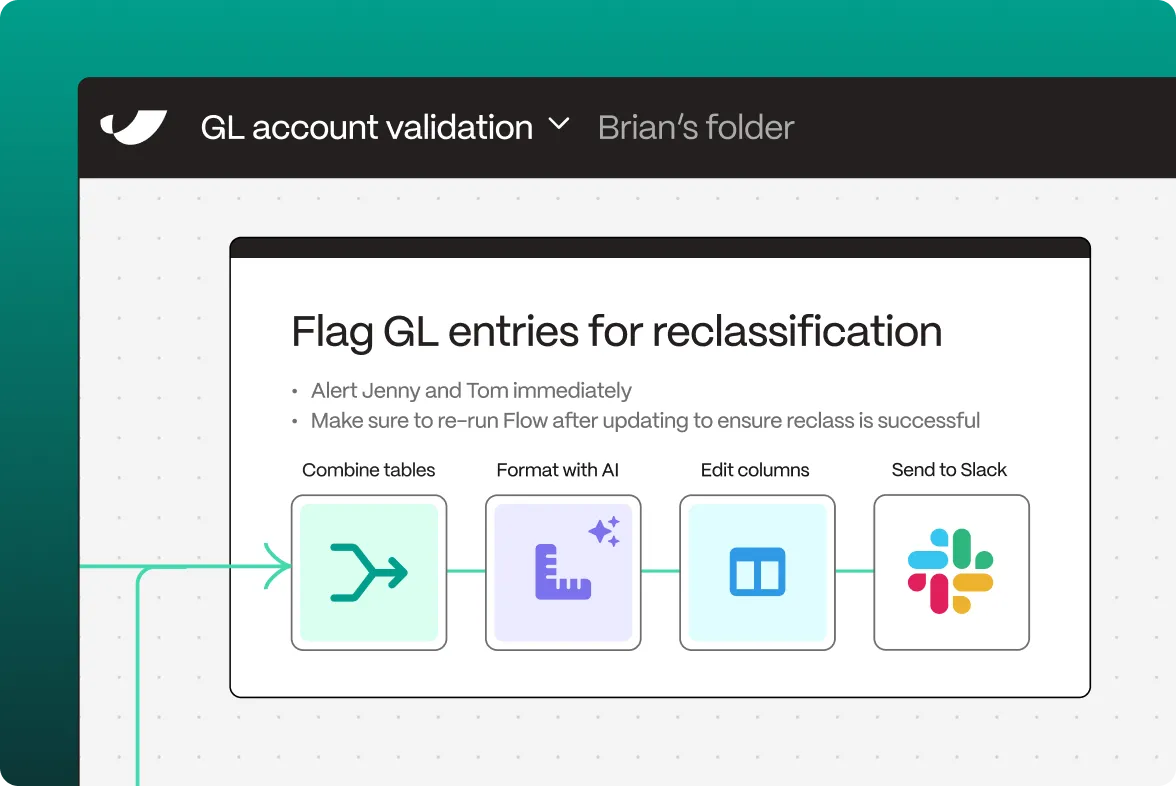

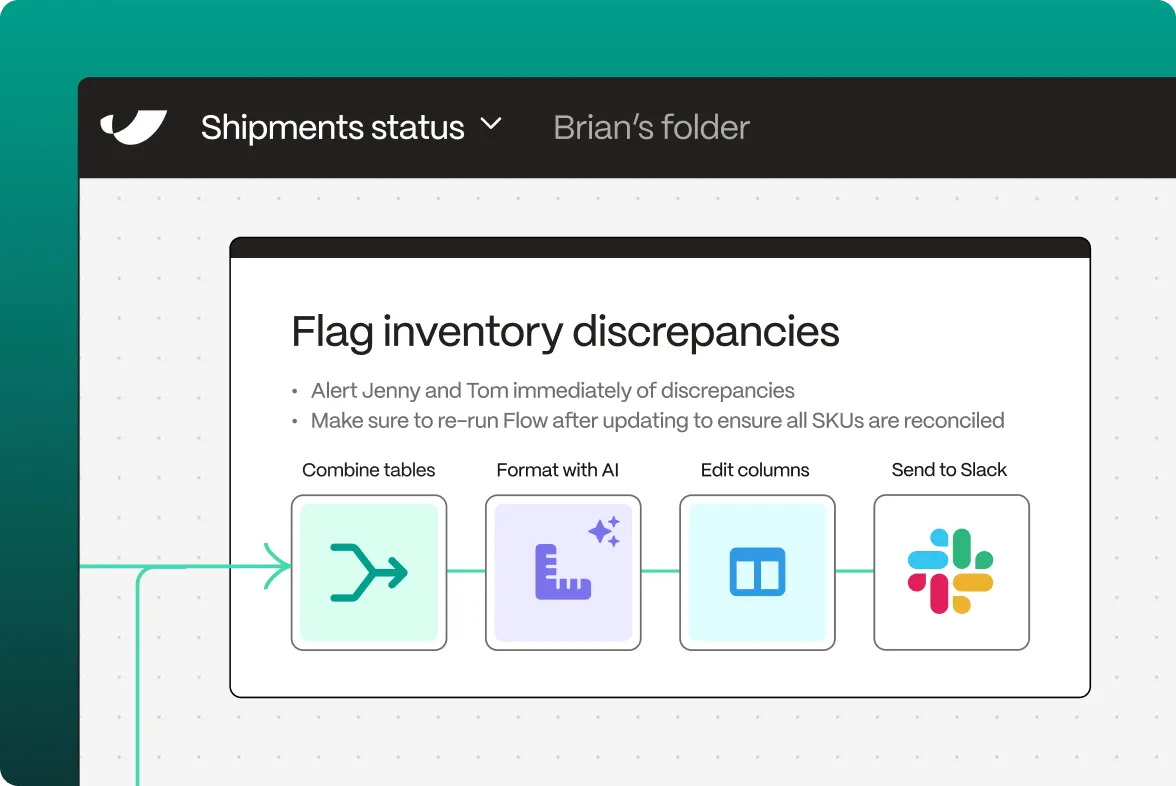

- Configure your flow in Parabola by adding transformation steps to process your streaming data

- Set up automated triggers for stream processing and event handling
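Behind the authentication step, any Kafka client needs a small set of connection properties. The sketch below assembles them as a Python dict, using property names from librdkafka-based clients such as confluent-kafka (`bootstrap.servers`, `security.protocol`, `sasl.mechanism`, `sasl.username`, `sasl.password`). The helper `build_client_config`, the broker address, and the credentials are hypothetical placeholders. The sketch does not show how Parabola stores or applies these settings.

```python
# Hypothetical helper that assembles client connection settings.
# Property names follow librdkafka / confluent-kafka conventions;
# the broker address and credentials below are placeholders.
VALID_PROTOCOLS = {"PLAINTEXT", "SSL", "SASL_PLAINTEXT", "SASL_SSL"}
# Username/password mechanisms only; OAUTHBEARER and GSSAPI need other
# settings and are out of scope for this sketch.
PASSWORD_MECHANISMS = {"PLAIN", "SCRAM-SHA-256", "SCRAM-SHA-512"}


def build_client_config(bootstrap_servers, security_protocol="SASL_SSL",
                        sasl_mechanism="SCRAM-SHA-512", username=None, password=None):
    """Return a config dict for a Kafka client, validating security settings."""
    if security_protocol not in VALID_PROTOCOLS:
        raise ValueError(f"unsupported security.protocol: {security_protocol}")
    config = {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": security_protocol,
    }
    # SASL credentials only apply to the SASL_* protocols.
    if security_protocol.startswith("SASL_"):
        if sasl_mechanism not in PASSWORD_MECHANISMS:
            raise ValueError(f"unsupported sasl.mechanism: {sasl_mechanism}")
        if not (username and password):
            raise ValueError("SASL authentication needs a username and password")
        config.update({
            "sasl.mechanism": sasl_mechanism,
            "sasl.username": username,
            "sasl.password": password,
        })
    return config


config = build_client_config("broker1.example.com:9093",
                             username="example-user", password="example-secret")
print(config["security.protocol"], config["sasl.mechanism"])  # SASL_SSL SCRAM-SHA-512
```

Defaulting to `SASL_SSL` is deliberate: with `SASL_PLAINTEXT` and the `PLAIN` mechanism, credentials travel over the network unencrypted.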

What is Apache Kafka?

Apache Kafka is a distributed event streaming platform capable of handling trillions of events per day. Originally developed at LinkedIn and later open-sourced through the Apache Software Foundation, Kafka has become the backbone of many real-time data pipelines and streaming applications, serving organizations across industries that need high-throughput, fault-tolerant data streaming.

What does Apache Kafka do?

Apache Kafka provides a unified platform for real-time data streaming and event processing, enabling organizations to build event-driven architectures at scale. Through its API, businesses can automate publishing and consuming data streams while Kafka preserves message order within each partition and tolerates broker failures. The platform is built for high-volume data streams and supports everything from real-time analytics to complex event processing across distributed systems.
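Kafka's ordering guarantee applies within a single partition, and with the default partitioner, records that share a key land in the same partition. The in-memory sketch below illustrates that behavior. The `Topic` class is hypothetical, and Kafka's Java default partitioner hashes keys with murmur2; this sketch swaps in `zlib.crc32` for simplicity, so its partition numbers will not match a real cluster.

```python
import zlib


class Topic:
    """In-memory stand-in for a Kafka topic: a list of append-only partition logs."""

    def __init__(self, name, num_partitions):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key):
        # Real Kafka clients use murmur2 for keyed records; CRC32 is a stand-in here.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def produce(self, key, value):
        """Append a record and return (partition, offset), like a produce acknowledgement."""
        p = self.partition_for(key)
        log = self.partitions[p]
        log.append((key, value))
        return p, len(log) - 1


orders = Topic("orders", num_partitions=3)
for event in ["created", "paid", "shipped"]:
    orders.produce("order-42", event)
orders.produce("order-7", "created")

# Every event for order-42 sits in one partition, in the order it was produced.
p = orders.partition_for("order-42")
print([v for k, v in orders.partitions[p] if k == "order-42"])  # ['created', 'paid', 'shipped']
```

Choosing a business identifier (here an order ID) as the key is what makes per-entity ordering possible. Records with different keys may be spread across partitions and carry no ordering relative to each other.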

The API enables programmatic access to Kafka's core features: topic management (the Admin API), message production and consumption (the Producer and Consumer APIs), and stream processing (the Streams API). Organizations can use these to build automated streaming pipelines, manage real-time data flows, and coordinate complex event-driven workflows without giving up performance or reliability.
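Consumption in Kafka is pull-based and tracked by offsets. A consumer group commits, per partition, the offset of the next record it should read, and after a restart it resumes from that point. The sketch below models a single partition in memory. `ConsumerGroup`, `poll`, and `commit` are hypothetical names, not a client library's signatures.

```python
class ConsumerGroup:
    """Tracks committed offsets per (topic, partition) for one hypothetical group."""

    def __init__(self, group_id):
        self.group_id = group_id
        self.committed = {}  # (topic, partition) -> next offset to read

    def poll(self, topic, partition, log, max_records=2):
        """Return up to max_records (offset, value) pairs starting at the committed offset."""
        start = self.committed.get((topic, partition), 0)
        return list(enumerate(log[start:start + max_records], start=start))

    def commit(self, topic, partition, last_processed_offset):
        # Kafka convention: commit the offset of the *next* record to consume.
        self.committed[(topic, partition)] = last_processed_offset + 1


log = ["e0", "e1", "e2", "e3", "e4"]
group = ConsumerGroup("billing")
processed = []

while True:
    batch = group.poll("payments", 0, log)
    if not batch:
        break
    for _, value in batch:
        processed.append(value)                  # process first...
    group.commit("payments", 0, batch[-1][0])    # ...then commit

print(processed)                         # ['e0', 'e1', 'e2', 'e3', 'e4']
print(group.committed[("payments", 0)])  # 5
```

Committing only after processing gives at-least-once delivery. If the consumer crashes between processing and committing, the same records are read again on restart, so downstream handling should tolerate duplicates.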

What can I do with the API connection?

Real-time Stream Processing

By connecting to Kafka via API, data teams can automate complex stream processing workflows. The API enables real-time message handling, automated data transformations, and integration with downstream systems, so data is processed promptly without sacrificing reliability or performance.
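Most stream-processing steps follow a consume, transform, produce shape. The sketch below applies that shape to one batch of JSON-encoded record values. It parses each record, filters out small amounts, adds a derived field, and re-serializes the result for an output topic. The field names (`amount_cents`, `currency`), the threshold, and the choice to set malformed records aside in a dead-letter list are all hypothetical design choices for illustration.

```python
import json


def transform(raw_records, min_amount_cents=1000):
    """Consume -> transform -> produce for one batch of JSON-encoded values.

    Returns (output_records, dead_letters). Field names are illustrative only.
    """
    output, dead_letters = [], []
    for raw in raw_records:
        try:
            event = json.loads(raw)
            amount = int(event["amount_cents"])
        except (KeyError, TypeError, ValueError):  # JSONDecodeError is a ValueError
            dead_letters.append(raw)  # keep bad input for inspection instead of failing the batch
            continue
        if amount < min_amount_cents:
            continue  # filter: ignore small transactions
        event["amount"] = amount / 100  # enrich with a decimal amount
        output.append(json.dumps(event, sort_keys=True))
    return output, dead_letters


batch = [
    '{"id": 1, "amount_cents": 2500, "currency": "USD"}',
    '{"id": 2, "amount_cents": 300, "currency": "USD"}',
    'not json',
]
out, dlq = transform(batch)
print(out)  # ['{"amount": 25.0, "amount_cents": 2500, "currency": "USD", "id": 1}']
print(dlq)  # ['not json']
```

Because the function holds no state between records, the same logic can run in parallel on many partitions at once.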

Event-driven Automation

Organizations can leverage the API to build automated event-driven systems. The system can react to specific events in real-time, trigger automated workflows, and coordinate responses across distributed services. This automation helps create responsive and scalable architectures while reducing manual intervention.
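Reacting to specific events usually comes down to routing each consumed record to a handler based on its event type. The sketch below registers handlers with a decorator and dispatches events to them. Unknown types are counted instead of raising an error. The `type` field, the event names, and the handler functions are hypothetical.

```python
from collections import Counter

HANDLERS = {}
unhandled = Counter()


def on(event_type):
    """Register a handler function for one event type."""
    def register(func):
        HANDLERS[event_type] = func
        return func
    return register


@on("order.created")
def reserve_inventory(event):
    return f"reserve stock for order {event['order_id']}"


@on("order.cancelled")
def release_inventory(event):
    return f"release stock for order {event['order_id']}"


def dispatch(event):
    """Route one consumed event to its handler; count types nobody handles."""
    handler = HANDLERS.get(event.get("type"))
    if handler is None:
        unhandled[event.get("type")] += 1
        return None
    return handler(event)


events = [
    {"type": "order.created", "order_id": 42},
    {"type": "order.refunded", "order_id": 42},
    {"type": "order.cancelled", "order_id": 42},
]
results = [dispatch(e) for e in events]
print(results)  # ['reserve stock for order 42', None, 'release stock for order 42']
```

Counting unhandled types, rather than failing, keeps one unexpected event from stalling the whole consumer. The counter can also feed an alert.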

Monitoring and Analytics

Operations teams can automate their streaming analytics through the API connection. The system can track message flows and consumer lag, analyze throughput patterns, and generate real-time performance metrics. This gives teams visibility into streaming operations and lets them address problems before they affect downstream systems.
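Consumer lag is one of the most useful streaming metrics. For each partition, it is the log-end offset minus the consumer group's committed offset. Kafka's `kafka-consumer-groups.sh --describe` tool reports this value as `LAG`. The sketch below computes per-partition and total lag from two offset maps and flags partitions above a threshold. The offsets are hard-coded sample values rather than measurements, and the threshold is arbitrary.

```python
def consumer_lag(end_offsets, committed_offsets):
    """Per-partition lag = log-end offset - committed offset.

    A partition with no committed offset is treated as committed = 0, which is an
    assumption; where a new group really starts depends on auto.offset.reset.
    """
    return {
        partition: end - committed_offsets.get(partition, 0)
        for partition, end in end_offsets.items()
    }


# Sample values, not real measurements.
end_offsets = {0: 1_500, 1: 1_480, 2: 2_900}
committed = {0: 1_495, 1: 1_480, 2: 1_100}

lag = consumer_lag(end_offsets, committed)
total = sum(lag.values())
alerts = sorted(p for p, value in lag.items() if value > 1_000)

print(lag)     # {0: 5, 1: 0, 2: 1800}
print(total)   # 1805
print(alerts)  # [2]
```

Per-partition lag matters more than the total. A single stuck partition, like partition 2 here, can hide behind a small overall number on a topic with many partitions.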

Data Integration

Data engineers can automate their data integration workflows by connecting various systems through Kafka's API. The system can manage data routing, handle format transformations, and ensure reliable message delivery across different platforms. This integration streamlines data flow while maintaining data consistency and reliability.
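Integration pipelines often convert records from one system's format into another and route them by content. The sketch below turns CSV rows into JSON messages keyed by customer ID and chooses a destination topic based on a region column. The topic names, column names, and routing table are hypothetical. Keying by customer ID means each customer's records share a partition, which is where Kafka's ordering guarantee applies.

```python
import csv
import io
import json

# Hypothetical routing table: region column value -> destination topic.
ROUTES = {"EU": "customers.eu", "US": "customers.us"}
DEFAULT_TOPIC = "customers.unrouted"


def csv_to_messages(csv_text):
    """Turn CSV rows into (topic, key, value) tuples ready to hand to a producer."""
    messages = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        topic = ROUTES.get(row["region"].strip().upper(), DEFAULT_TOPIC)
        key = row["customer_id"]  # same key -> same partition -> per-customer ordering
        value = json.dumps({"id": key, "email": row["email"], "region": row["region"]})
        messages.append((topic, key, value))
    return messages


source = """customer_id,email,region
c-1,ana@example.com,EU
c-2,bo@example.com,us
c-3,cy@example.com,APAC
"""

for topic, key, value in csv_to_messages(source):
    print(topic, key)
# customers.eu c-1
# customers.us c-2
# customers.unrouted c-3
```

Sending unmatched rows to a catch-all topic, rather than dropping them, keeps the pipeline lossless while the routing rules evolve.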

Fault Tolerance Management

System administrators can automate their fault tolerance checks through the API. The system can monitor partition leadership and in-sync replica status, reassign partitions when brokers fail or are added, and coordinate failure recovery. This helps keep the cluster resilient and minimizes service disruptions.
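Replication health is usually read from partition metadata. Each partition has a leader, a replica set, and an in-sync replica (ISR) set. A partition is under-replicated when its ISR is smaller than its replica set. Producers using `acks=all` start getting errors once the ISR falls below the topic's `min.insync.replicas`. The sketch below checks both conditions and also flags partitions with no leader. The metadata dict layout and the sample values are hypothetical.

```python
def replication_report(partitions, min_insync_replicas):
    """Classify partitions from metadata shaped like
    {partition: {"leader": broker_id_or_None, "replicas": [...], "isr": [...]}}.
    """
    report = {"offline": [], "under_replicated": [], "below_min_isr": []}
    for pid, meta in sorted(partitions.items()):
        if meta["leader"] is None:
            report["offline"].append(pid)  # no leader: partition is unavailable
            continue
        if len(meta["isr"]) < len(meta["replicas"]):
            report["under_replicated"].append(pid)
        if len(meta["isr"]) < min_insync_replicas:
            report["below_min_isr"].append(pid)  # acks=all writes would be rejected
    return report


# Sample metadata for a topic with replication factor 3 (illustrative only).
metadata = {
    0: {"leader": 1, "replicas": [1, 2, 3], "isr": [1, 2, 3]},
    1: {"leader": 2, "replicas": [2, 3, 1], "isr": [2, 3]},
    2: {"leader": 3, "replicas": [3, 1, 2], "isr": [3]},
    3: {"leader": None, "replicas": [1, 2, 3], "isr": []},
}

print(replication_report(metadata, min_insync_replicas=2))
# {'offline': [3], 'under_replicated': [1, 2], 'below_min_isr': [2]}
```

Partition 1 is degraded but still accepts `acks=all` writes. Partition 2 does not, which is why the two lists are reported separately.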

Through this API connection, organizations can build event streaming workflows on top of Kafka's capabilities while reducing manual operations and operational complexity. The integration supports stream processing, automated event handling, and system integration, so teams can focus on building responsive applications rather than managing streaming infrastructure.