List of Integrations

Integration:

API

The first time interacting with an API can feel daunting. Each API is unique and requires different settings, but is generally standardized to make understanding and connecting to an API accessible.

To learn how to best use APIs in Parabola, check out our video guides.

Types of APIs

Parabola works best with two types of APIs. The most common API type to connect to is a REST API. Another API type rising in popularity is a GraphQL API. Parabola may be able to connect to a SOAP API, but it is unlikely due to how they are structured.

To evaluate if Parabola can connect with an API, reference this flow chart.

REST API

A REST API is an API that can return data by making a request to a specific URL. Each request is sent to a specific resource of an API using a unique Endpoint URL. A resource is an object that contains the data being requested. Common examples of a resource include Orders, Customers, Transactions, and Events.

To receive a list of orders in Squarespace, the Pull from an API step will make a request to the Squarespace's Orders resource using an Endpoint URL:

https://api.squarespace.com/{api-version}/commerce/orders

GraphQL API

GraphQL is a new type of API that allows Parabola to specify the exact data it needs from an API resource through a request syntax known as a GraphQL query. To get started with this type of API call in Parabola, set the request type to "POST" in any API step, then select "GraphQL" as the Protocol of the request body.

Once your request type is set, you can enter your query directly into the request body. When forming your query, it can be helpful to use a formatting tool to ensure correct syntax.

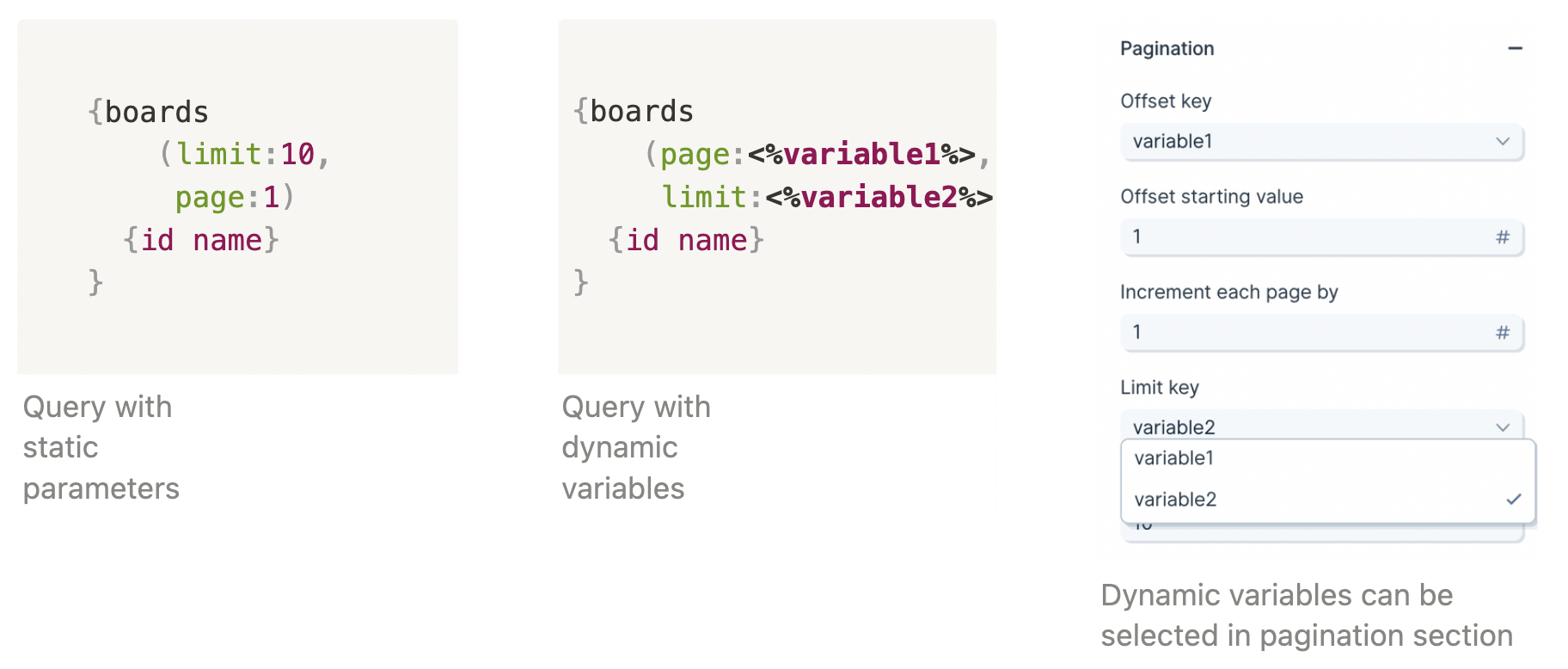

Our GraphQL implementation current supports Offset Limit pagination, using variables inserted directly into the query. Variables can be created by inserting any single word between the brackets '<%%>'. Once created, variables will appear in the dropdown list in the "Pagination" section. One of these variables should correspond to your "limit", and the other should correspond to "offset."

The limit field is static; it represents the number of results returned in each API request. The offset field is incremented in each subsequent request based on the "Increment each page by" value. The exact implementation will be specific to your API docs.

After configuring your pagination settings, also be sure to adjust the "Maximum pages to fetch" setting in the "Rate Limiting" section as well to retrieve more or less results.

GraphQL can be used for data mutations in addition to queries, as specified by the operation type at the start of your request body. For additional information on Graph queries and mutations, please reference GraphQL's official documentation.

Reading API Documentation

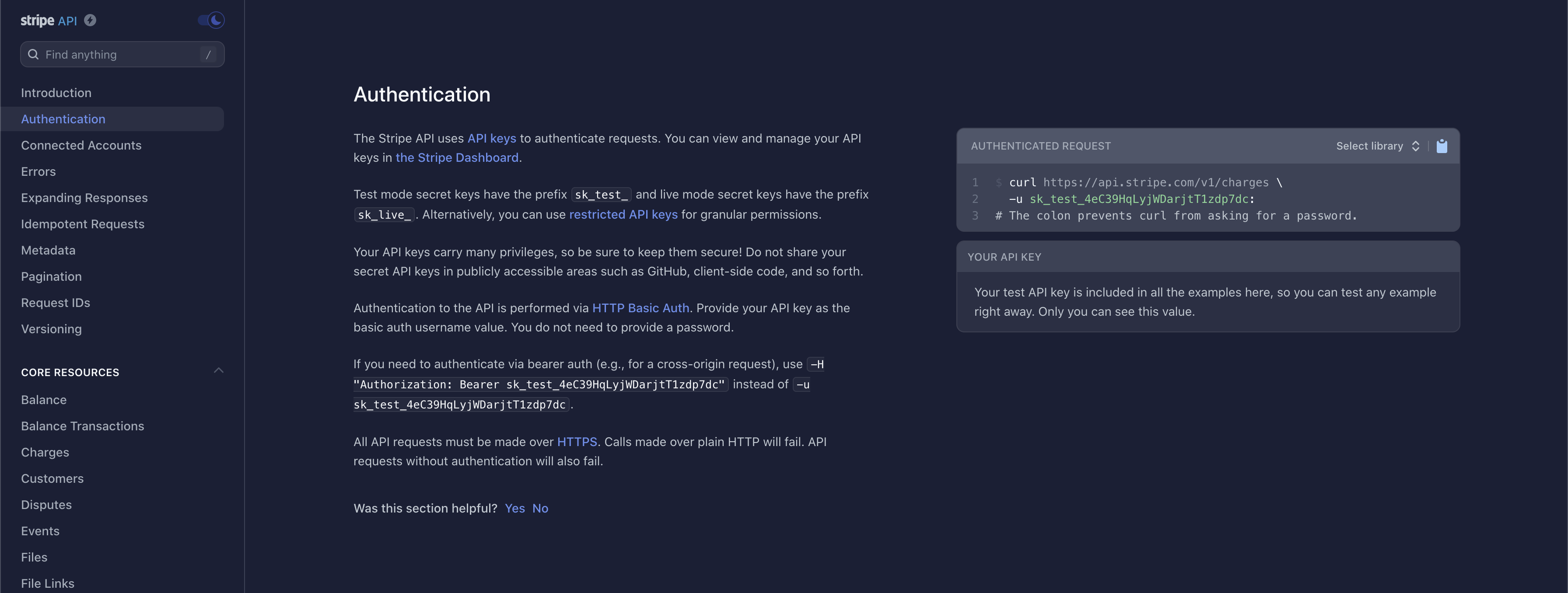

The first step to connect to an API is to read the documentation that the service provides. Oftentimes, the documentation is commonly referred to as the API Reference, or something similar. These pages tend to feature URL and code block content.

The API Reference, always provides at least two points of instruction. The first point outlines how to Authenticate a request to give a user or application permission to access the data. The second point outlines the API resources and Endpoint URLs, or where a request can be sent.

Authentication

Most APIs require authentication to access their data. This is likely the first part of their documentation. Try searching for the word "Authentication" in their documentation.

The most common types of authentication are Bearer Tokens, Username/Password (also referred to as Basic), and OAuth2.0.

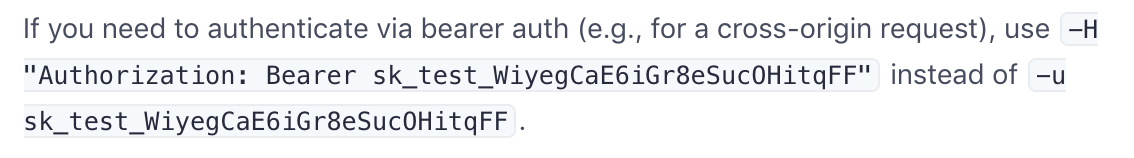

Bearer Token

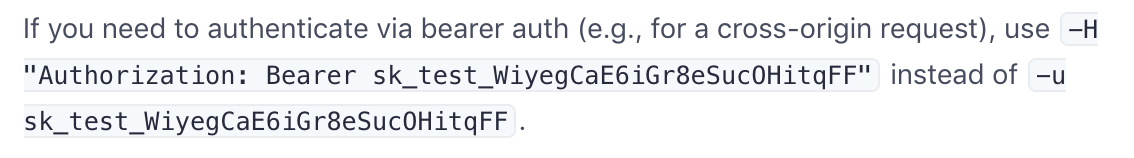

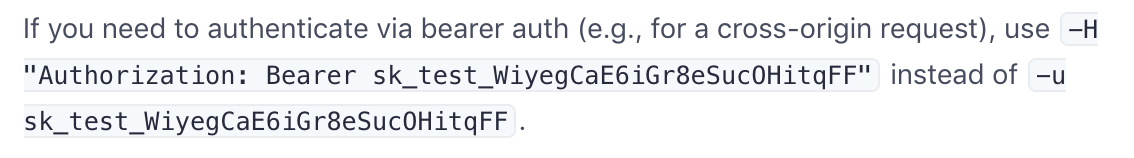

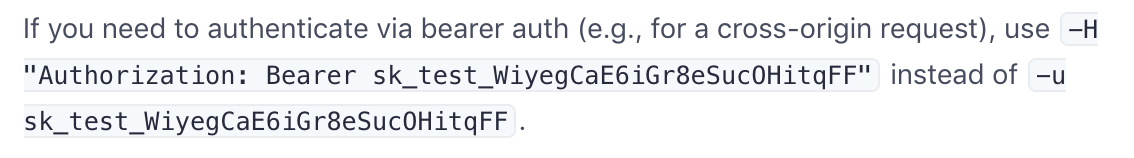

This method requires you to send your API Key or API Token as a bearer token. Take a look at this example below:

The part that indicates it is a bearer token is this:

-H "Authorization: Bearer sk_test_WiyegCaE6iGr8eSucOHitqFF"

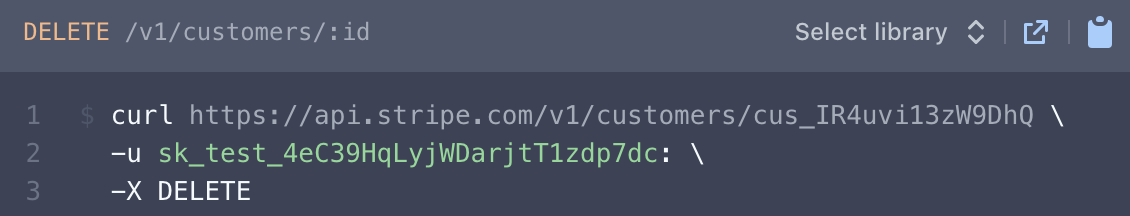

Username/Password (Basic)

This method is also referred to as Basic Authorization or simply Basic. Most often, the username and password used to sign into the service can be entered here.

However, some APIs require an API key to be used as a username, password, or both. If that's the case, insert the API key into the respective field noted in the documentation.

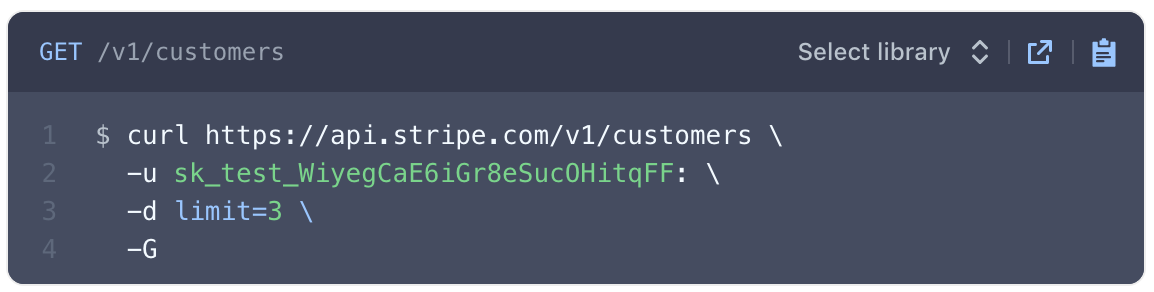

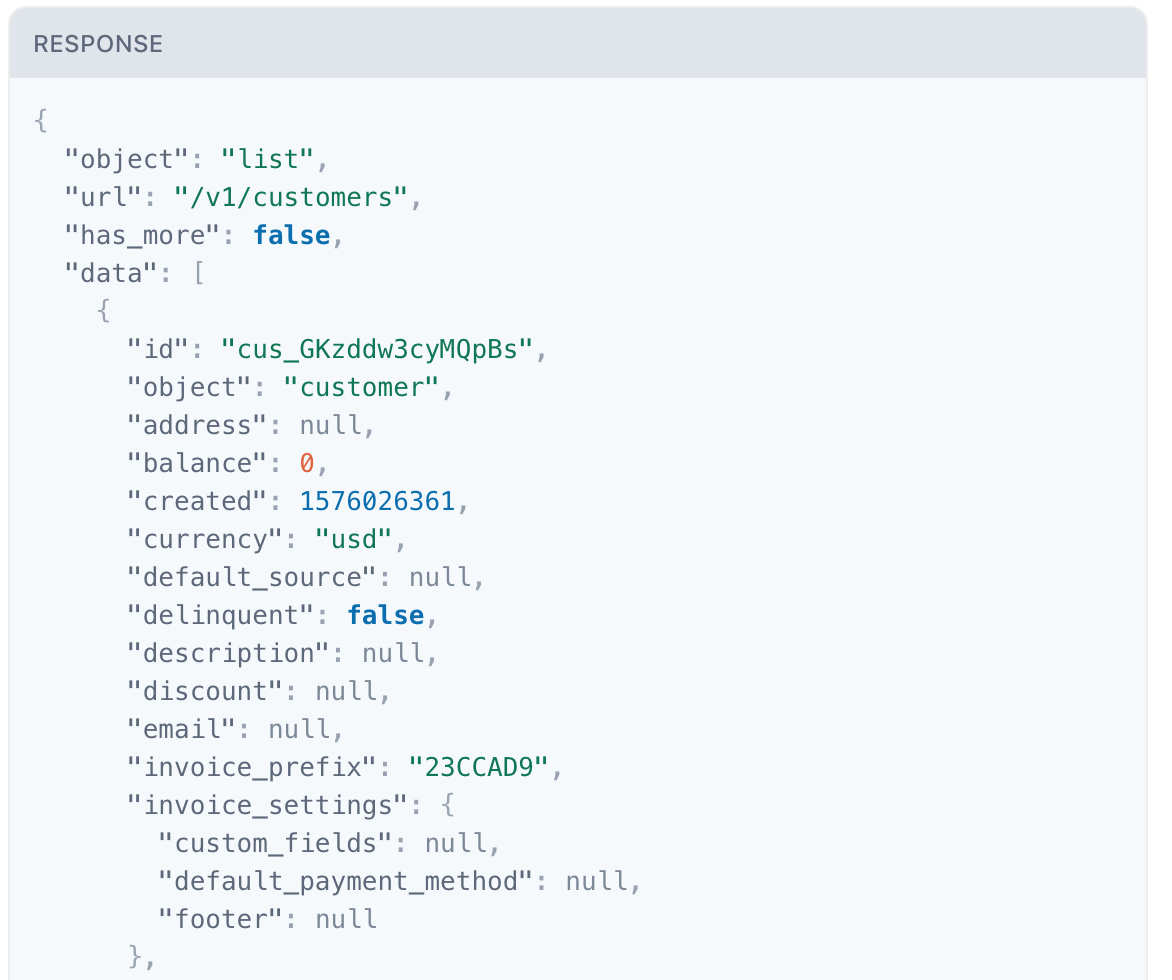

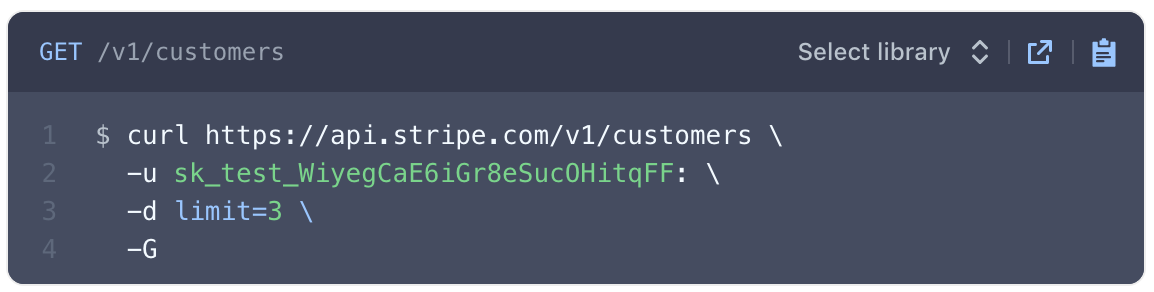

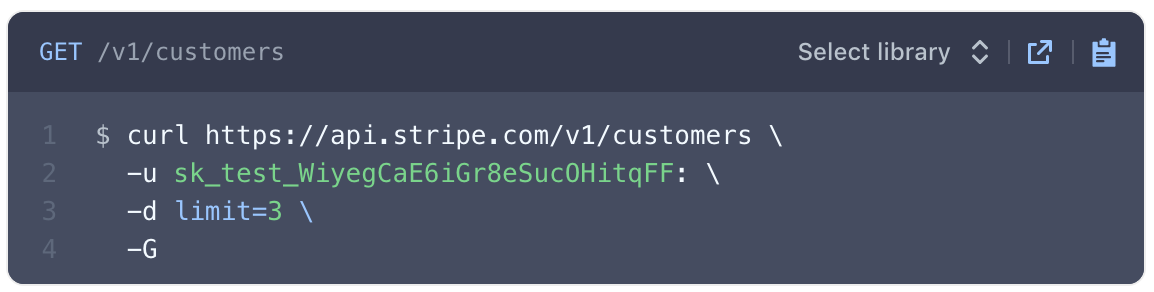

The example below demonstrates how to connect to Stripe's API using the Basic Authorization method.

The Endpoint URL shows a request being made to a resource called "customers". The authorization type can be identified as Basic for two reasons:

- The -u indicates Basic Authorization.

- Most APIs reference the username and password formatted as username:password. There is a colon : . This indicates that only a username is required for authentication.

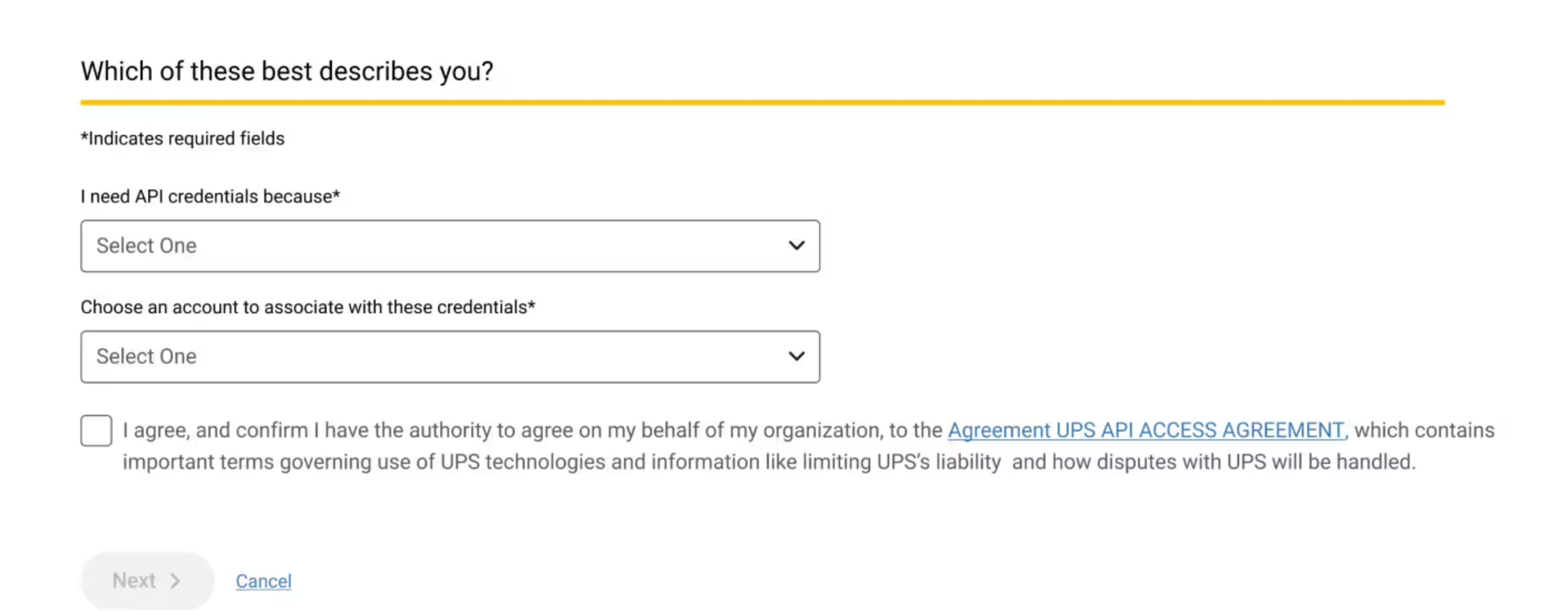

OAuth2.0

This method is an authorization protocol that allows users to sign into a platform using a third-party account. OAuth2.0 allows a user to selectively grant access for various applications they may want to use.

Authenticating via OAuth2.0 does require more time to configure. For more details on how to authorize using this method, read our guide Using OAuth2.0 in Parabola.

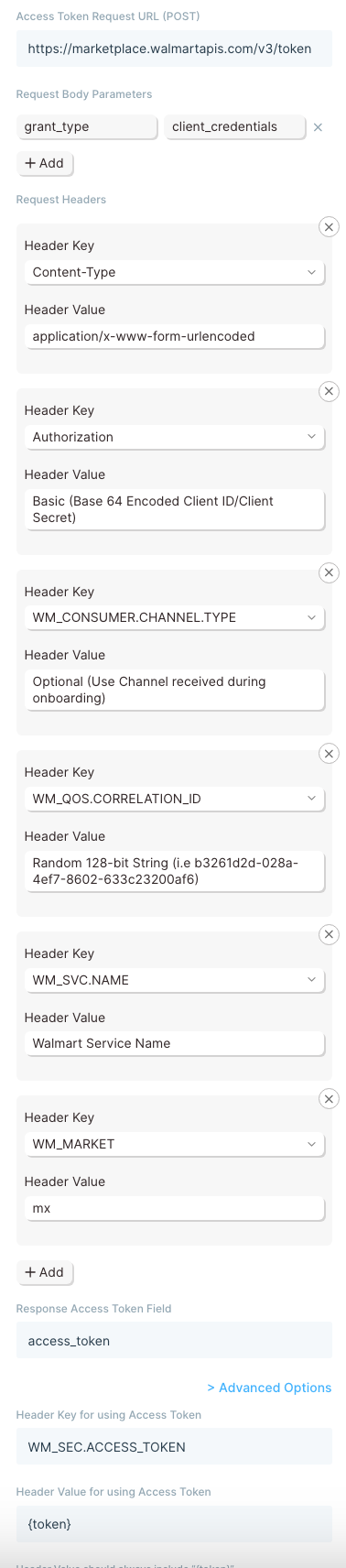

Expiring Access Token

Some APIs will require users to generate access tokens that have short expirations. Generally, any token that expires in less than 1 day is considered to be "short-lived" and may be using this type of authentication. This type of authentication in Parabola serves a grouping of related authentication styles that generally follow the same pattern.

One very specific type of authentication that is served by this option in Parabola is called OAuth2.0 Client Credentials. This differs from our standard OAuth2.0 support, which is built specifically for OAuth2.0 Authorization Code. Both Client Credentials and Authorization Code are part of the OAuth2.0 spec, but represent different Grant Types.

Authenticating with the Expiring Access Token option is more complex than options like Bearer Token, but less complex than OAuth2.0. For more details on how to use this option, read our guide Using Expiring Access Tokens in Parabola.

Resources

A resource is a specific category or type of data that can be queried using a unique Endpoint URL. For example, to get a list of customers, you might use the Customer resource. To add emails to a campaign, use the Campaign resource.

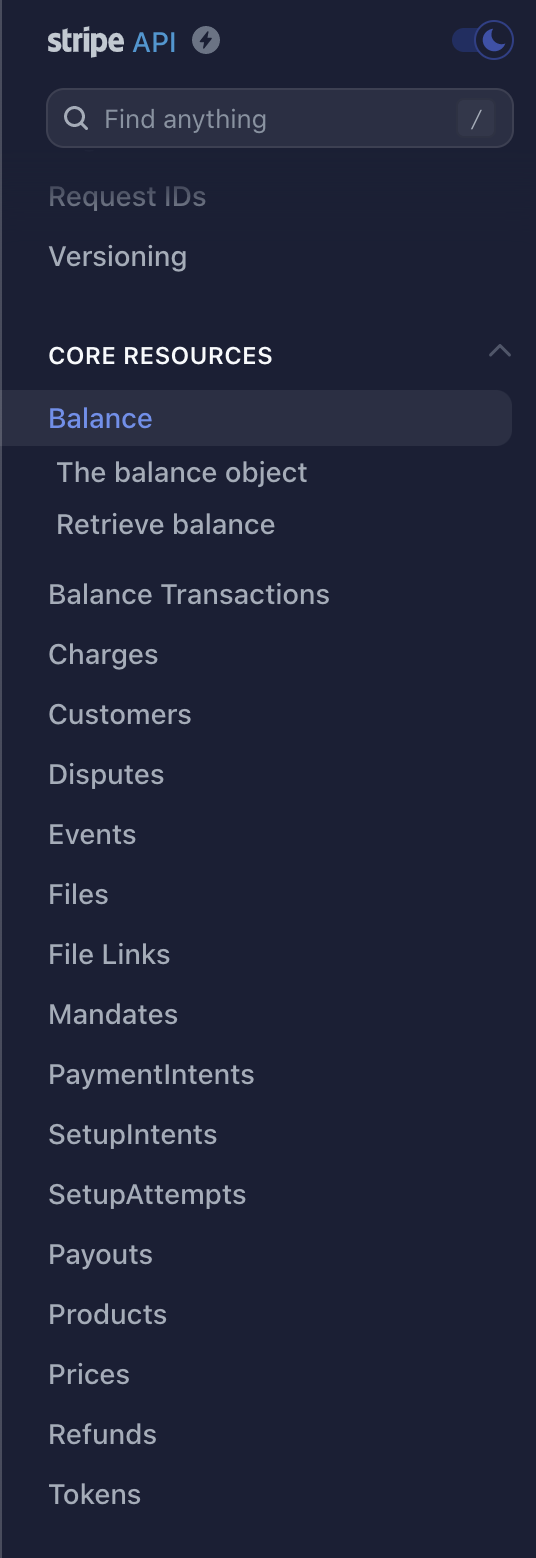

Each resource has a variety of Endpoint URLs that instruct you how to structure a URL to make a request to a resource. Stripe has a list of resources including "Balance", "Charges", "Events", "Payouts", and "Refunds".

HTTP Methods

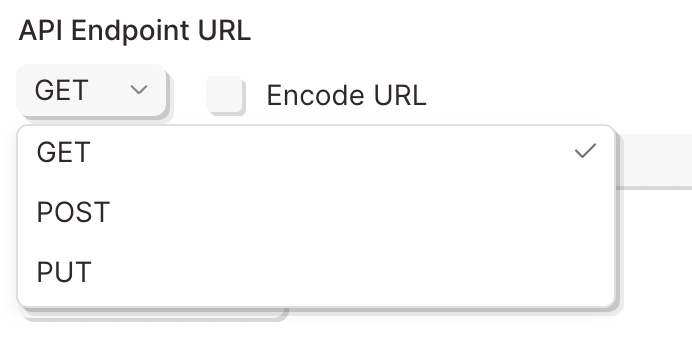

HTTP methods, or verbs, are a specific type of action to make when sending a request to a resource. The primary verbs are GET, POST, PUT, PATCH, and DELETE.

- The GET verb is used to receive data.

- The POST verb is used to create new data.

- The PUT verb is used to update existing data.

- The PATCH verb is used to modify a specific portion of the data.

- The DELETE verb is used to delete data.

Custom Headers

A header is a piece of additional information to be sent with the request to an API. If an API requires additional headers, it is commonly noted in their documentation as -H.

Remember the authentication methods above? Some APIs list the authentication type to be sent as a header. Since Parabola has specific fields for authentication, those headers can typically be ignored.

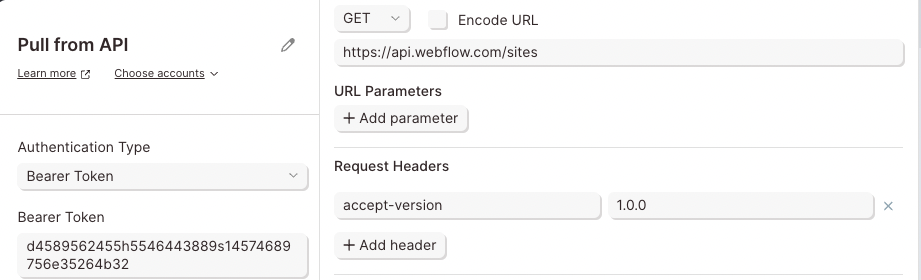

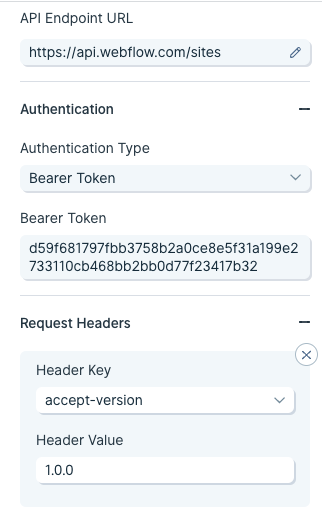

Taking a look at Webflow's API, they show two headers are required:

The first -H header is linked to a key called Authorization. Parabola takes care of that. It does not need to be added as a header. The second -H header is linked to a key called accept-version. The value of the header is 1.0.0. This likely indicates which version of Webflow's API will be used.

JSON

JavaScript Object Notation, or more commonly JSON, is a way for an API to exchange data between you and a third-party. JSON is follows a specific set of syntax rules.

An object is set of key:value pairs and is wrapped in curly brackets {}. An array is a list of values linked to a single key or a list of keys linked to a single object.

JSON in API documentation may look like this:

Interpreting cURL

Most documentation will use cURL to demonstrate how to make a request using an API.

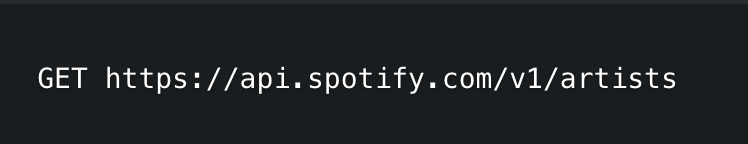

Let's take a look at this cURL example referenced in Spotify's API:

curl -X GET "[<https://api.spotify.com/v1/artists?ids=0oSGxfWSnnOXhD2fKuz2Gy>](<https://api.spotify.com/v1/artists?ids=0oSGxfWSnnOXhD2fKuz2Gy,3dBVyJ7JuOMt4GE9607Qin>)"

-H "Authorization: Bearer {your access token}"

We can extract the following information:

- Method: GET

- Resource: artists

- Endpoint URL:

https://api.spotify.com/v1/artists?ids=0oSGxfWSnnOXhD2fKuz2Gy

- Authorization: Bearer token

- Headers: "Authorization: Bearer {your access token}"

Because Parabola handles Authorization separately, the bearer token does not need to be passed as a header.

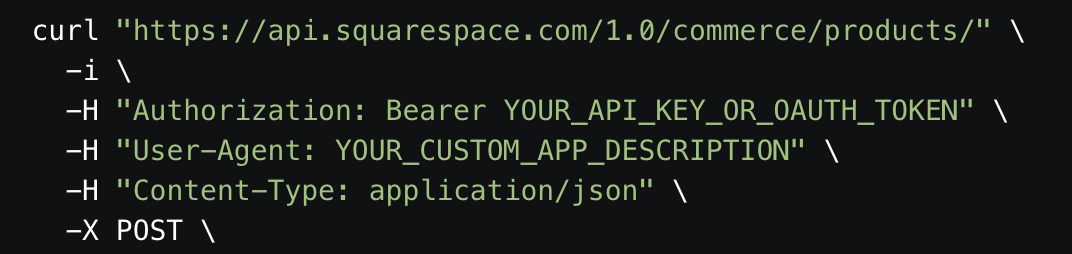

Here's another example of a cURL request in Squarespace:

This is what we can extract:

- Method: POST

- Resource: products

- Endpoint URL:

https://api.squarespace.com/1.0/commerce/products/

- Authorization: Bearer token

- Headers:

"Authorization: Bearer YOUR_API_KEY_OR_OAUTH_TOKEN", "User-Agent: YOUR_CUSTOM_APP_DESCRIPTION"

- Content-Type: application/json

Parabola also passes Content-Type: application/json as a header automatically. That does not need to be added.

Error Codes

Check out this guide to learn more troubleshooting common API errors.

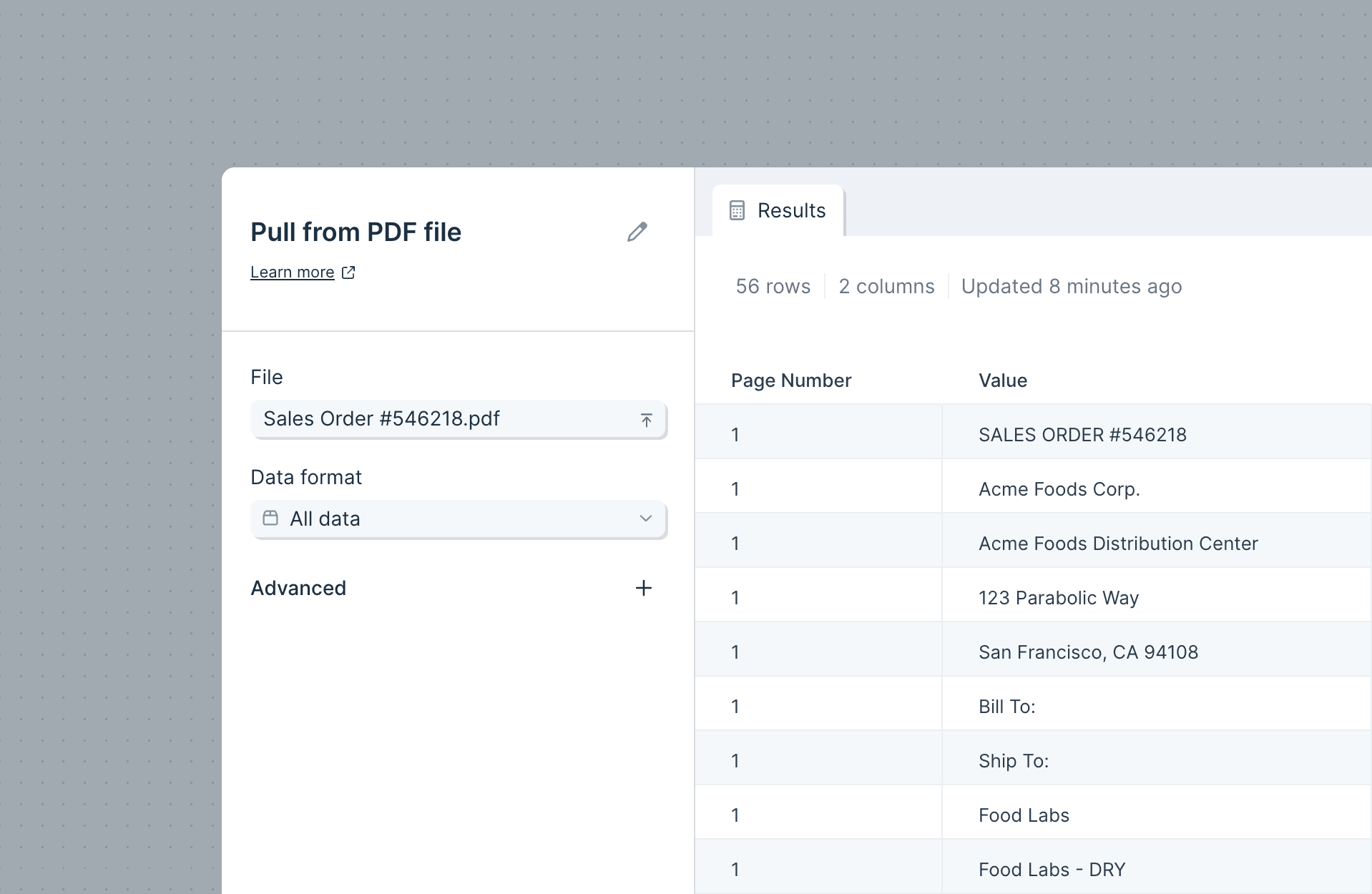

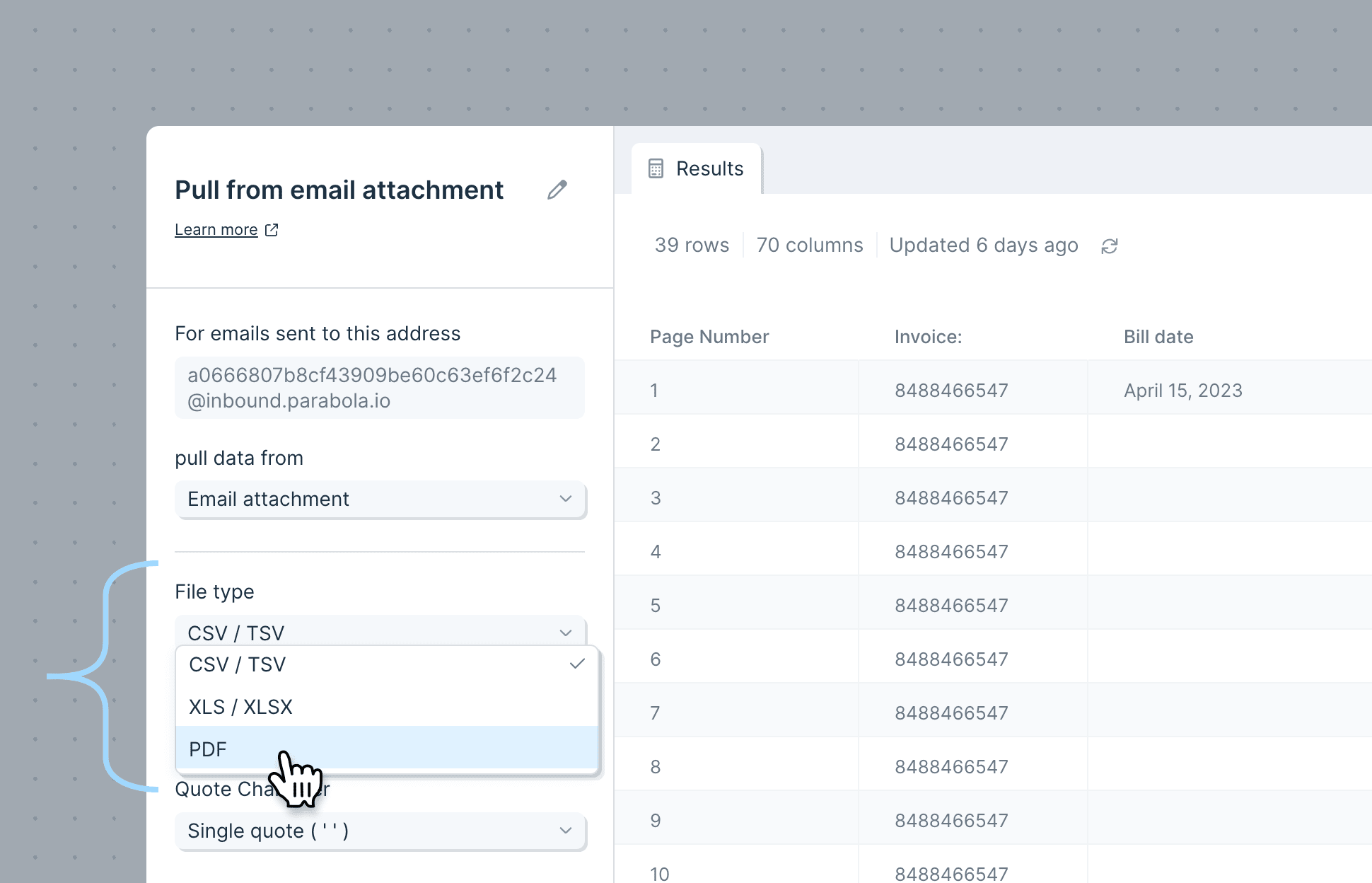

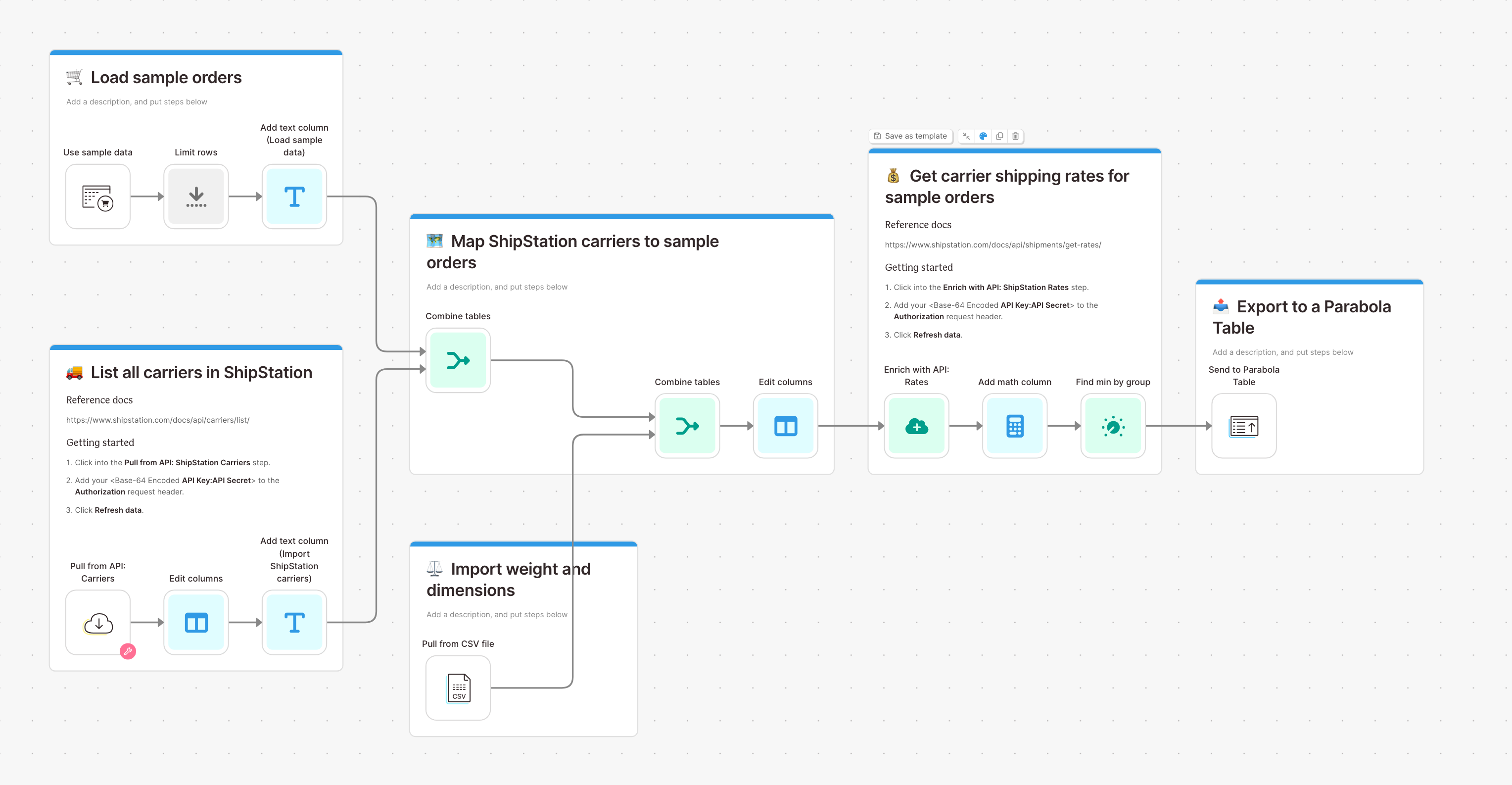

The Pull from an API step sends a request to an API to return specific data. In order for Parabola to receive this data, it must be returned in a CSV, JSON, or XML format. This step allows Parabola to connect to a third-party to import data from another service, platform, or account.

You might wonder when it is best to use the Pull from API step vs Enrich with API step. If you need to take existing data and pass it through an API, we recommend you use Enrich with API in the middle of the Flow. Enrich with API makes requests row by row. If you just need to fetch data and join it into the middle of a Flow, you could use the “Pull from API” step and then a join step.

Basic Settings

To use the Pull from an API step, the "Request Type" and "API Endpoint URL" fields are required.

Request Type

There are two ways to request data from an API: using a GET request or using a POST request. These are also referred to as verbs, and are standardized throughout REST APIs.

The most common request for this step is a GET request. A GET request is a simple way to ask for existing data from an API.

"Hey API, can you GET me data from the server?"

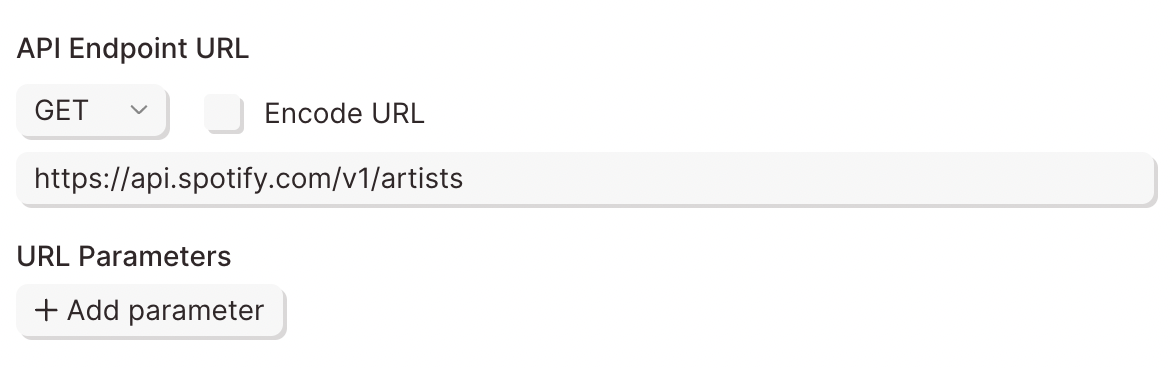

To receive all artists from Spotify, their documentation outlines using GET request to Artist resource using this Endpoint URL:

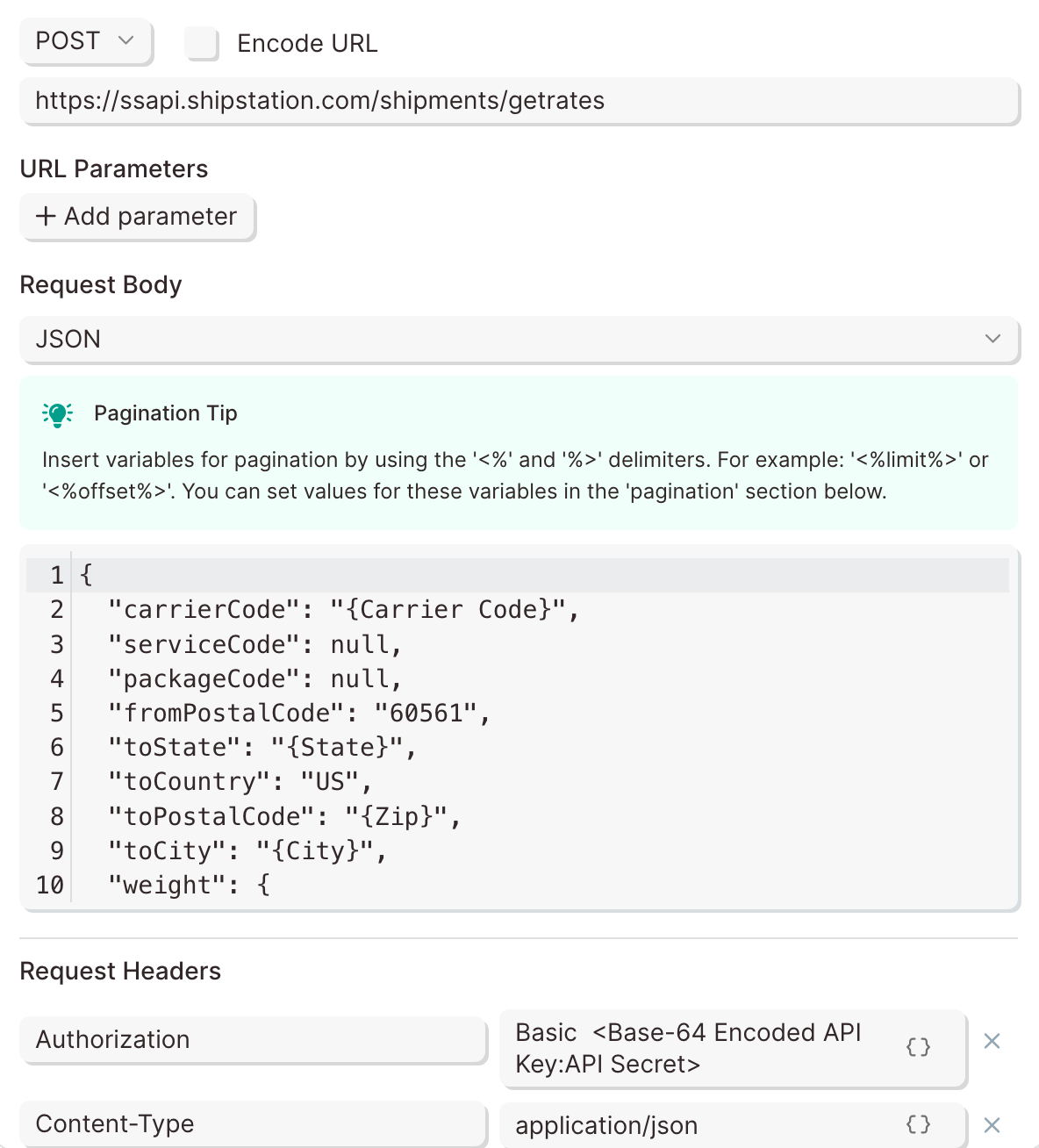

Some APIs will require a POST request to import data, however it is uncommon. A POST request is a simple way to make changes to existing data such as adding a new user to a table.

The request information is sent to the API in theJSON body of the request. The JSON body is a block that outlines the data that will be added.

Hey API, can you POST my new data to the server? The new data is in the JSON body.

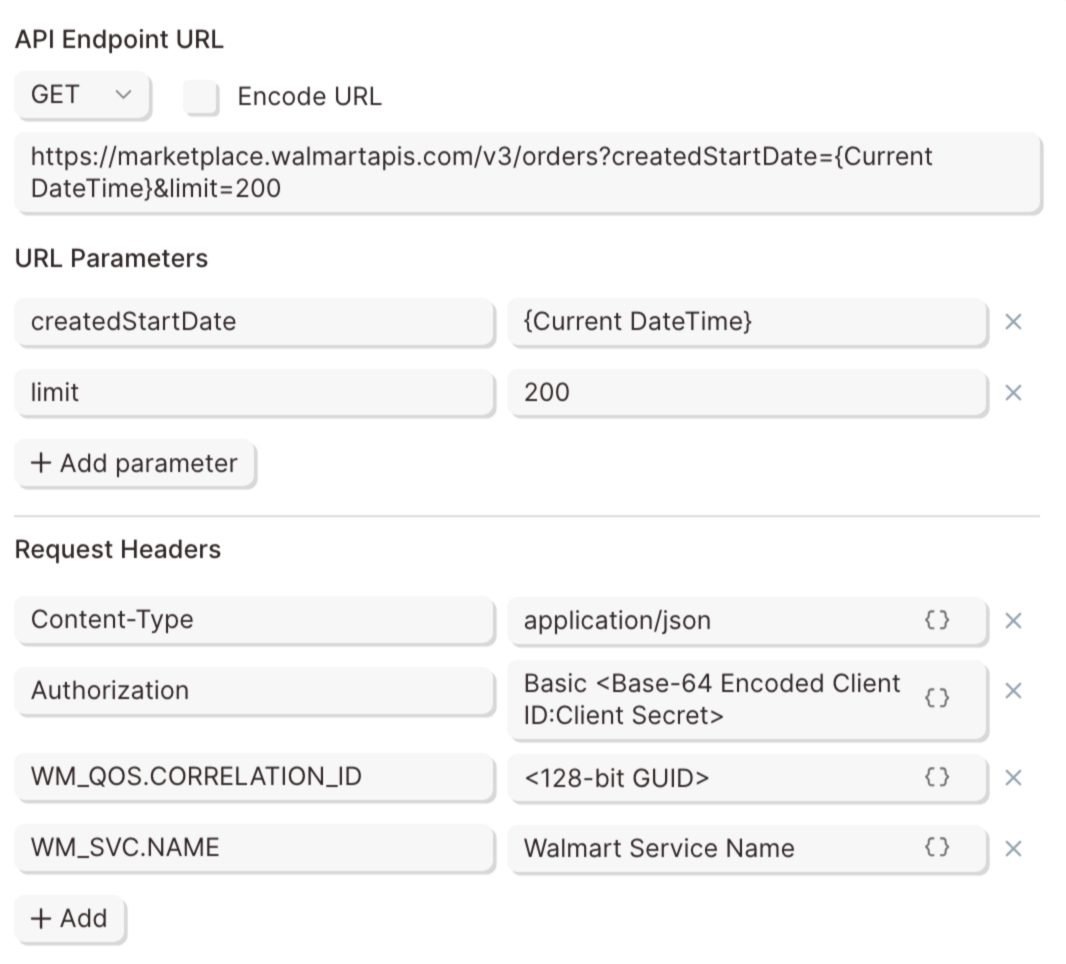

API Endpoint URL

Similar to typical websites, APIs use URLs to request or modify data. More specifically, an API Endpoint URL is used to determine where to request data from or where to send new data to. Below is an example of an API Endpoint URL.

To add your API Endpoint URL, click the API Endpoint URL field to open the editor. You can add URL parameters by clicking the +Add icon under the "URL Parameters" text in that editor. The endpoint dynamically changes based on the key/value pairs entered into this field.

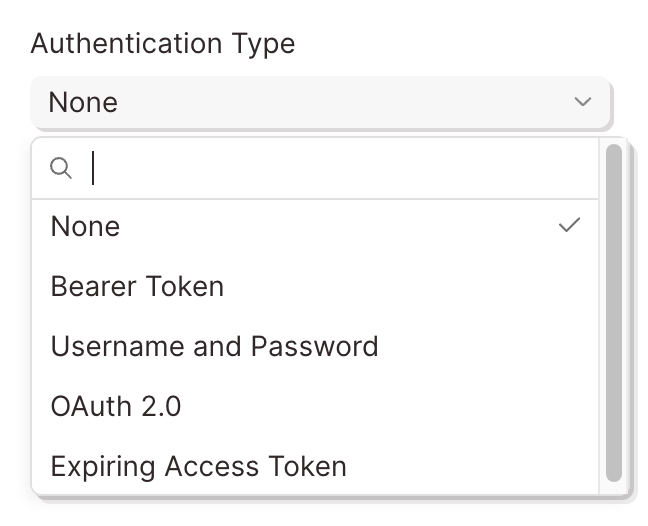

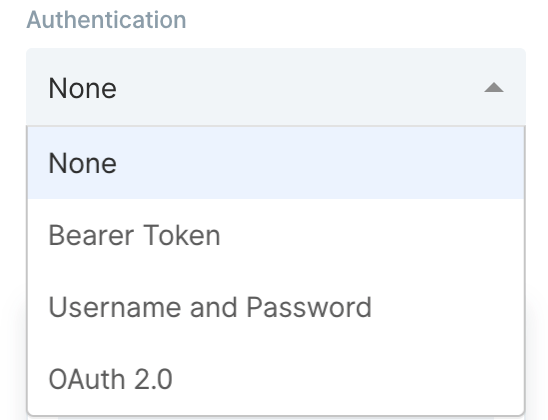

Authentication

Most APIs require authentication to access their data. This is likely the first part of their documentation. Try searching for the word Authentication in their documentation.

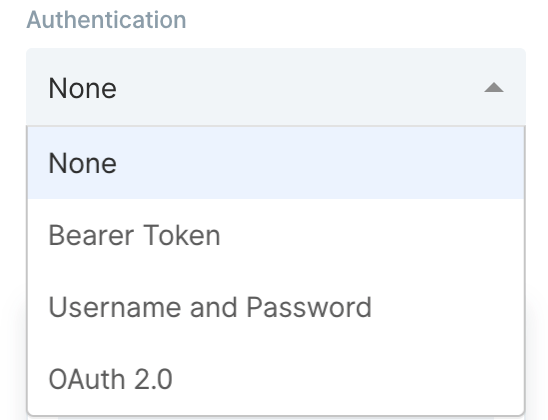

Here are the Authentication types available in Parabola:

The most common types of authentication are Bearer Tokens, Username/Password (also referred to as Basic), and OAuth2.0. Parabola has integrated these authentication types directly into this step.

Bearer Token

This method requires you to send your API Key or API Token as a Bearer Token. Take a look at this example below:

The part that indicates it is a bearer token is this:

-H "Authorization: Bearer sk_test_WiyegCaE6iGr8eSucOHitqFF"

To add this specific token in Parabola, select Bearer Token from the Authorization menu and add "sk_test_WiyegCaE6iGr8eSucOHitqFF" as the value.

Username/Password (Basic)

This method is also referred to as Basic Authorization or simply Basic. Most often, the username and password used to sign into the service can be entered here.

However, some APIs require an API key to be used as a username, password, or both. If that's the case, Insert the API key into the respective field noted in the documentation.

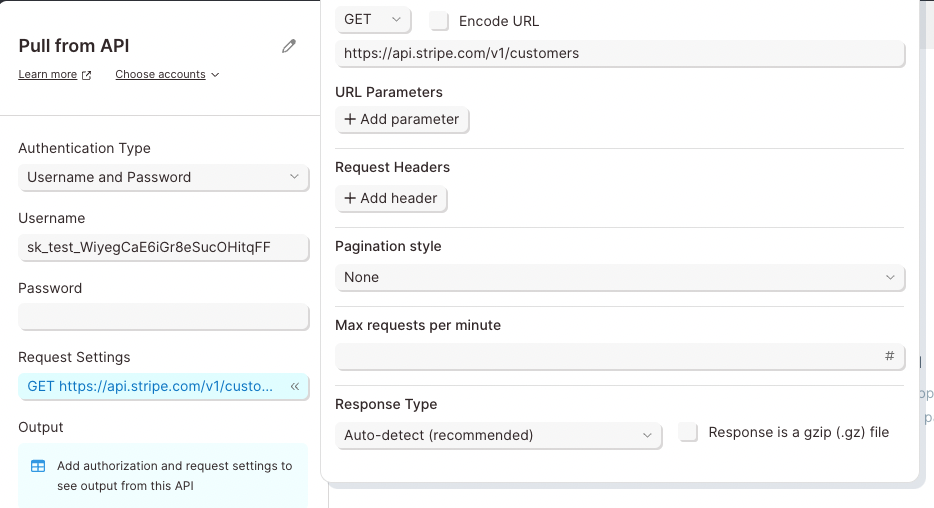

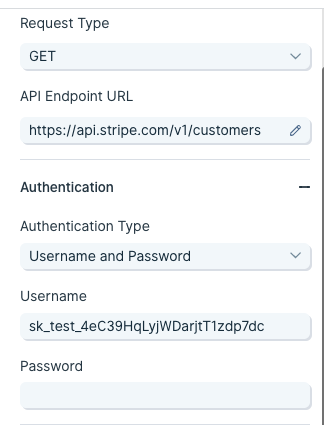

The example below demonstrates how to connect to Stripe's API using the Basic Authorization method.

The Endpoint URL shows a request being made to a resource called customers. The authorization type can be identified as Basic for two reasons:

- The -u indicates Basic Authorization username.

- Most APIs reference the username and password formatted as username:password. There is a colon, which indicates that only a username is required for authentication.

To authorize this API in Parabola, fill in the fields below:

OAuth2.0

This method is an authorization protocol that allows users to sign into a platform using a third-party account. OAuth2.0 allows a user to selectively grant access for various applications they may want to use.

Authenticating via OAuth2.0 does require more time to configure. For more details on how to authorize using this method, read our guide Using OAuth2.0 in Parabola.

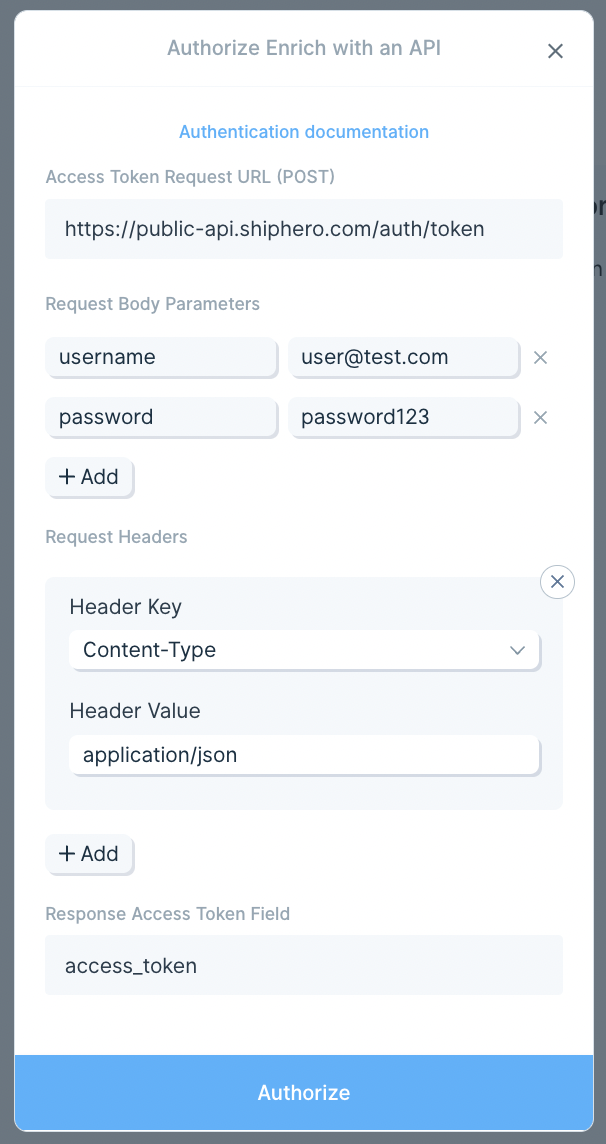

Expiring Access Token

Some APIs will require users to generate access tokens that have short expirations. Generally, any token that expires in less than 1 day is considered to be "short-lived" and may be using this type of authentication. This type of authentication in Parabola serves a grouping of related authentication styles that generally follow the same pattern.

One very specific type of authentication that is served by this option in Parabola is called OAuth2.0 Client Credentials. This differs from our standard OAuth2.0 support, which is built specifically for OAuth2.0 Authorization Code. Both Client Credentials and Authorization Code are part of the OAuth2.0 spec, but represent different Grant Types.

Authenticating with the Expiring Access Token option is more complex than options like Bearer Token, but less complex than OAuth2.0. For more details on how to use this option, read our guide Using Expiring Access Tokens in Parabola.

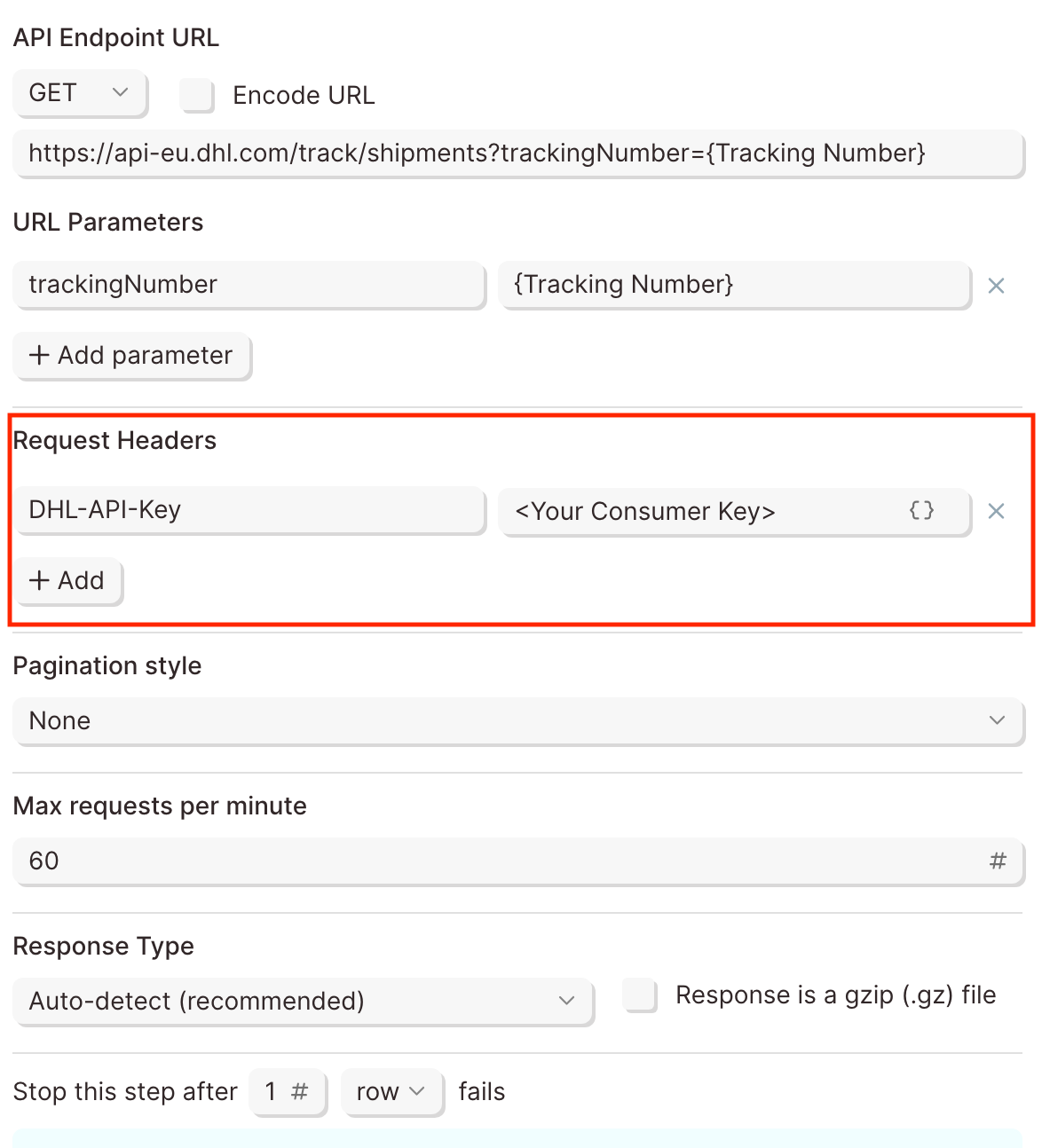

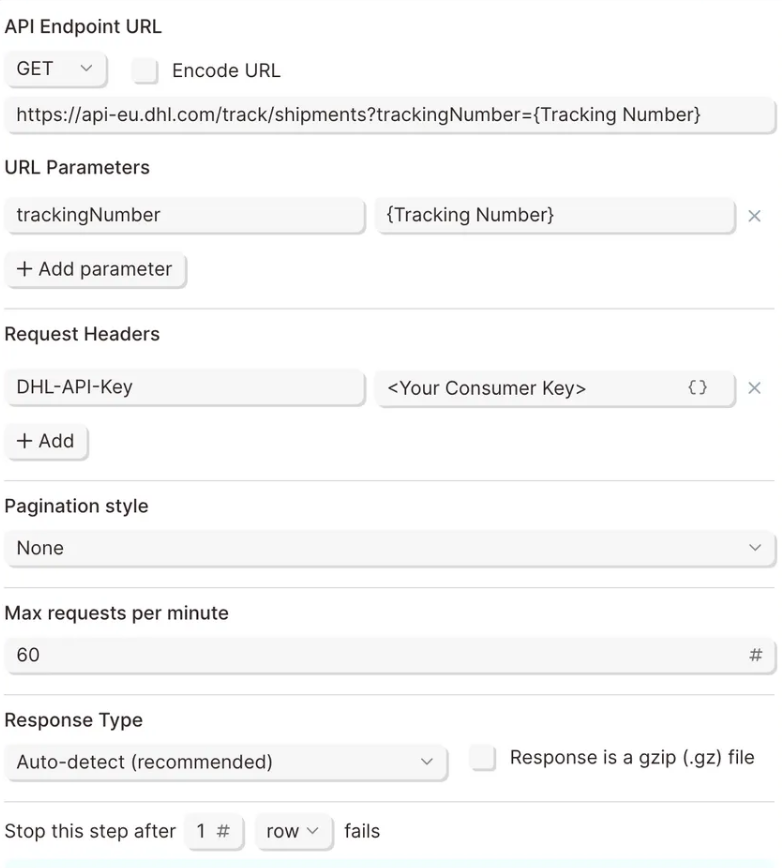

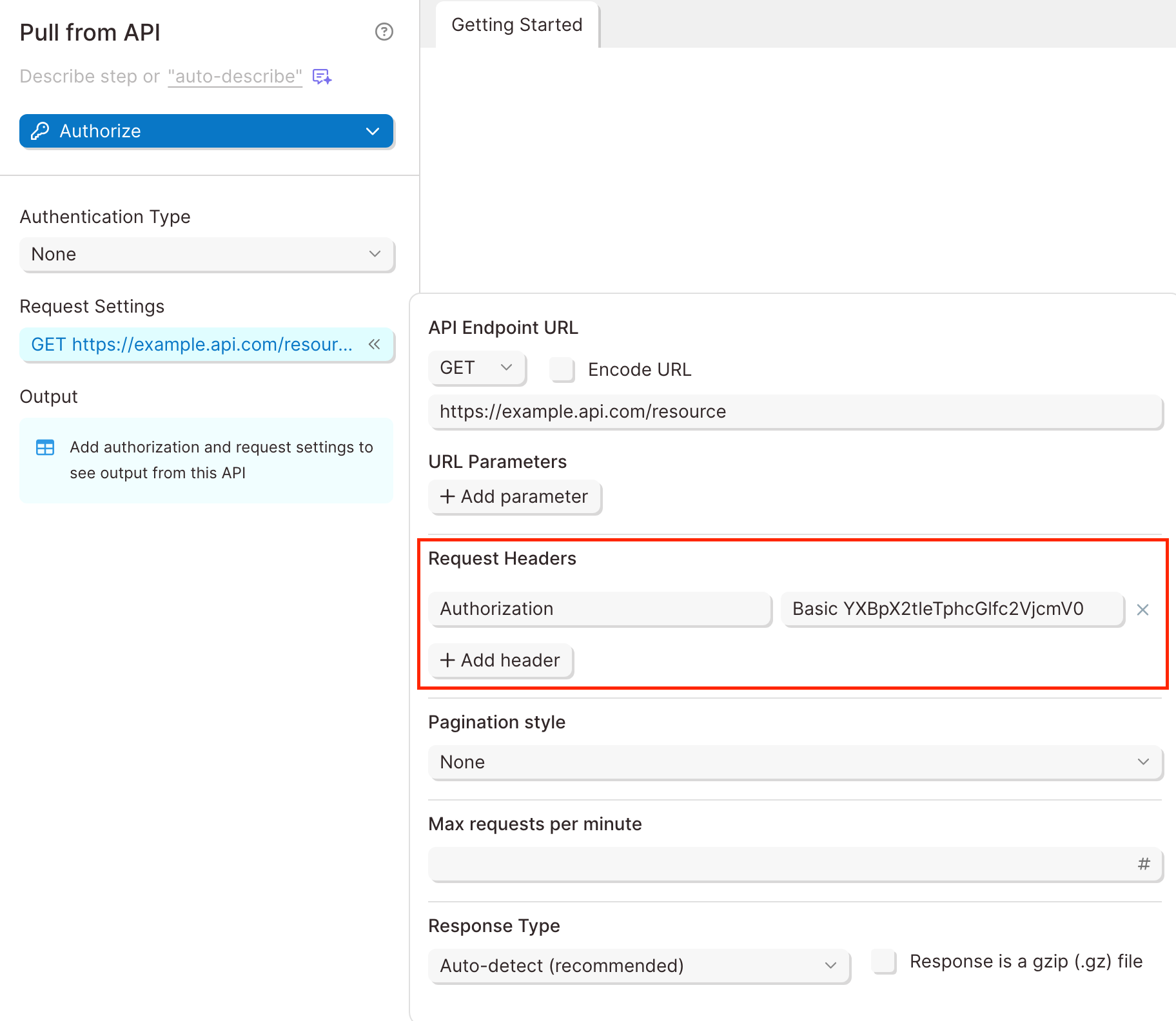

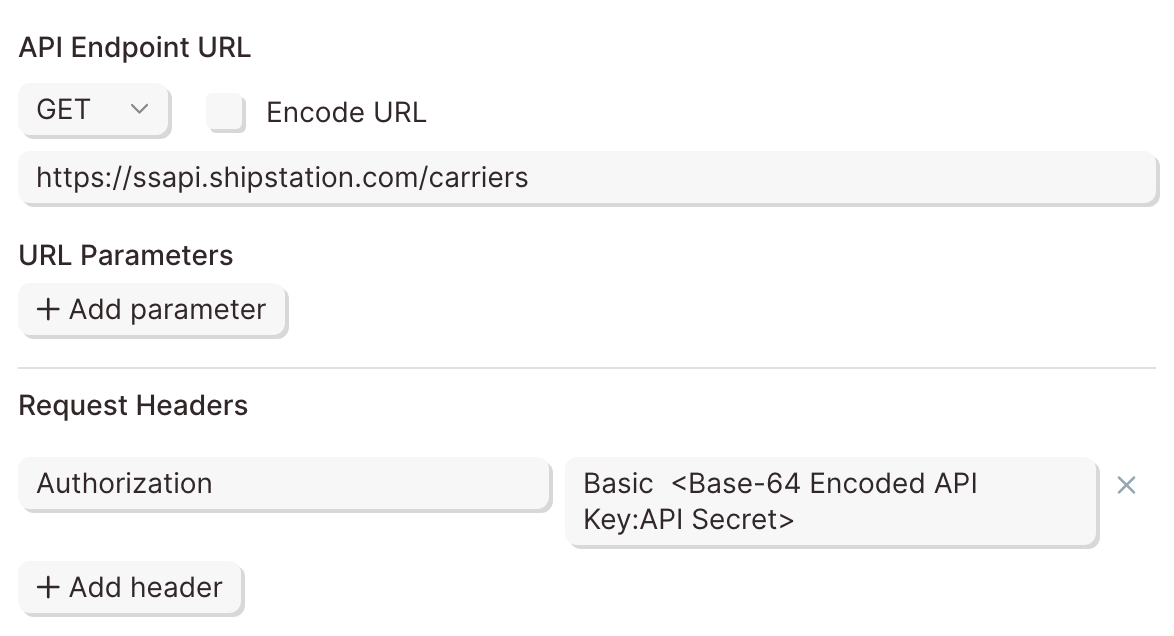

Request Headers

A header is a piece of additional information to be sent with the request to an API. If an API requires additional headers, it is commonly noted in their documentation as -H.

Remember the authentication methods above? Some APIs list the authentication type to be sent as a header. Since Parabola has specific fields for authentication, those headers can typically be ignored.

Taking a look at Webflow's API, they show two headers are required.

The first -H header is linked to a key called Authorization. Parabola takes care of that. It does not need to be added as a header. The second -H header is linked to a key called accept-version. The value of the header is 1.0.0. This likely indicates which version of Webflow's API will be used.

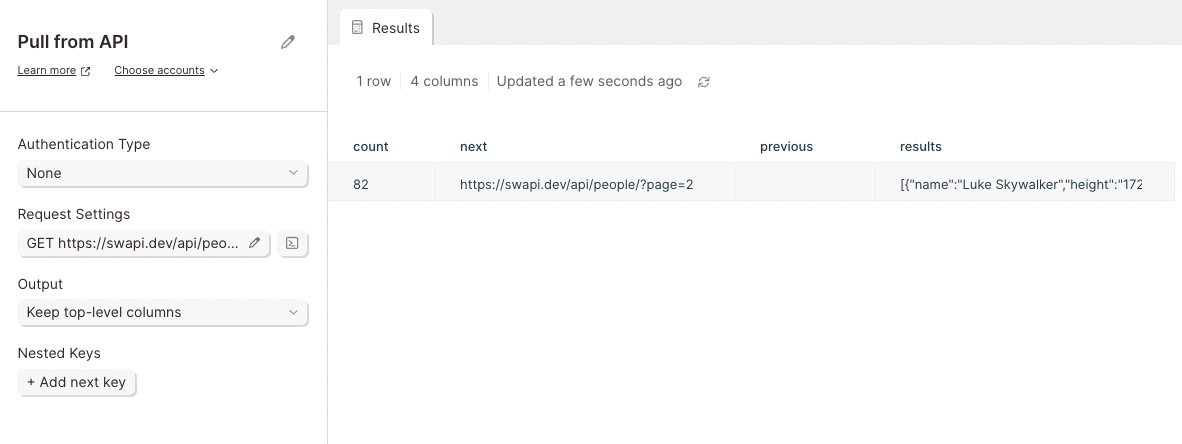

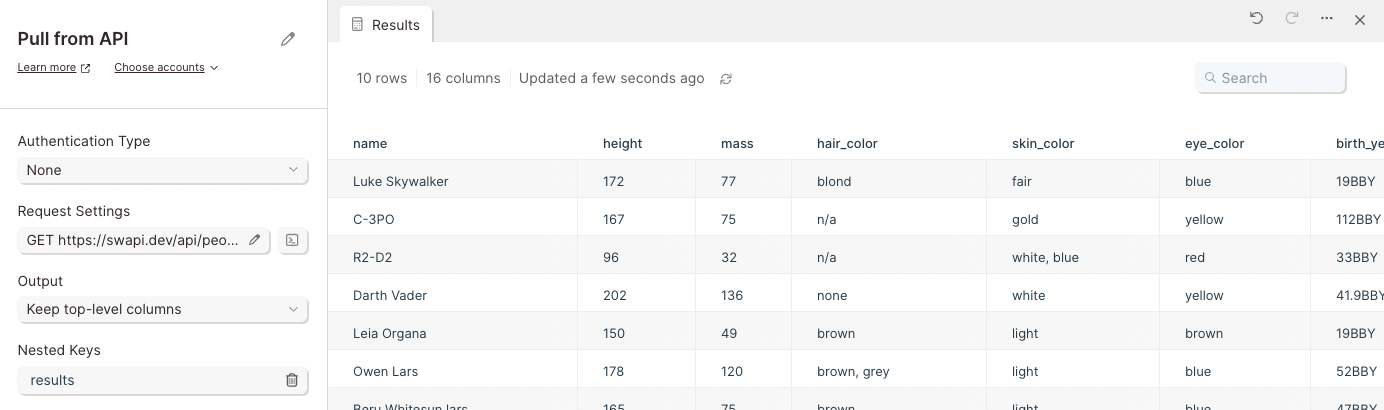

Response JSON

APIs typically to structure data as a nested objects. This means data can exist inside data. To extract that data into separate columns and rows, use the Output section to select a top-level column.

For example, a character can exist as a data object. Inside the result object, additional data is included such as their name, date of birth, and location.

This API shows a data column linked result. To expand all of the data in the results object into neatly displayed columns, select results as the top-level column in the Output section.

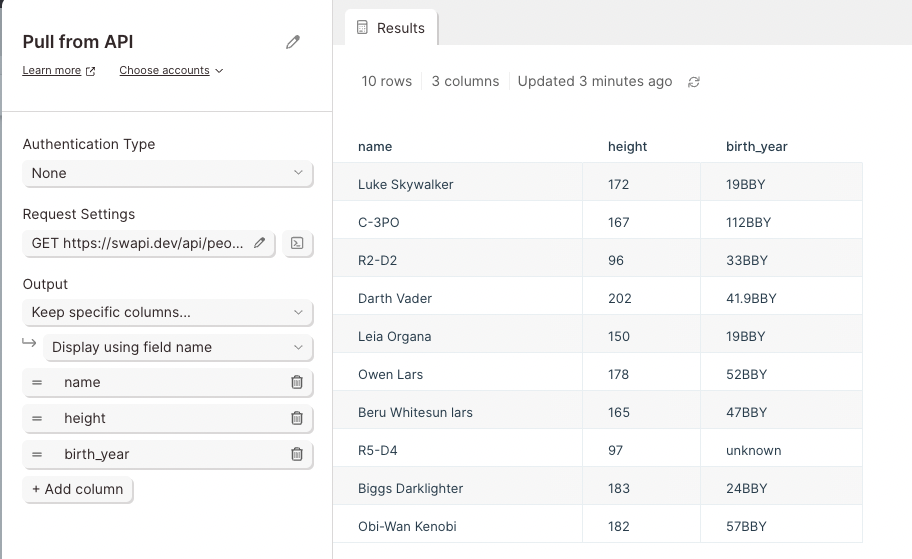

If you only want to expand some of the columns, choose to keep specific columns and select the columns that you want to expand from the dropdown list.

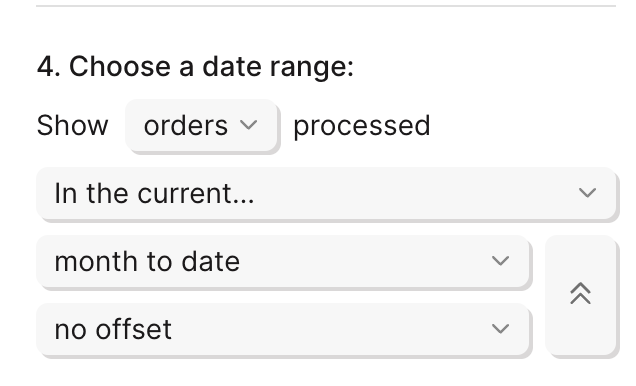

Pagination

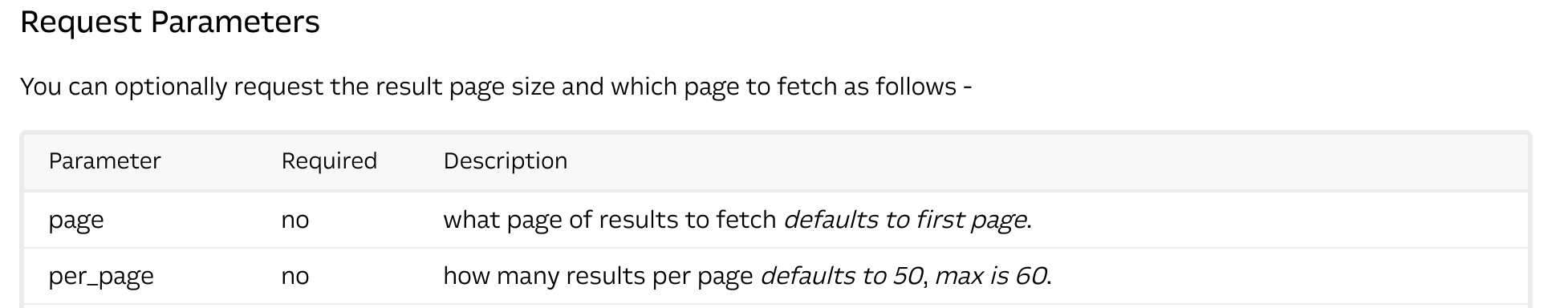

APIs return data in pages. This might not be noticeable for small requests, but larger request will not show all results. APIs return 1 page of results. To view the other pages, pagination settings must configured

Each API has different Pagination settings which can be searched in their documentation. The three main types of pagination are Page, Offset and Limit, and Cursor based pagination.

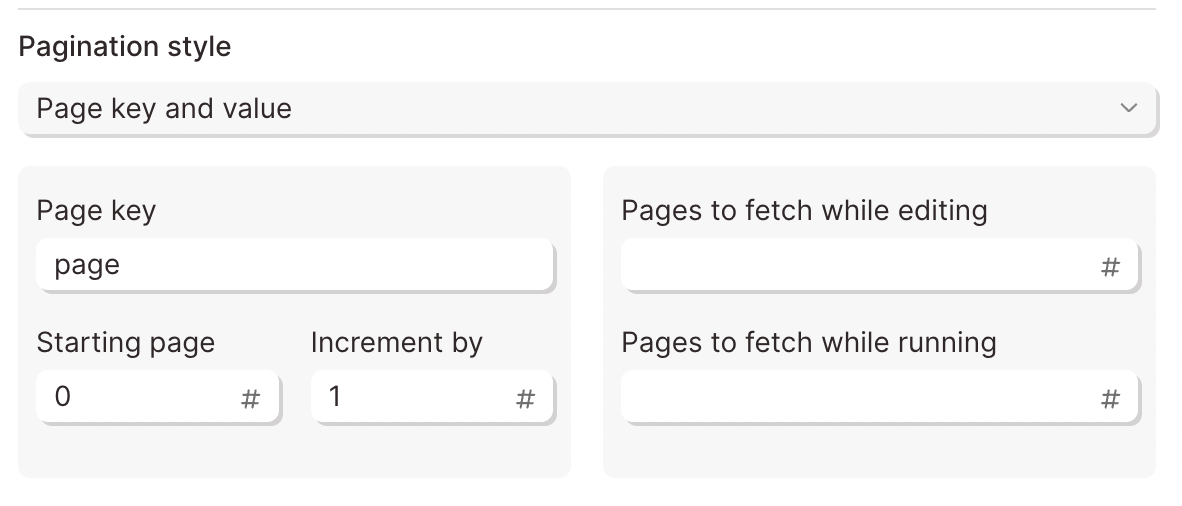

Page Based Pagination

APIs that use Page based pagination make it easy to request more pages. Documentation will refer to a specific parameter key for each request to return additional pages.

Intercom uses this style of pagination. Notice they reference the specific parameter key of page:

Parabola refers to this parameter as the Pagination Key. To request additional pages from Intercom's API, set the Pagination Key to page.

The Starting page is the first page to be requested. Most often, that value will be set to 0. For most pagination settings, 0 is the first page. The Increment by value is the number of pages to advance to. A value of 1 will fetch the next page. A value of 10 will fetch every tenth page.

Offset and Limit Based Pagination

APIs that use Offset and Limit based pagination require each request to limit the amount of items per page. Once that limit is reached, an offset is used to cycle through those pages.

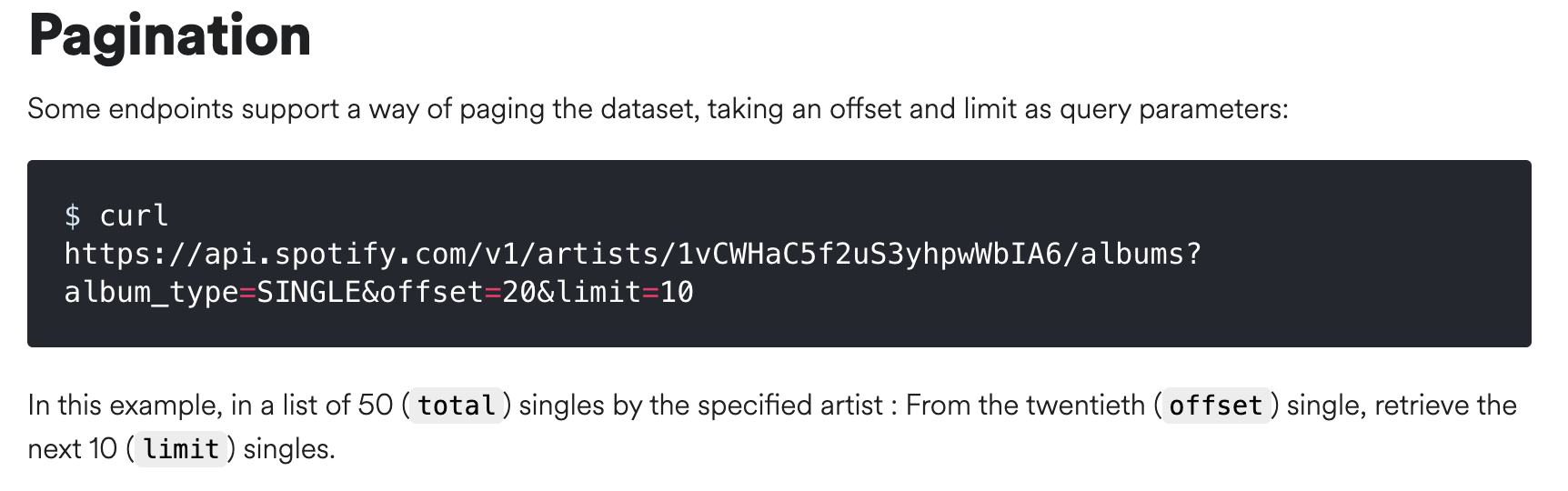

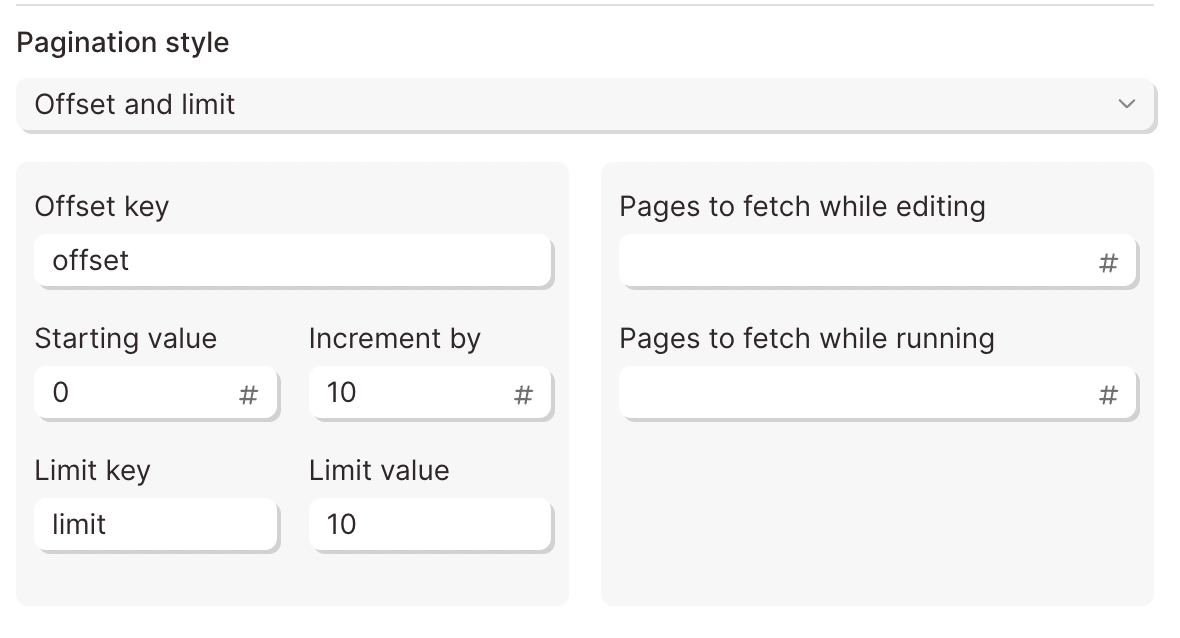

Spotify refers to this type of pagination in their documentation:

To configure these pagination settings in Parabola, set the Pagination style to offset and limit.

The Starting Value is set to 0 to request the first page. The Increment by value is set to 10. The request will first return page 0 and skip to page 10 .

The Limit Key is set to limit to tell the API to limit the amount of items. The Limit Value is set to 10 to define the number of items to return.

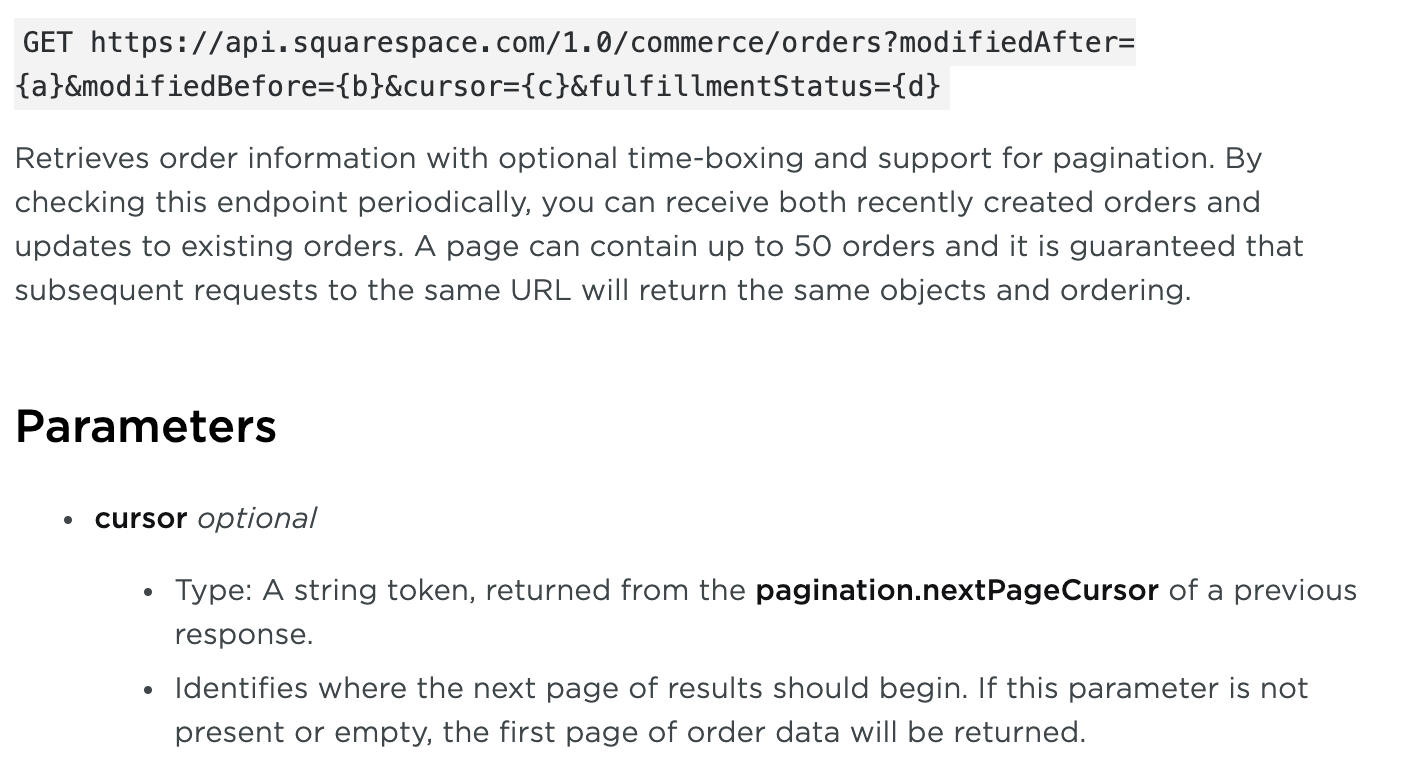

Cursor Based Pagination

Otherwise known as the bookmark of APIs, Cursor based pagination will mark a specific item with a cursor. To return additional pages, the API looks for a specific Cursor Key linked to a unique value or URL.

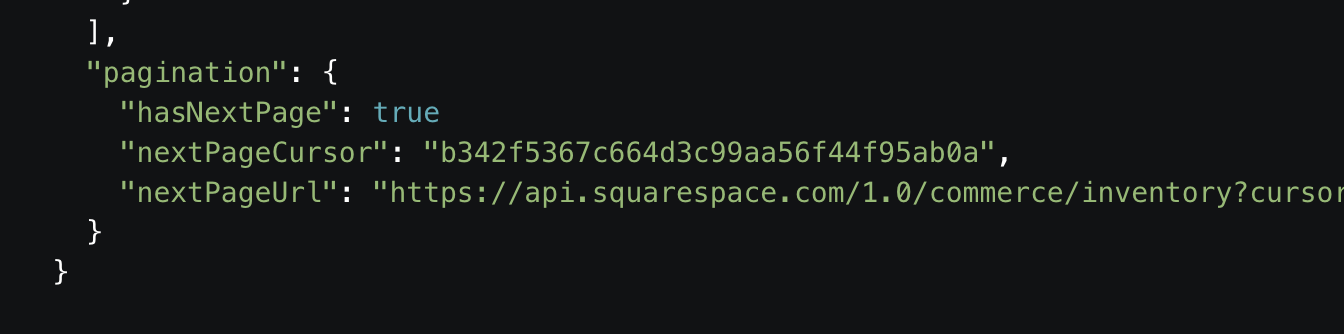

Squarespace uses cursor based pagination. Their documentation states that two Cursor Keys can be used. The first one is called nextPageCursor and has a unique value:

"nextPageCursor": "b342f5367c664d3c99aa56f44f95ab0a"

The second one is called nextPageUrl and has a URL value:

"nextPageUrl": "<https://api.squarespace.com/1.0/commerce/inventory?cursor=b342f5367c664d3c99aa56f44f95ab0a>"

To configure cursor based pagination using Squarespace, use these values in Parabola:

Replace the Cursor path in response with pagination.nextPageURL to use the URL as a value. The API should return the same results.

Rate Limiting

Imagine someone asking thousands of questions all at once. Before the first question can be answered thousands of new questions are coming in. That can become overwhelming.

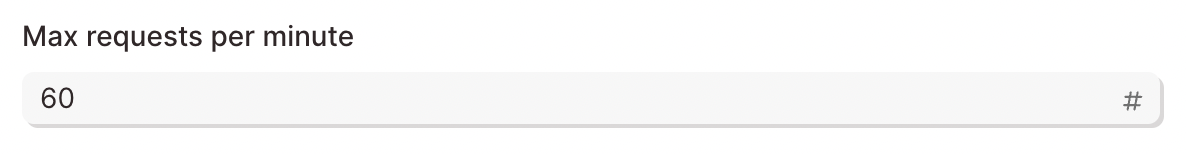

Servers are no different. Making paginated API calls requires a separate request for each page. To avoid this, APIs have rate limiting rules to protect their servers from being overwhelmed with requests. Parabola can adjust the Max Requests per Minute to avoid rate limiting.

By default, this value is set to 60 requests per minute. That's 1 request per second. The Max Requests per Minute does not set how many requests are made per minute. Instead, it ensures that Parabola will not ask too many questions.

Lowering the requests will avoid rate limiting but will calculate data much slower. Parabola will stop calculating a flow after 60 minutes.

Max Pages to Fetch

To limit the amount of pages to fetch use this field to set the value. Lower values will return data much faster. Higher values will take longer return data.

The default value in Parabola is 5 pages. Just note, this value needs be larger than the expected number of pages to be returned. This prevents any data from being omitted.

If you are pulling a large amount of data and want to limit how much is being pulled in while building, you can set the step to pull a lower number of pages while editing the Flow than while running the Flow.

Note, there is a 1000 page limit when building vs. running flows.

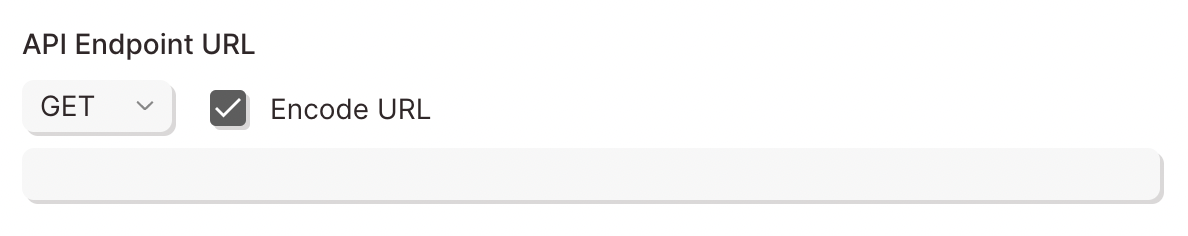

Encode URLs

URLs tend to break when there are special characters like spaces, accented characters, or even other URLs. Most often, this occurs when using {text merge} values to dynamically insert data into a URL.

Check the "Encode URLs" box to prevent the URL from breaking if special characters need to be passed.

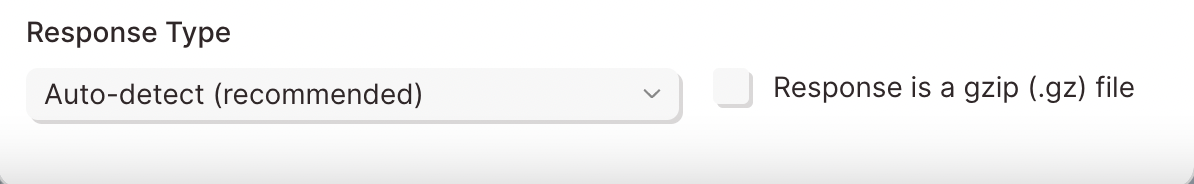

Response type

By default, this step will parse the data sent back to Parabola from the API in the format indicated by the content-type header received. Sometimes, APIs will send a content-type that Parabola does not know how to parse. In these cases, adjust this setting from auto-detect to a different setting, to force the step to parse the data in a specific way.

Use the gzip option when the data is returned in a gzip format, but can be unzipped into csv, xml, or JSON data. If you enable gzip parsing, you must also specify a response type option.

Tips and troubleshooting

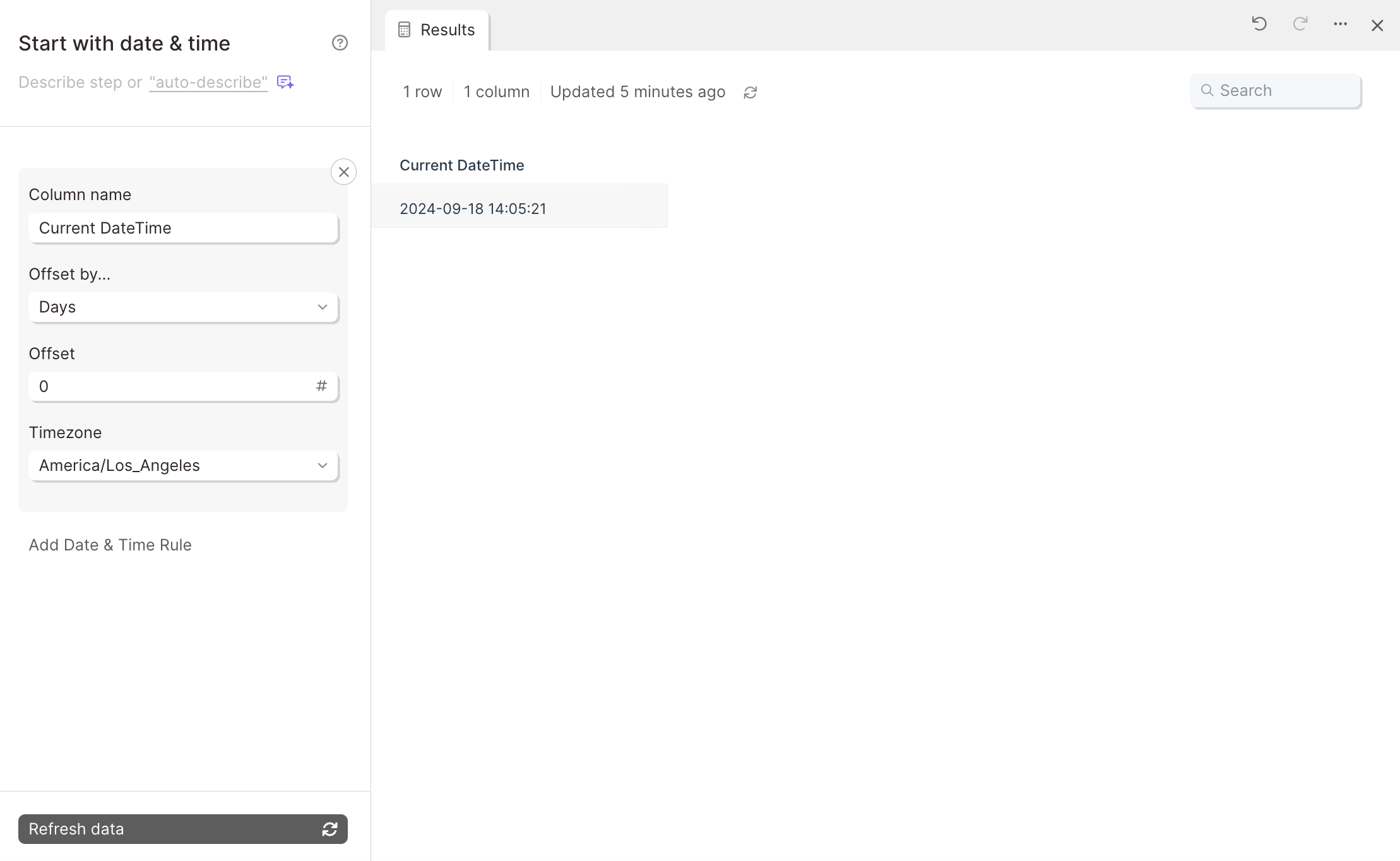

- Please note that the Pull from API step cannot extract dynamic ranges, such as date. We suggest taking existing data—even just a Start with date & time step—and using an Enrich with API step to create a Flow whose parameters update on each Flow run.

- Parabola will never limit API calls according to a user’s plan—rate limiting is at the discretion of the user, and may be restricted natively by the API.

- We recommend using an API key that is unique to Parabola. This is not strictly necessary, but it helps with troubleshooting and debugging!

Something not right? Check out this guide to learn more troubleshooting common API errors.

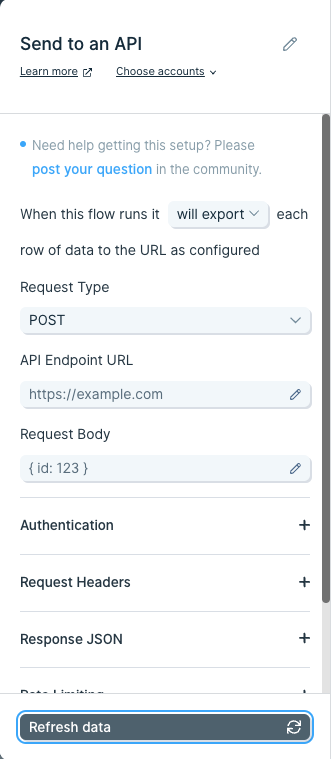

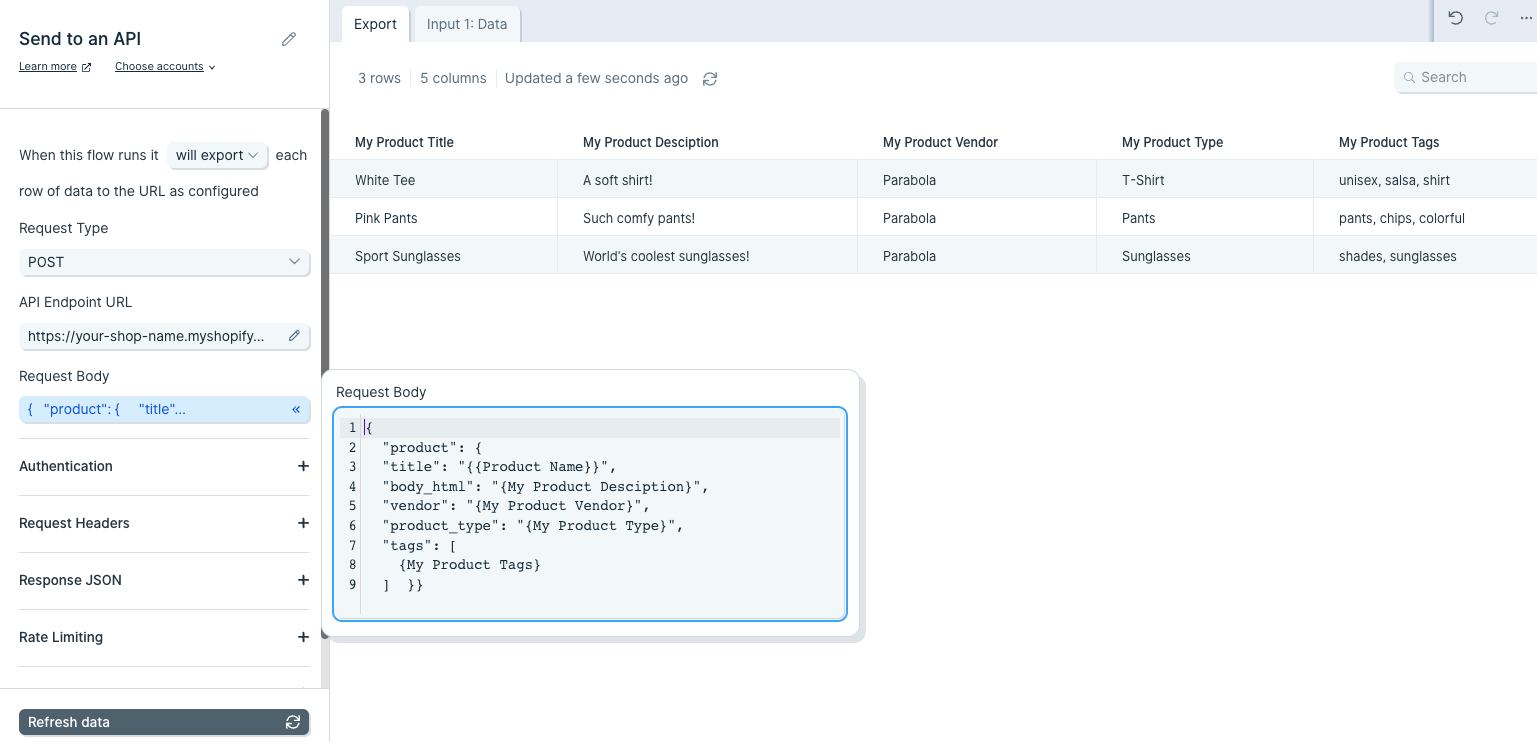

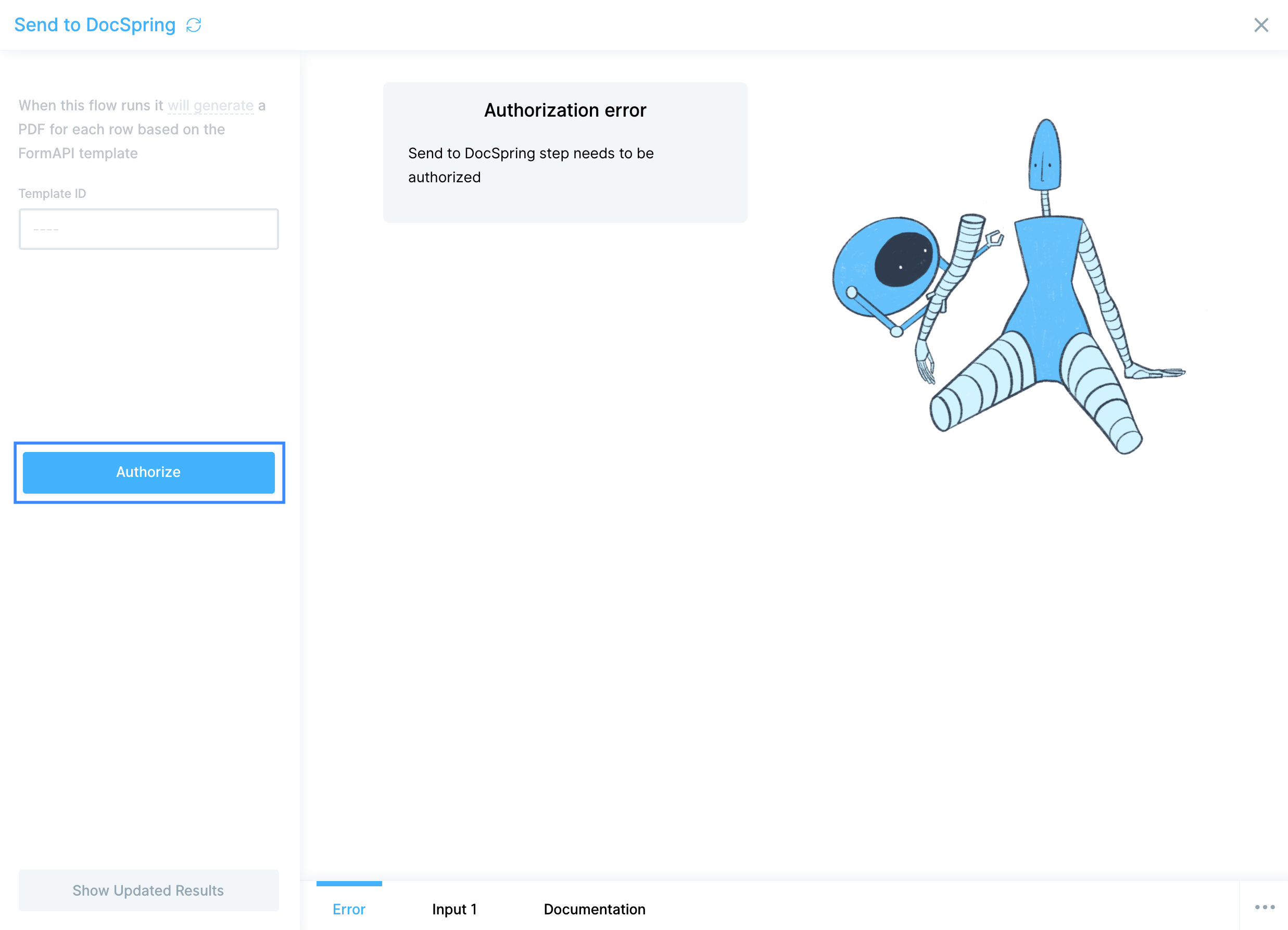

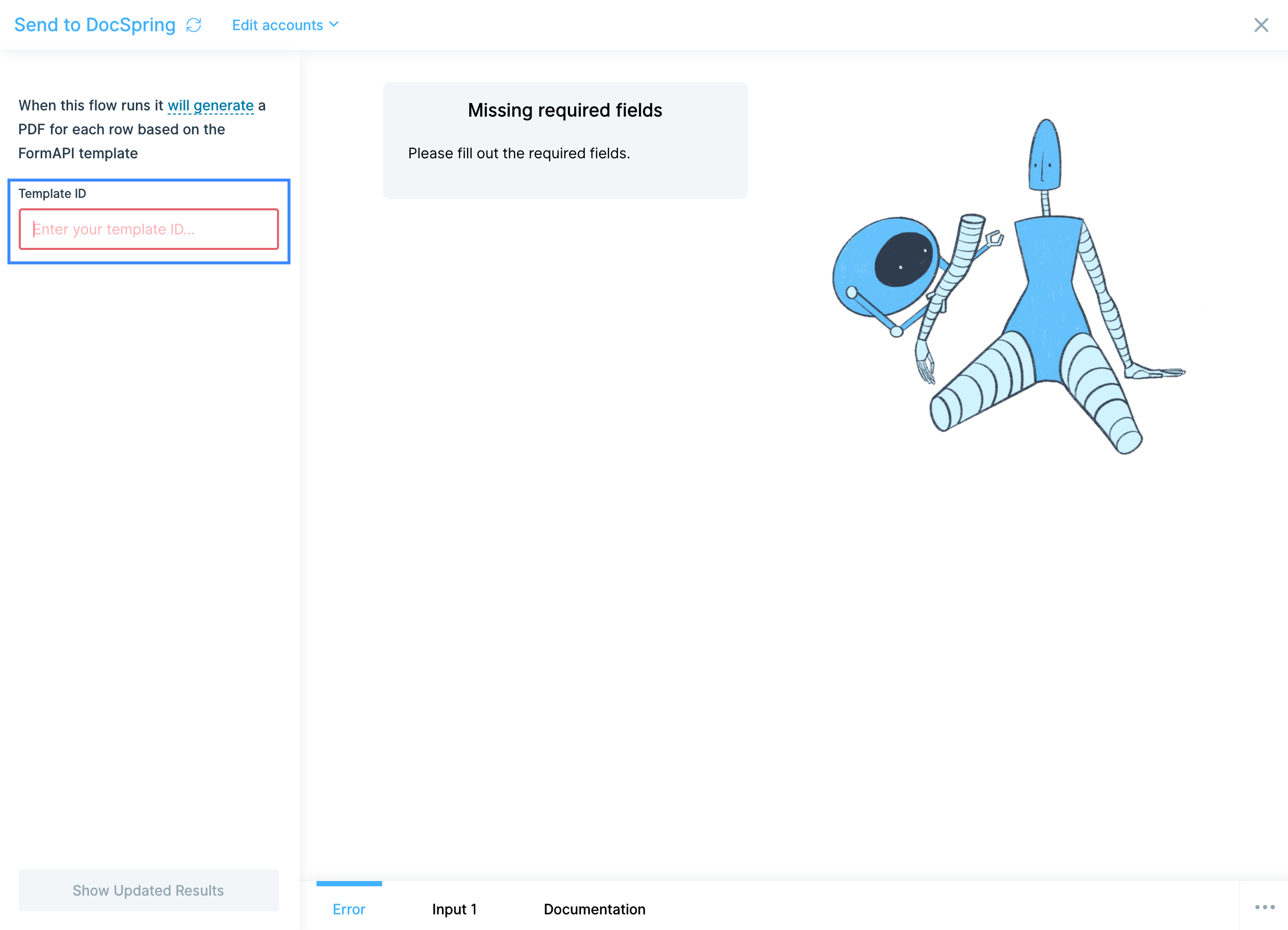

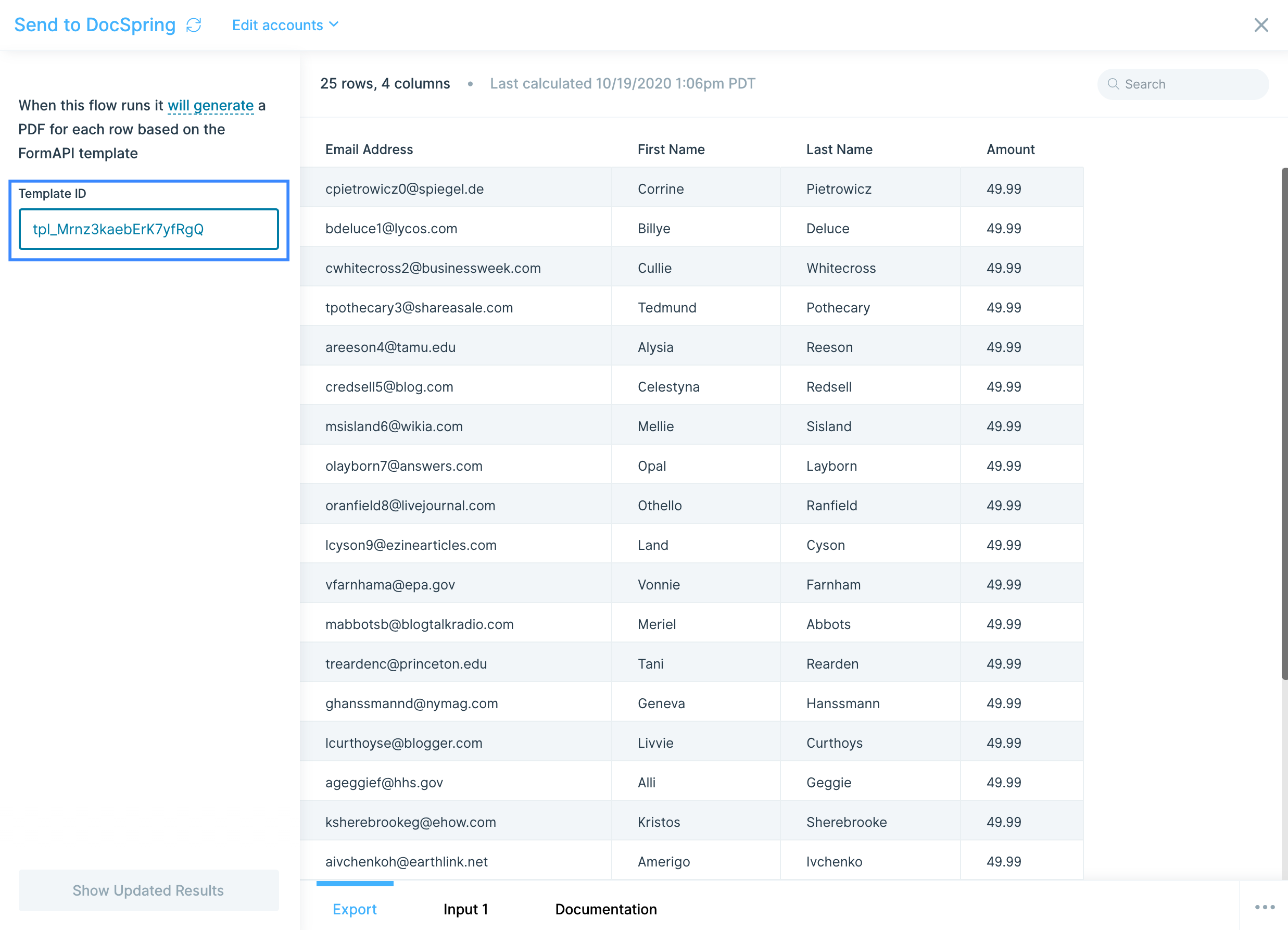

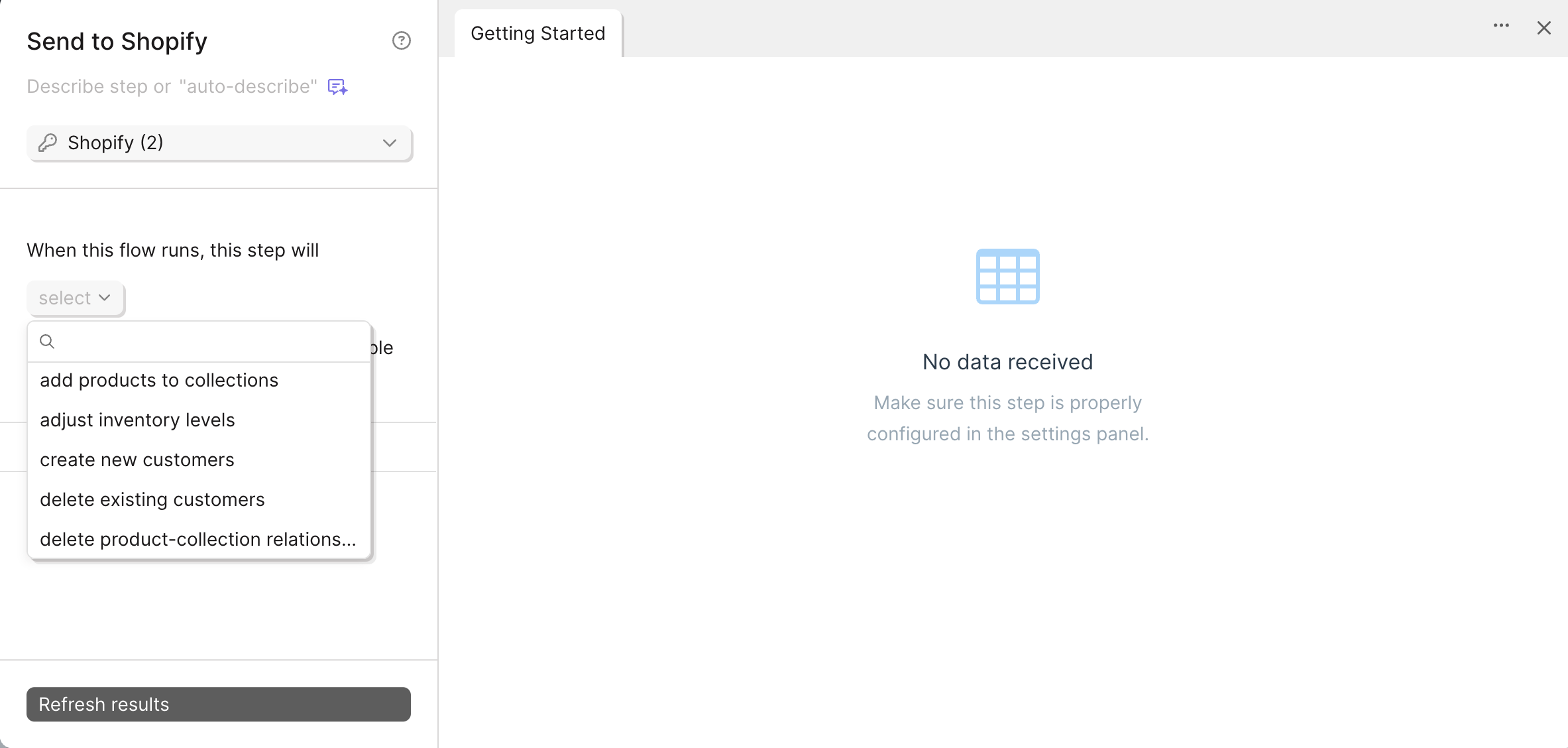

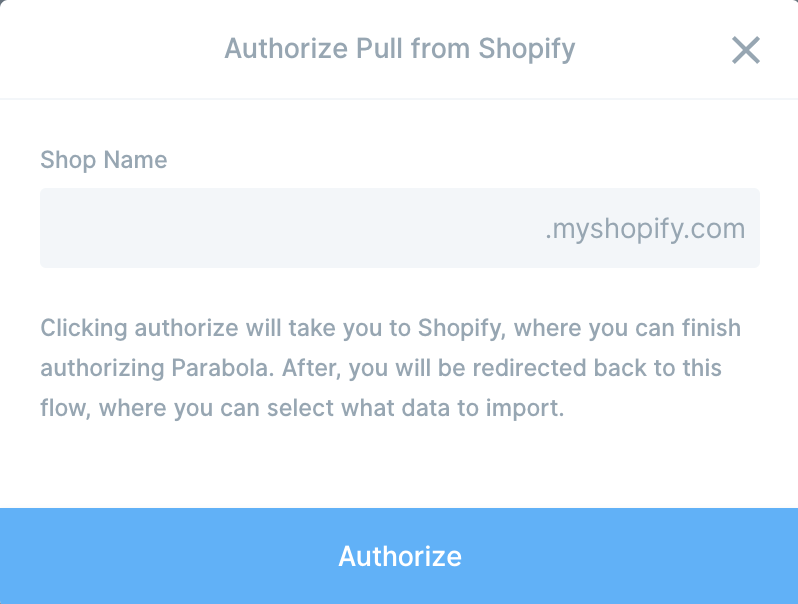

The Send to an API step sends a request to an API to export specific data. Data must be sent through the API using JSON formatted in the body of the request. This step can send data only when a flow is published.

Input

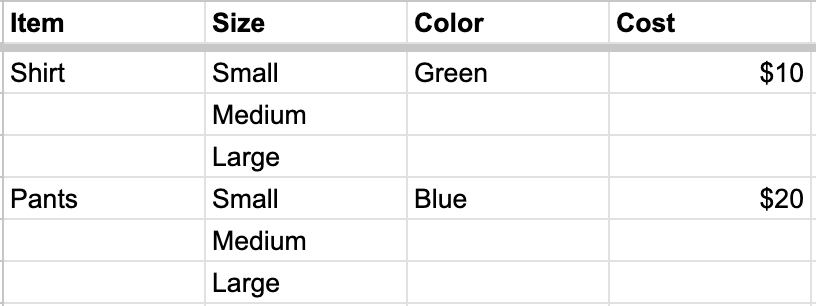

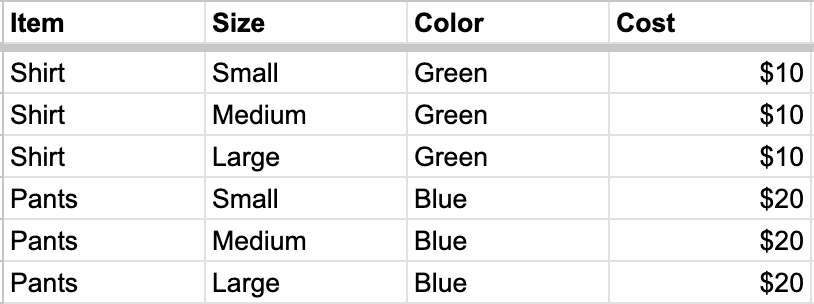

This table shows the product information for new products to be added to a store. It shows common columns like "My Product Title", "My Product Description", "My Product Vendor", "My Product Tags".

These values can be used to create products in bulk via the Send to an API step.

Basic Settings

To use the Send to an API step, a Request Type, API Endpoint URL, and Authentication are required. Some APIs require Custom Headers while other APIs nest their data into a single cell that requires a Top Level Key to format into rows and columns.

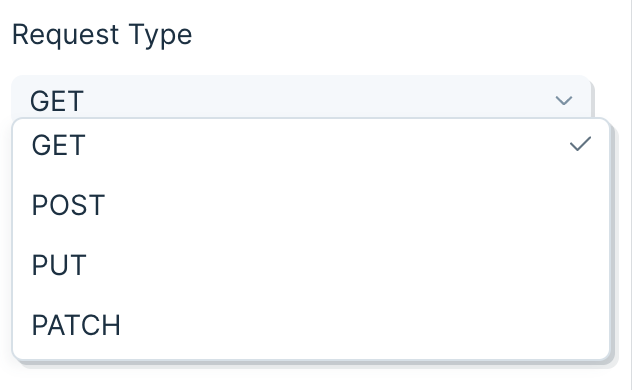

Request Type

There are four ways to send data with an API using POST, PUT, PATCH, and DELETE requests. These methods are also known as verbs.

The POST verb is used to create new data. The DELETE verb is used to delete data. The PUT verb is used to update exiting data, and the PATCH verb is used to modify a specific portion of the data.

Hey API, can you POST new data to the server? The new data is in the JSON body.

API Endpoint URL

The API Endpoint URL is the specific location where data will be sent. Each API Endpoint URL belongs to a specific resource. A resource is the broader category to be targeted when sending data.

To create a new product in Shopify, use their Products resource. Their documentation specifies making a POST request to that resource using this Endpoint URL:

Your Shopify store domain will need to prepended to each Endpoint URL:

https://your-shop-name.myshopify.com/admin/api/2020-10/products.json

The request information is sent to the API in the JSON body of the request. The JSON body is a block that outlines the data that will be added.

Body

The body of each request is where data that will be sent through the API is added. The body must be in raw JSON format using key:value pairs. The JSON below shows common attributes of a Shopify product.

{

"product": {

"title": "Baseball Hat",

"body_html": "<strong>Awesome hat!</strong>",

"vendor": "Parabola Cantina",

"product_type": "Hat",

"tags": [

"Unisex",

"Salsa",

"Hat"

]

}

}

Notice the title, body_html, vendor, product_type, and tags can be generated when sending this data to an API.

Since each product exists per row, {text merge} values can be used to dynamically pass the data in the JSON body.

This will create 3 products: White Tee, Pink Pants, and Sport Sunglasses with their respective product attributes.

Authentication

Most APIs require authentication to access their data. This is likely the first part of their documentation. Try searching for the word Authentication in their documentation. Below are the authentication types supported on Parabola:

The most common types of authentication are Bearer Tokens, Username/Password (also referred to as Basic), and OAuth 2.0. Parabola has integrated these authentication types directly into this step.

Bearer Token

This method requires you to send your API Key or API Token as a bearer token. Take a look at this example below:

The part that indicates it is a bearer token is this:

-H "Authorization: Bearer sk_test_WiyegCaE6iGr8eSucOHitqFF"

To add this specific token in Parabola, select Bearer Token from the Authorization menu and add sk_test_WiyegCaE6iGr8eSucOHitqFF as the value.

Username/Password (Basic)

This method is also referred to as Basic Authorization or simply Basic. Most often, the username and password used to sign into the service can be entered here.

However, some APIs require an API key to be used as a username, password, or both. If that's the case, Insert the API key into the respective field noted in the documentation.

The example below demonstrates how to connect to Stripe's API using the Basic Authorization method.

The Endpoint URL shows a DELETE request being made to a resource called customers. The authorization type can be identified as Basic for two reasons:

- The -u indicates Basic Authorizationusername.

- Most APIs reference the username and password formatted as username:password. There is a colon : . This indicates that only a username is required for authentication.

To delete this customer using Parabola, fill in the fields below:

OAuth2.0

This method is an authorization protocol that allows users to sign into a platform using a third-party account. OAuth2.0 allows a user to selectively grant access for various applications they may want to use.

Authenticating via OAuth2.0 does require more time to configure. For more details on how to authorize using this method, read our guide Using OAuth2.0 in Parabola.

Expiring Access Token

Some APIs will require users to generate access tokens that have short expirations. Generally, any token that expires in less than 1 day is considered to be "short-lived" and may be using this type of authentication. This type of authentication in Parabola serves a grouping of related authentication styles that generally follow the same pattern.

One very specific type of authentication that is served by this option in Parabola is called OAuth2.0 Client Credentials. This differs from our standard OAuth2.0 support, which is built specifically for OAuth2.0 Authorization Code. Both Client Credentials and Authorization Code are part of the OAuth2.0 spec, but represent different Grant Types.

Authenticating with the Expiring Access Token option is more complex than options like Bearer Token, but less complex than OAuth2.0. For more details on how to use this option, read our guide Using Expiring Access Tokens in Parabola.

Custom Headers

A header is a piece of additional information to be sent with the request to an API. If an API requires additional headers, it is commonly noted in their documentation as -H.

Remember the authentication methods above? Some APIs list the authentication type to be sent as a header. Since Parabola has specific fields for authentication, those headers can typically be ignored.

Taking a look at Webflow's API, they show two headers are required.

The first -H header is linked to a key called Authorization. Parabola takes care of that. It does not need to be added as a header. The second -H header is linked to a key called accept-version. The value of the header is 1.0.0. This likely indicates which version of Webflow's API will be used.

Advanced Settings

Encode URLs

URLs tend to break when there are special characters like spaces, accented characters, or even other URLs. Most often, this occurs when using {text merge} values to dynamically insert data into a URL.

Check the "Encode URLs" box to prevent the URL from breaking if special characters need to be passed.

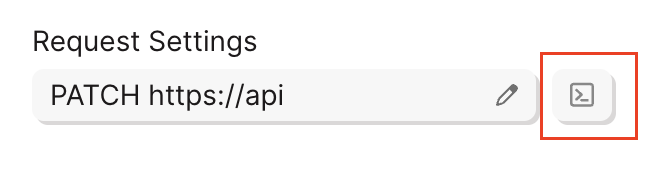

See sent request

If you woud like to see the request that was sent to the API during the Flow run, you can dothis from the API step. To do this, click the square button next to the Request Settings section in the step to see more detailed information.

Reading API Errors

Check out this guide to learn more troubleshooting common API errors.

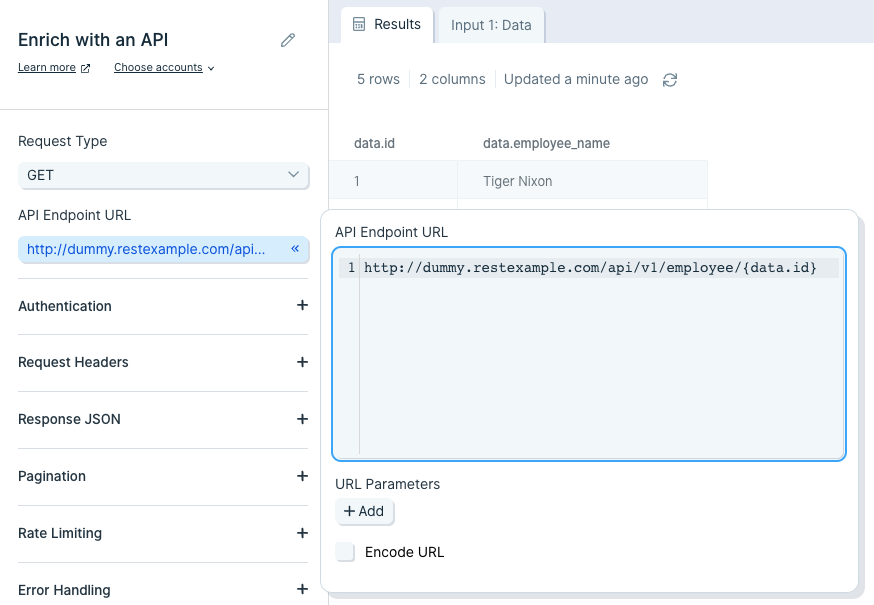

Use the Enrich with API step to make API requests using a list of data, enriching each row with data from an external API endpoint.

Input/output

Our input data has two columns: "data.id" and "data.employee_name".

Our output data, after using this step, has three new columns appended to it: "api.status", "api.data.id", and "api.data.employee_name". This data was appended to each row that made the call to the API.

Custom settings

First, decide if your data needs a GET or POST operation, or the less common PUT or PATCH, and select it in the Type dropdown. A GET operation is the most common way to request data from an API. A POST is another way to request data, though it is more commonly used to make changes, like adding a new user to a table. PUT and PATCH make updates to data, and sometimes return a new value that can be useful.

Insert your API endpoint URL in the text field.

Sending a body in your API request

- A GET cannot send a body in its request. A POST can send a Body in its request. In Parabola, the Body of the request will always be sent in JSON.

- Simple JSON looks like this:

{ "key1":"value1", "key2":"value2", "key3":"value3" }

Using merge tags

- Merge tags can be added to the API Endpoint URL or the Body of a request. For example, if you have a column named "data.id", you could use it in the API Endpoint URL by including {data.id} in it. Your URL would look like this:

http://third-party-api-goes-here.com/users/{data.id}

- Similarly, you can add merge tags to the body.

{

"key1": "{data.id}",

"key2": "{data.employee_name}",

"key3": "{Type}"

}

- For this GET example, your API endpoint URL will require an ID or some sort of unique identifier required by the API to match your data request with the data available. Append that ID column to your API endpoint URL. In this case, we use {data.id}.

- Important Note: If the column referenced in the API endpoint URL is named "api", the enrichment step will remove the column after the calculation. Use the Edit Columns step to change the column name to anything besides "api", such as "api.id".

Authentication

Most APIs require authentication to access their data. This is likely the first part of their documentation. Try searching for the word "authentication" in their documentation.

Here are the authentication types available in Parabola:

The most common types of authentication are 'Bearer Token', 'Username/Password' (also referred to as Basic), and 'OAuth2.0'. Parabola has integrated these authentication types directly into this step.

Bearer Token

This method requires you to send your API key or API token as a bearer token. Take a look at this example below:

The part that indicates it is a bearer token is this:

-H "Authorization: Bearer sk_test_WiyegCaE6iGr8eSucOHitqFF"

To add this specific token in Parabola, select 'Bearer Token' from the 'Authorization' menu and add "sk_test_WiyegCaE6iGr8eSucOHitqFF" as the value.

Username and Password (Basic)

This method is also referred to as "basic authorization" or simply "basic". Most often, the username and password used to sign into the service can be entered here.

However, some APIs require an API key to be used as a username, password, or both. If that's the case, insert the API key into the respective field noted in the documentation.

The example below demonstrates how to connect to Stripe's API using the basic authorization method.

The endpoint URL shows a request being made to a resource called customers. The authorization type can be identified as basic for two reasons:

- The -u indicates a username.

- Most APIs reference the username and password formatted as username:password. Here, there is a colon with no string following, indicating that only a username is required for authentication.

To authorize this API in Parabola, fill in the fields below:

OAuth2.0

This method is an authorization protocol that allows users to sign into a platform using a third-party account. OAuth2.0 allows a user to selectively grant access for various applications they may want to use.

Authenticating via OAuth2.0 does require more time to configure. For more details on how to authorize using this method, read our guide Using OAuth2.0 in Parabola.

Expiring Access Token

Some APIs will require users to generate access tokens that have short expirations. Generally, any token that expires in less than 1 day is considered to be "short-lived" and may be using this type of authentication. This type of authentication in Parabola serves a grouping of related authentication styles that generally follow the same pattern.

One very specific type of authentication that is served by this option in Parabola is called "OAuth2.0 Client Credentials". This differs from our standard OAuth2.0 support, which is built specifically for "OAuth2.0 Authorization Code". Both methods are part of the OAuth2.0 spec, but represent different grant types.

Authenticating with an expiring access token is more complex than using a bearer token, but less complex than OAuth2.0. For more details on how to use this option, read our guide Using Expiring Access Tokens in Parabola.

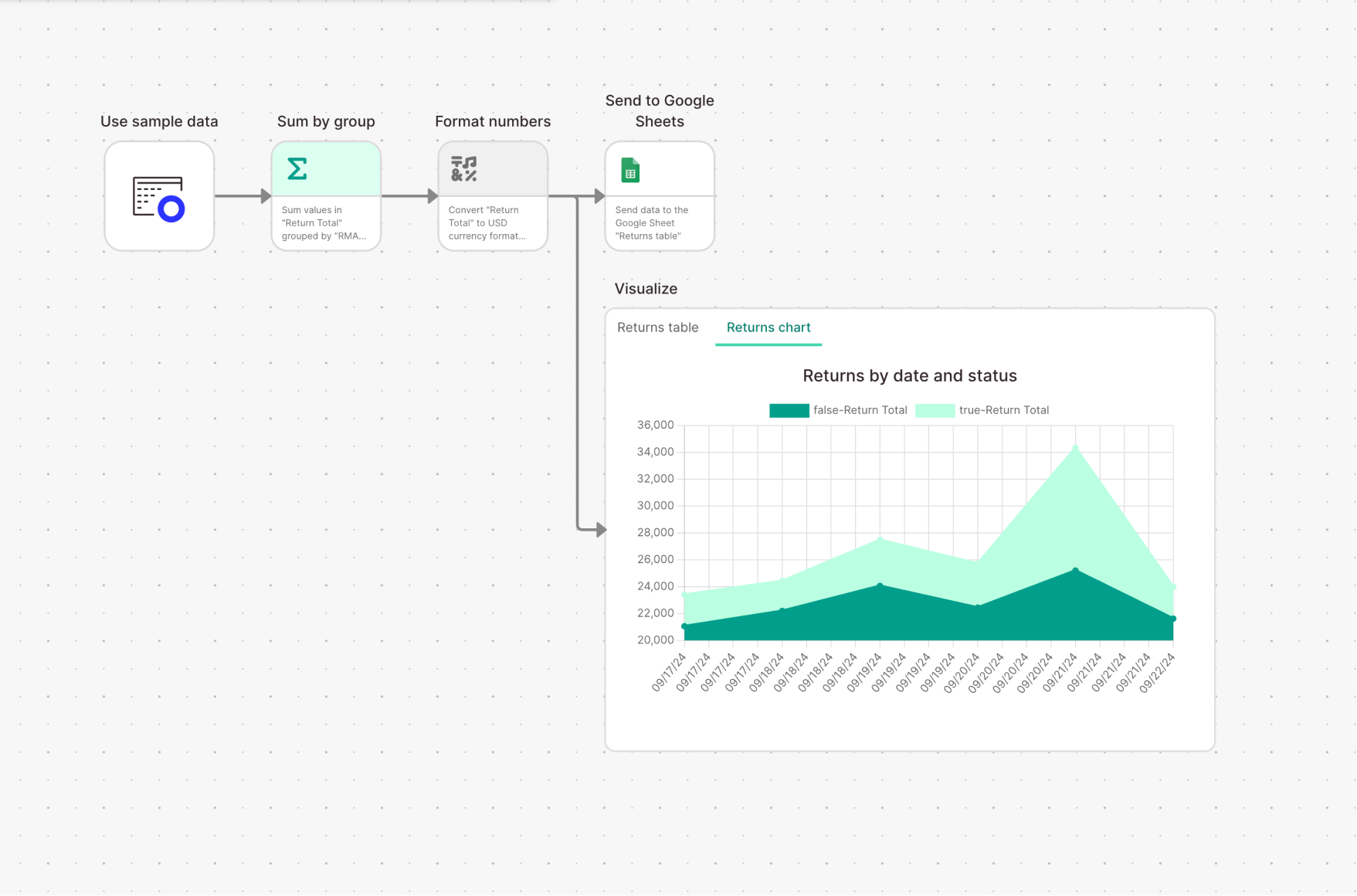

How to work with errors when you expect them in your API calls

Enabling Error Handling

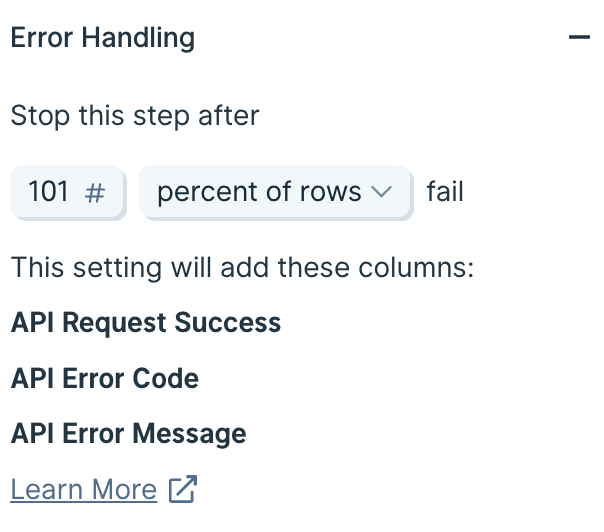

In the Enrich with an API step and the Send to an API step, enable Error Handling to allow your API steps to pass through data even if one or more API requests fail. Modifying this setting will add new error handling columns to your dataset reporting on the status of those API calls

By default, this section will show that the step will stop running when 1 row fails. This has always been the standard behavior of our API steps. Remember, each row of data is a separate API call. With this default setting enabled, you will never see any error handling columns.

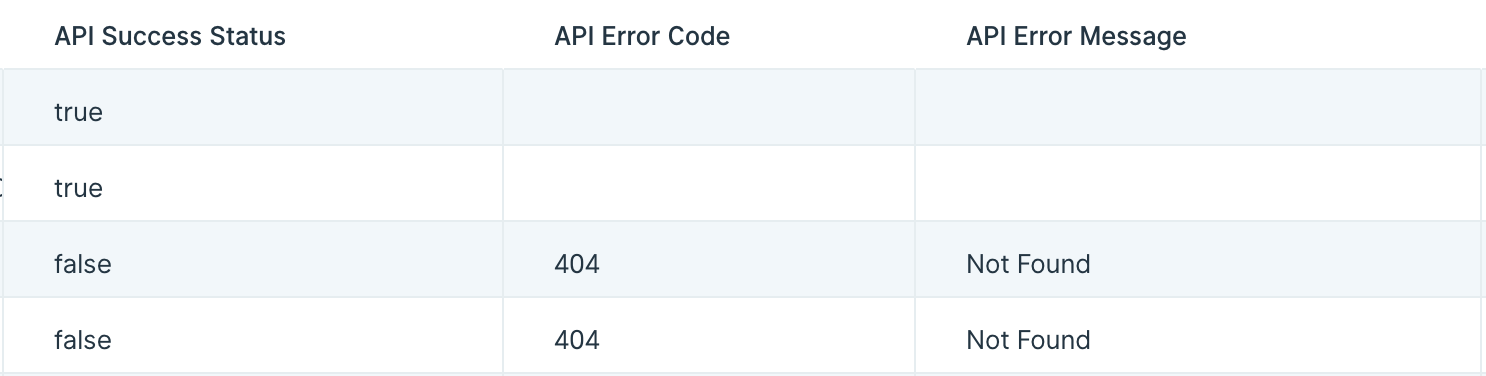

Update that setting, and you will see that new columns are set to be added to your data. These new columns are:

- API Success Status

- API Error Code

- API Error Message

API Success Status will print out a true or false value to show if that row's API call succeeded or failed.

API Error Code will have an error code for that row if the API call failed, and will be blank if the API call succeeded.

API Error Message will display the error message associated with any API call that failed, if the API did in fact send us back a message.

With the exception of the default settings, these columns will still be included even if every row succeeded. In that case, you will see the API Success Status column with all true values, and the other two columns as all blank values.

Using the error handling settings

It is smart to set a threshold where the step will still fail if enough rows have failed. Usually, if enough rows fail to make successful API calls, there may be a problem with your step settings, the data you are merging into those calls, or the API itself. In these cases, it is a good idea to ensure that the step can fully stop without needing to run through every row.

Choose to stop running this step if either a static number of rows fail, or if a percentage of rows fail.

You must choose a number greater than 0.

When using a percentage, Parabola will always round up to the next row if the percentage of the current set of rows results in a partial row.

Prevent the step from ever stopping

In rare cases, you may want to ensure that your step never stops running, even if every row results in a failed API call. In that case, set your error handling threshold to any number greater than 100%, such as 101% or 200%.

What to do with these new error handling columns

Once you have enabled this setting, use these new columns to create a branch to deal with errors. The most common use case will be to use a Filter Rows step to filter down to just the rows that have failed, and then send those to a Google Sheet for someone to check on and make adjustments accordingly.

Error handling in the Live flow Run logs

If you have a flow that is utilizing these error handling columns, the run logs on the live view of the flow will not indicate if any rows were recorded as failed. The run logs will only show a failure if the step was forced to stop by exceeding the threshold of acceptable errors. It is highly advisable that you set up your flow to create a CSV or a Google Sheet of these errors so that you have a record of them from each run.

Integration:

AfterShip

How to connect your AfterShip account

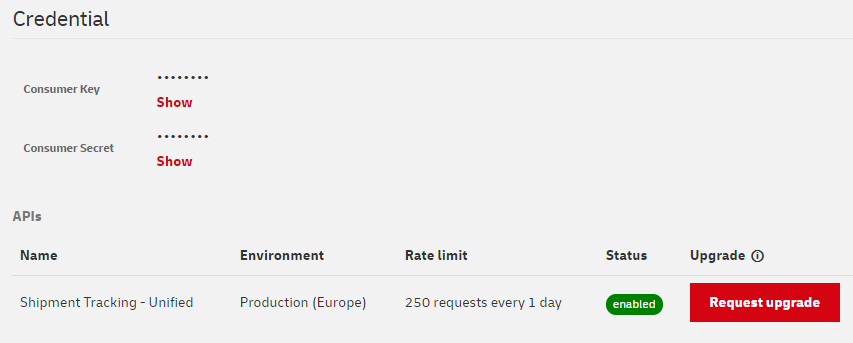

AfterShip uses API Key authentication for secure access.

- Log in to your AfterShip account

- Navigate to Settings (located in the bottom-left corner of the dashboard)

- Click on API Keys in the settings menu

- Click Create API key and follow the instructions:

- Enter a descriptive name for your API key

- Select the appropriate API permissions for your use case (aftership:tracking:read for tracking)

- Click Save to generate your key

- Copy the API key immediately (it will only be displayed once) and store it securely

In Parabola:

- Add a Track with AfterShip step to your flow

- Click Authorize and paste your API key when prompted

- Once authenticated, select the AfterShip endpoint you want to access

💡 Tip: You can generate multiple API keys for different applications or team members. For security, consider rotating your API keys periodically and removing any unused keys.

What data can I pull using the AfterShip integration?

Right now, Parabola's supports AfterShip's Tracking API—allowing you to pull real-time tracking data about a shipment. Access data such as:

- Delivery status and tags: Shipment categorization by status (In Transit, Out for Delivery, Delivered, Exception, etc.) and custom tags for filtering and organization.

- Estimated delivery dates: AI-powered delivery date predictions based on carrier performance, route analysis, and historical data.

- Order information: Associated order numbers, customer details, shipping methods, and custom metadata linked to each tracking.

- Exception details: Delivery failure information, return-to-sender status, failed delivery attempts, and reason codes for exceptions.

- Trackings: Complete shipment tracking records including tracking numbers, carrier information, delivery status, origin and destination addresses, estimated delivery dates, transit times, and shipment tags.

- Checkpoints: Detailed scan history and location updates with timestamps, event descriptions, city/state/country information, and coordinates for each checkpoint along the delivery route.

- Courier information: Carrier details including courier names, slugs (identifiers), service types, required tracking fields, and courier-specific capabilities.

- Courier detection: Automatically identify the correct carrier based on tracking number format or additional shipment information.

- Last checkpoint: The most recent tracking update for a specific shipment, including status, location, and timestamp.

- Notifications: Customer notification settings and history, including email and SMS subscription details for shipment updates.

What use cases can I built with Parabola's AfterShip integration?

Brands turn to Parabola any time they're exporting CSVs, breaking into Excel, monitoring and reconciling systems, and doing work manually. Here are some common use cases from brands that leverage AfterShip:

- Centralized shipment tracking: Pull tracking data from AfterShip and combine it with order records from Shopify, NetSuite, or your WMS to create a single consolidated view of all outbound shipments across carriers and channels.

- Order-to-tracking reconciliation: Compare order data from your ERP or sales channels with AfterShip tracking feeds to identify missing tracking numbers, mismatched shipments, duplicates, or orders with no delivery updates.

- Delivery SLA and transit-time reporting: Monitor delivery speed, missed SLAs, and carrier transit-time performance by combining AfterShip timestamps with promised delivery dates from your ERP or OMS.

- Carrier performance scorecards: Analyze on-time rates, exception frequency, and regional performance by carrier using AfterShip status histories to generate internal performance dashboards and quarterly business review scorecards.

- Exception and delay monitoring: Create automated workflows that pull AfterShip exceptions—like failed delivery attempts, “Available for Pickup,” or “Exception”—and alert CX or ops teams for proactive customer outreach via Slack or email.

- Return shipment visibility: Track return parcels by pulling AfterShip reverse-logistics checkpoints and joining them with RMA or returns data in Shopify, ERP, or your returns platform to improve refund timing and accuracy.

Tips to find success

- Schedule your flow to run automatically: Set up hourly or daily refreshes to keep tracking data current and catch exceptions as they happen.

- Use Filters to identify at-risk shipments: Create rules to flag trackings that have been "In Transit" for longer than expected or haven't moved in several days.

- Combine with other systems: Join AfterShip tracking data with your order management system (Shopify, NetSuite, etc.) to create complete order-to-delivery reports and catch missing tracking numbers.

- Set up Alerts for exceptions: Configure automated Slack messages or email alerts when shipments move to "Exception" status or miss their estimated delivery dates.

- Normalize courier slugs early: AfterShip uses standardized courier identifiers (slugs) like "fedex" or "ups"—use these consistently when joining data from multiple sources.

- Archive historical data: Export completed shipments to Google Sheets or your data warehouse to analyze long-term trends in carrier performance and delivery times.

- Filter by destination region: Use checkpoint location data to analyze delivery performance by geography and identify regions with higher exception rates.

Integration:

Airtable

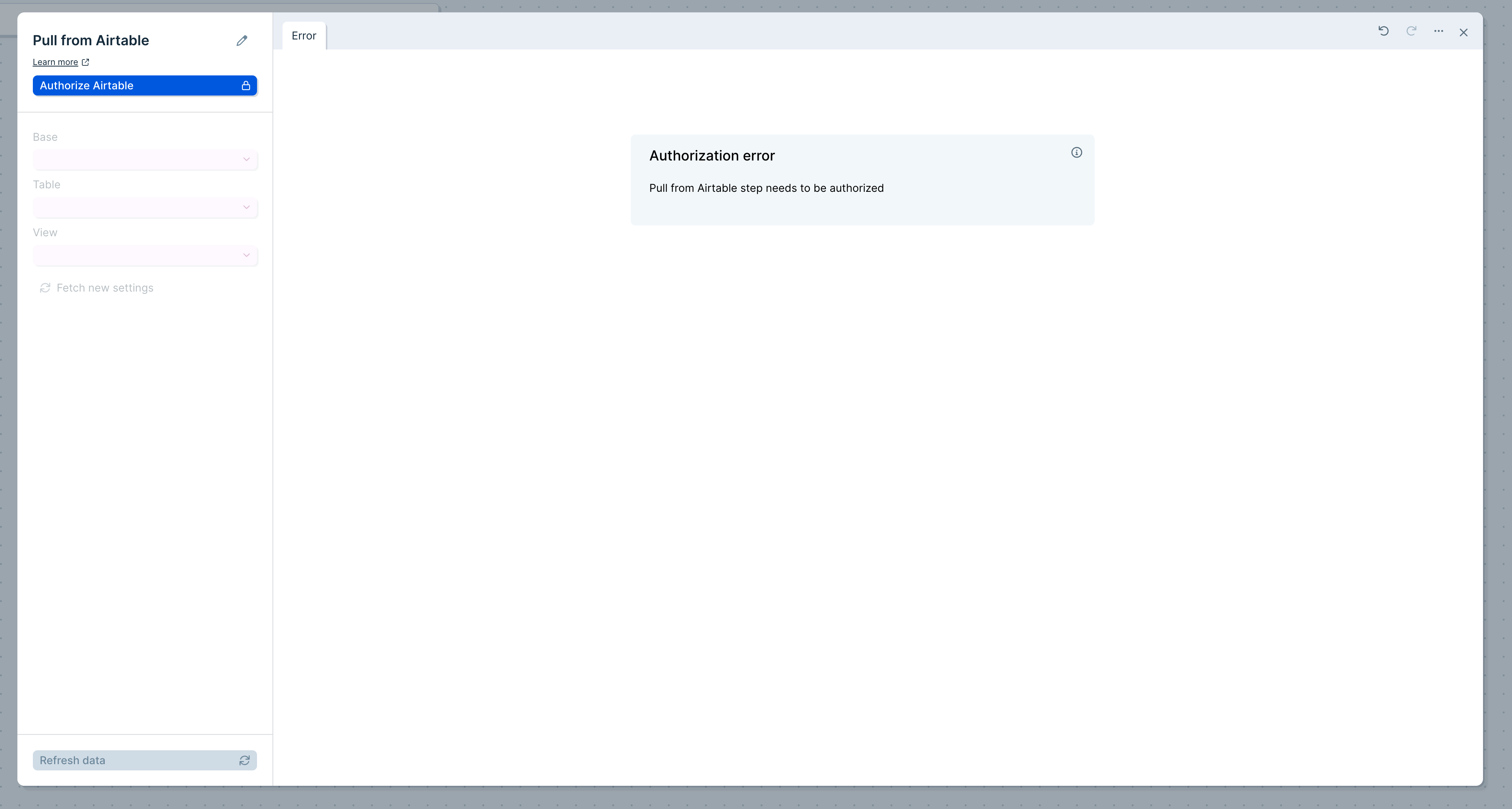

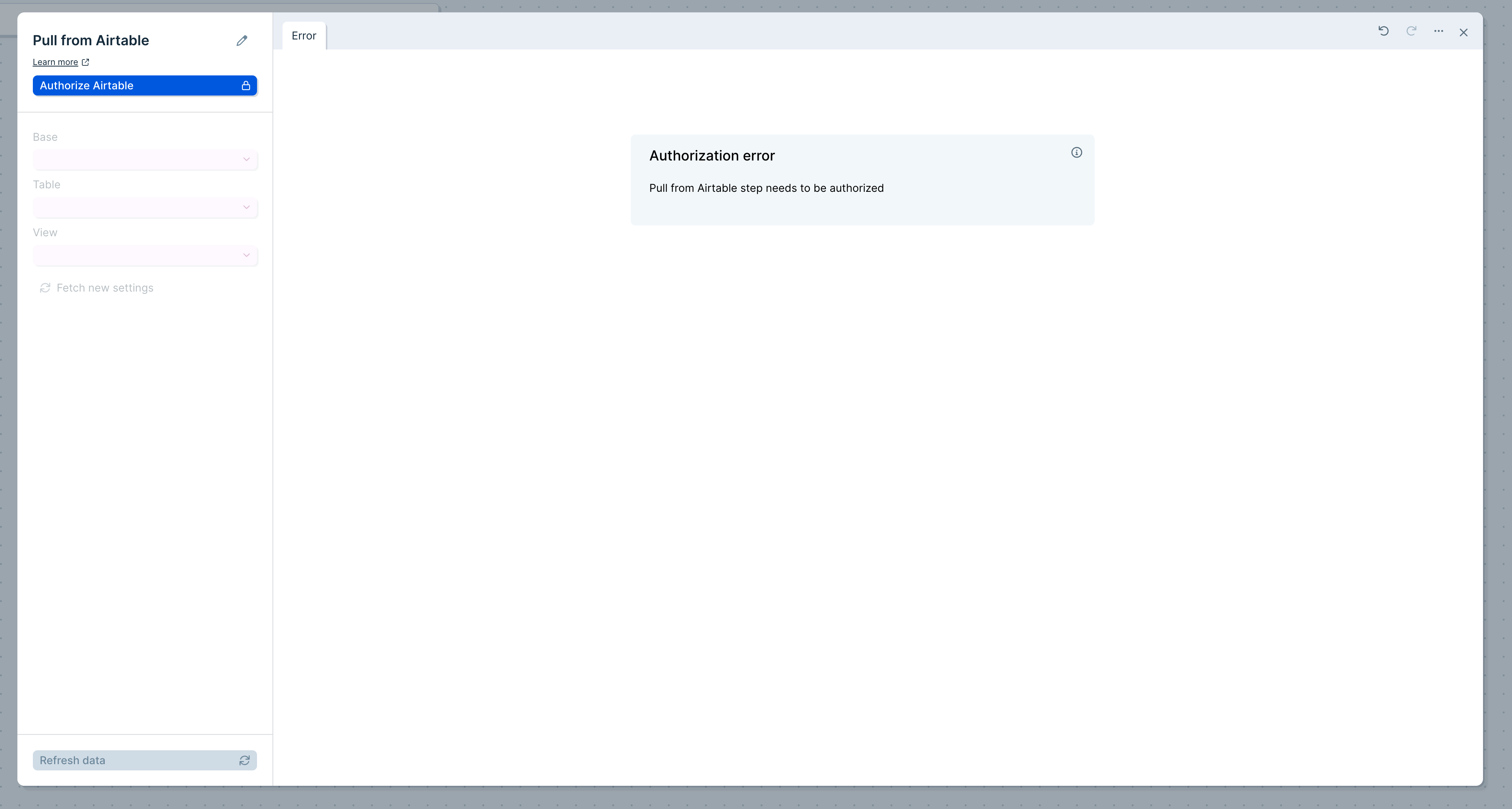

Use the Pull from Airtable step to pull in your data from your Airtable databases.

On August 1, 2023, Airtable will no longer allow users to generate new API keys. If you have a Pull from Airtable step that was authorized before July 27th, 2023 (using an API key for authentication), it will continue to pull in data until February 1, 2024. After that date, the step will no longer function. To migrate your step to the new authentication method, open the step, click "Choose Accounts" -> "Add new account". Once that authentication has been added to one step in your Flow, you can switch other Airtable steps to use it as well.

Connect your Airtable account

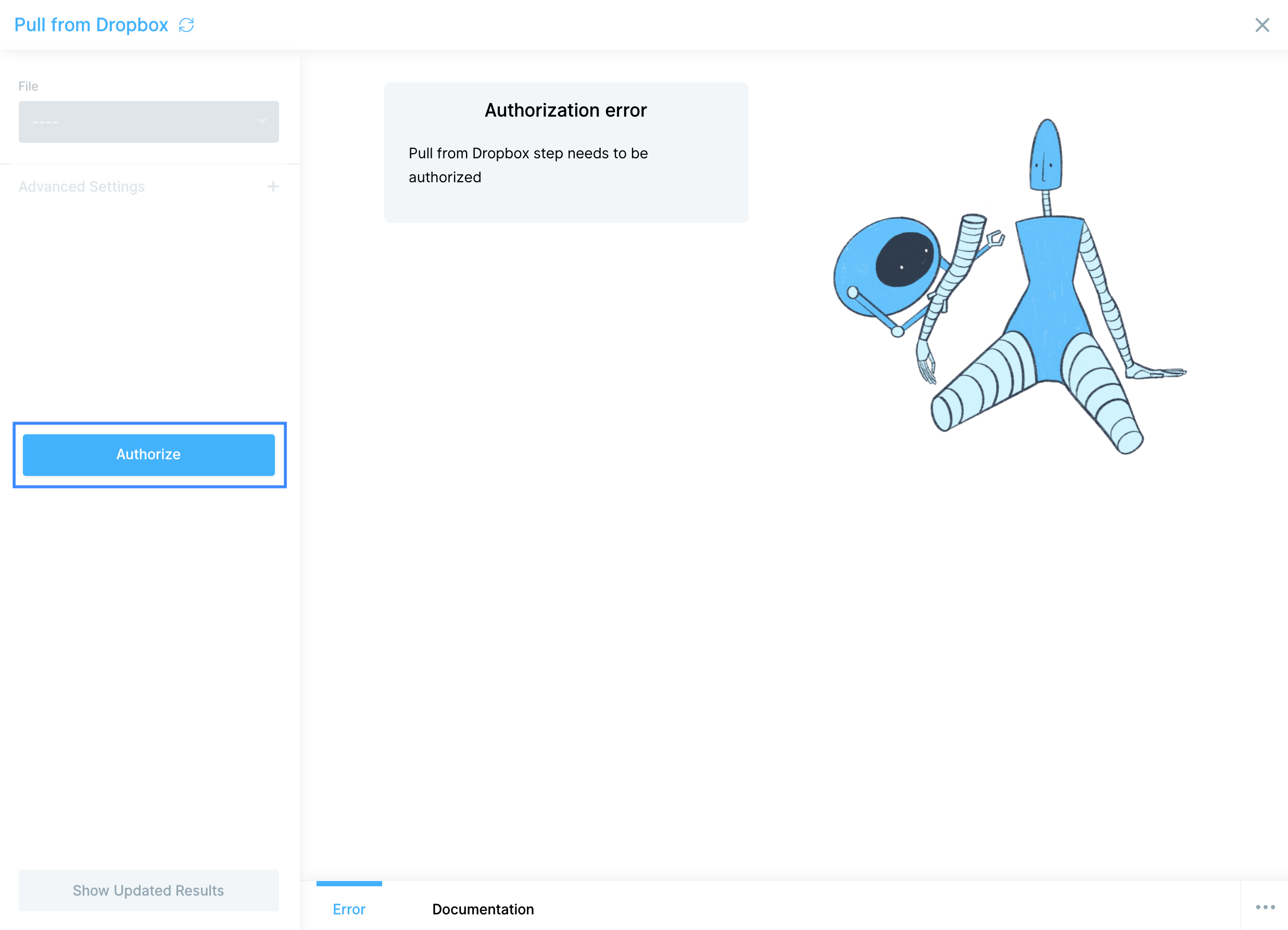

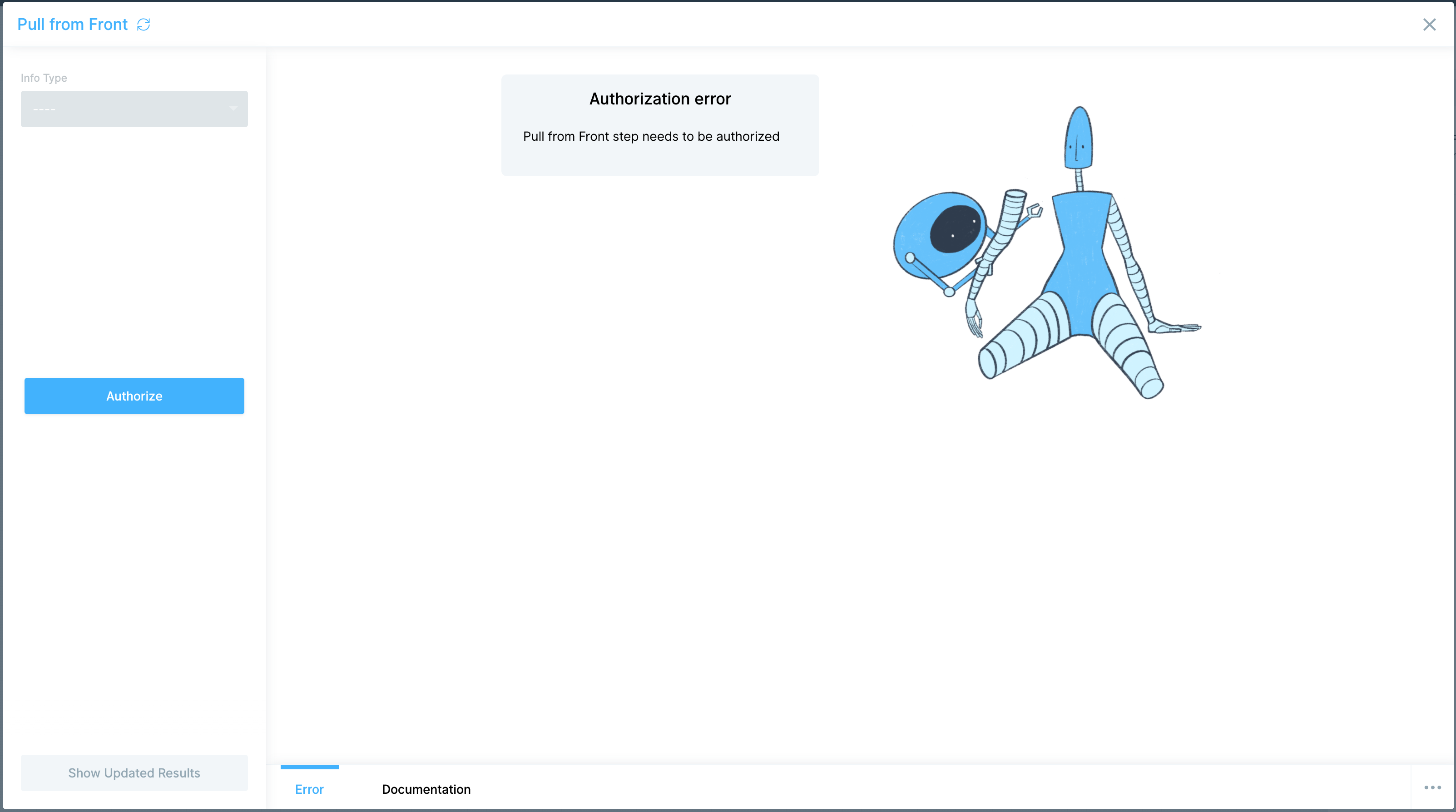

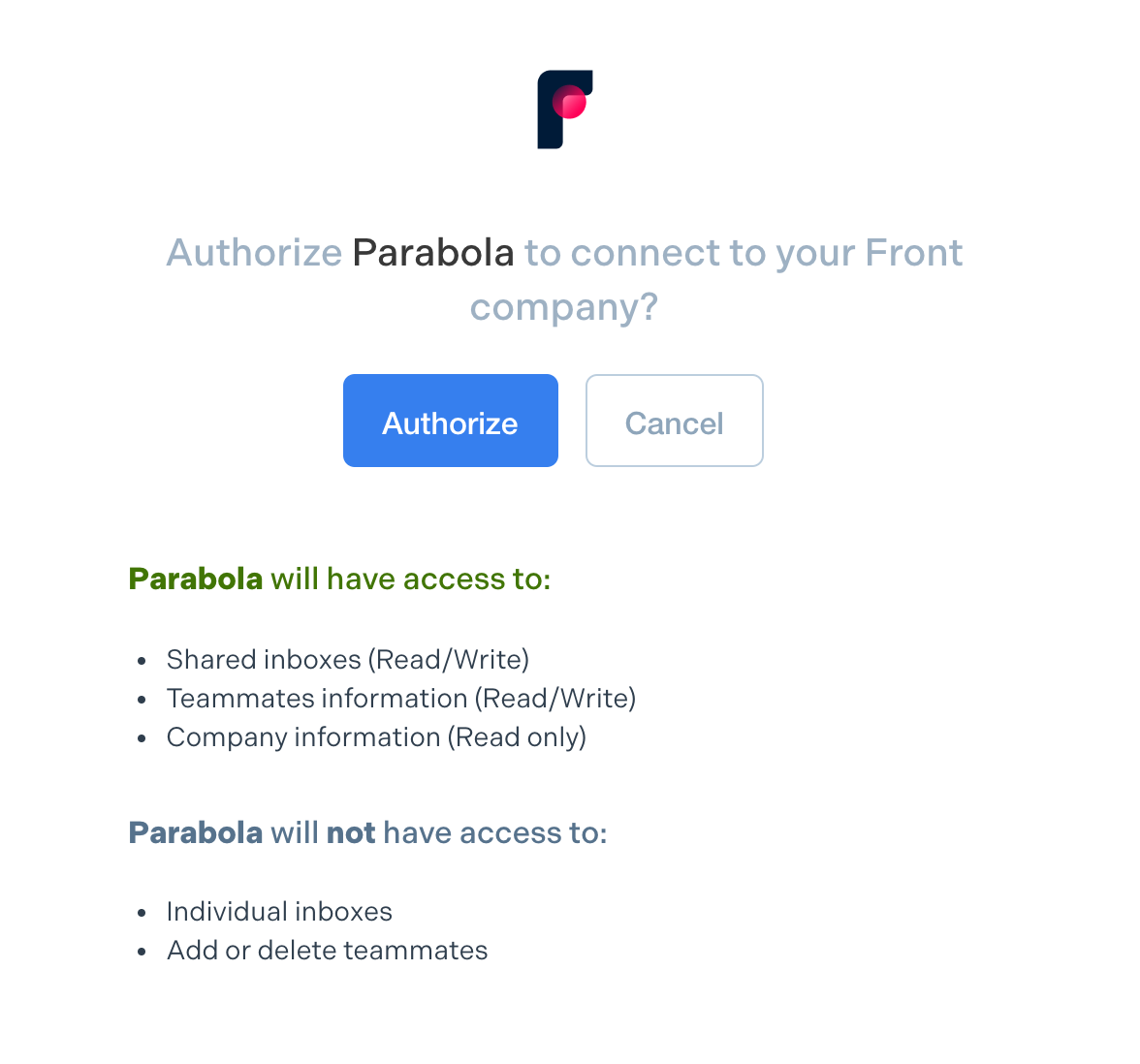

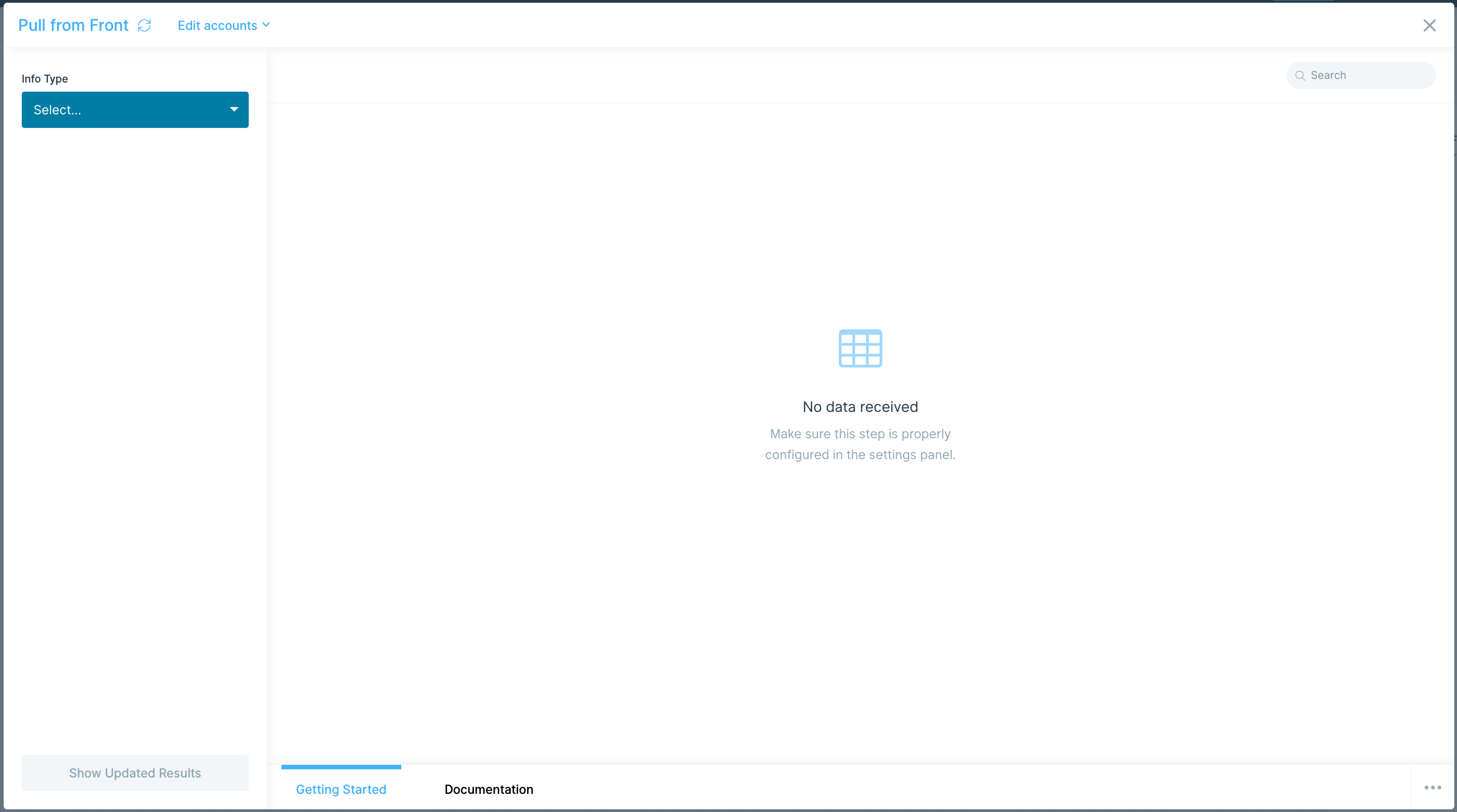

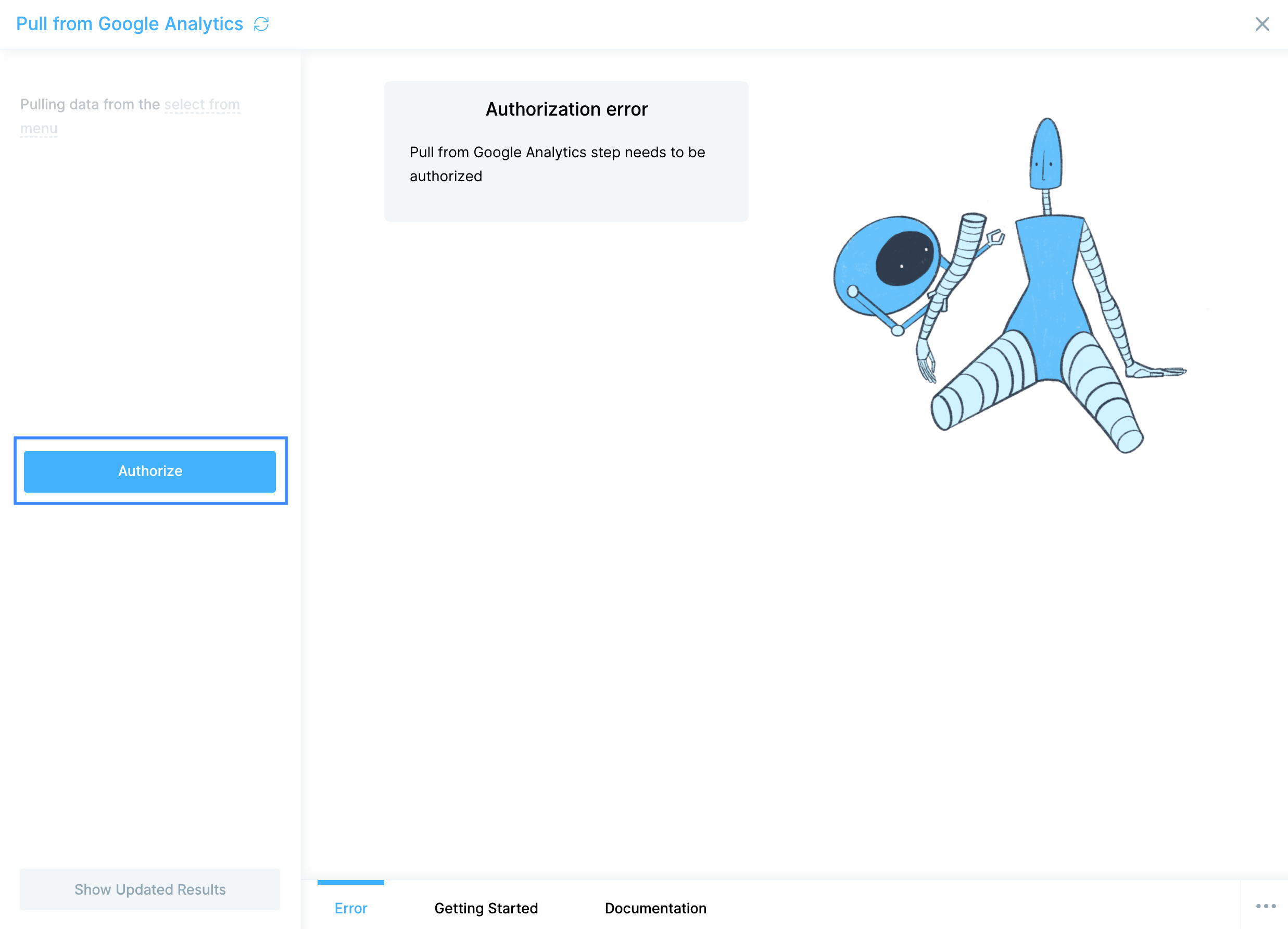

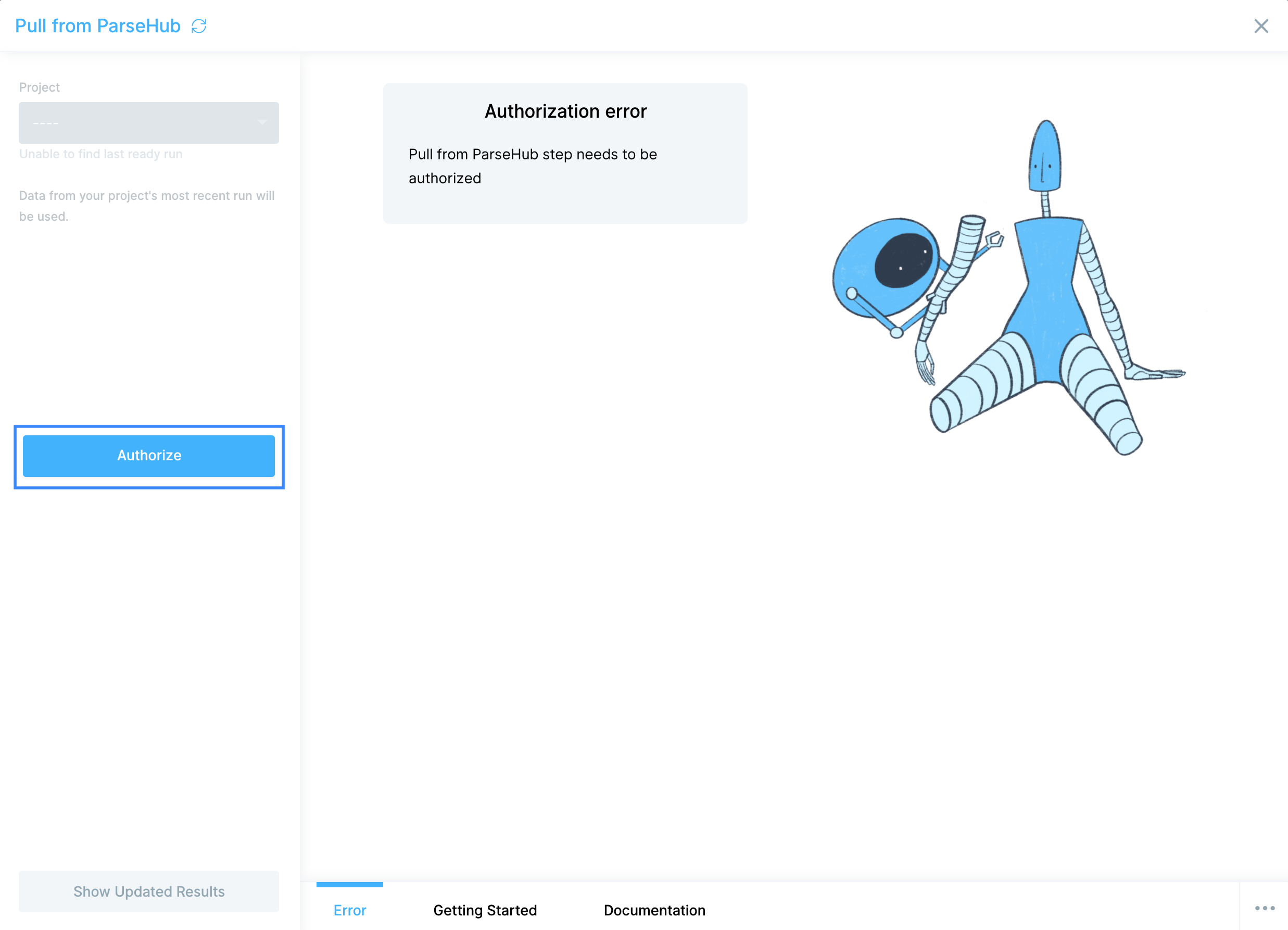

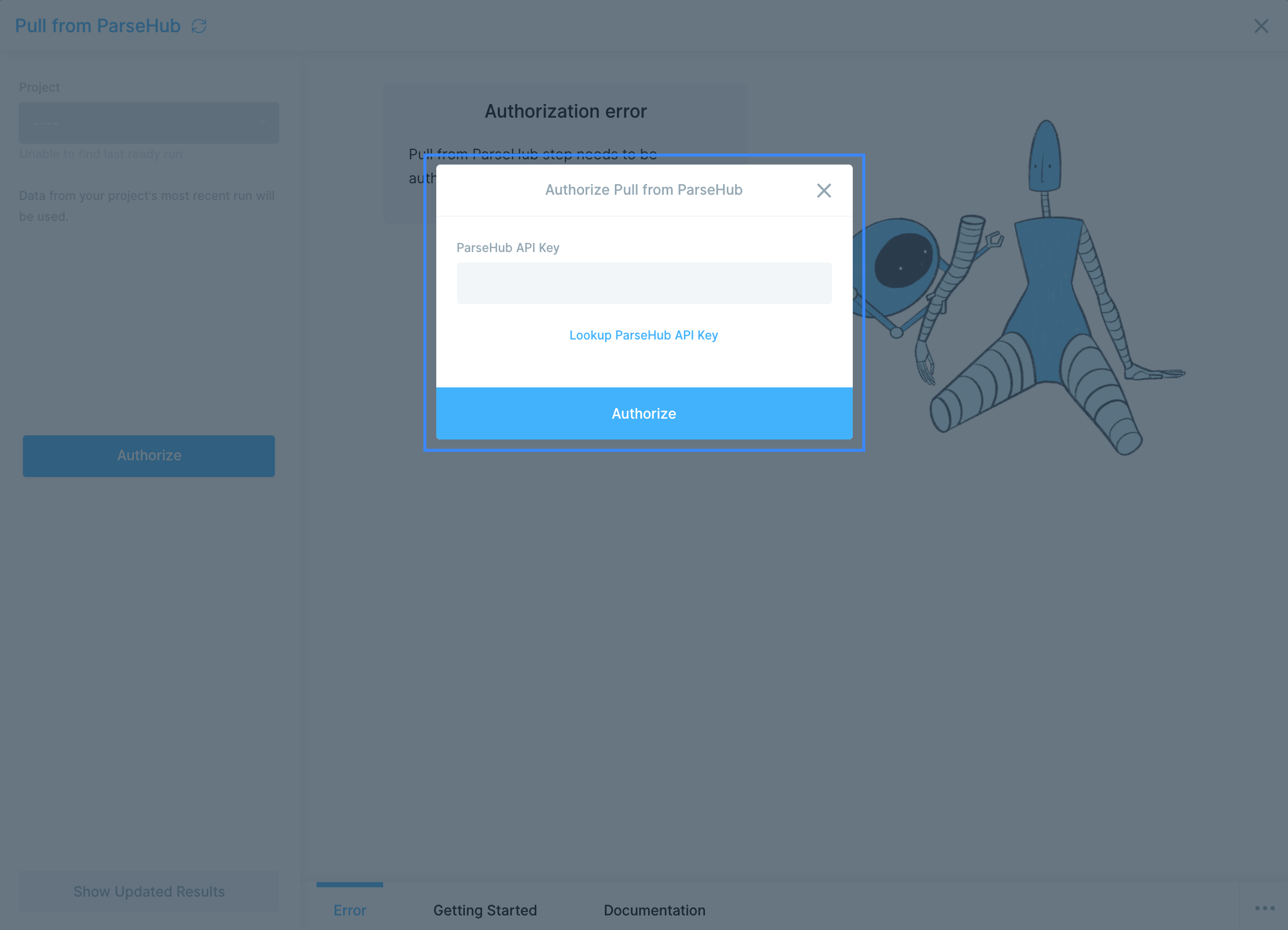

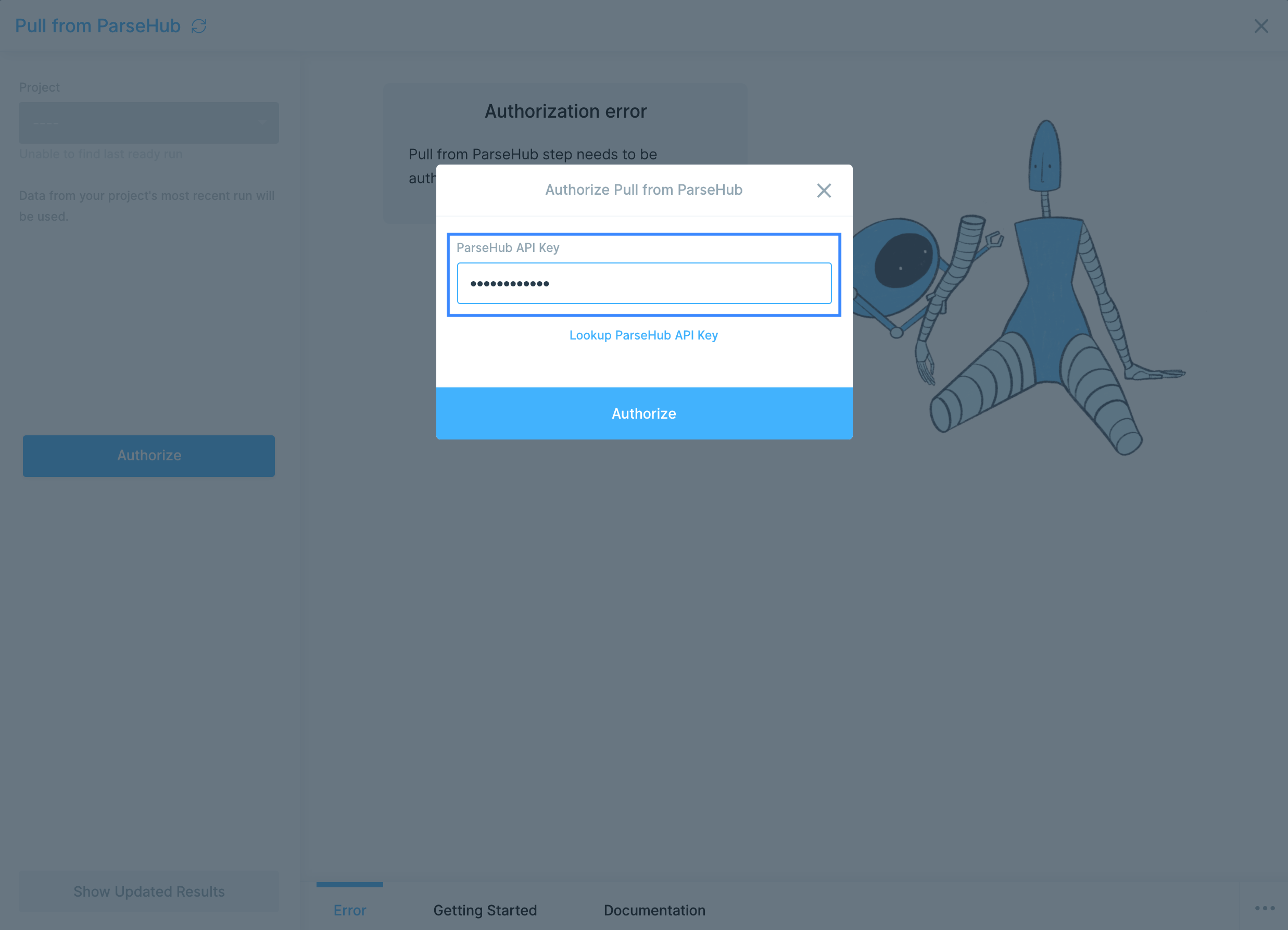

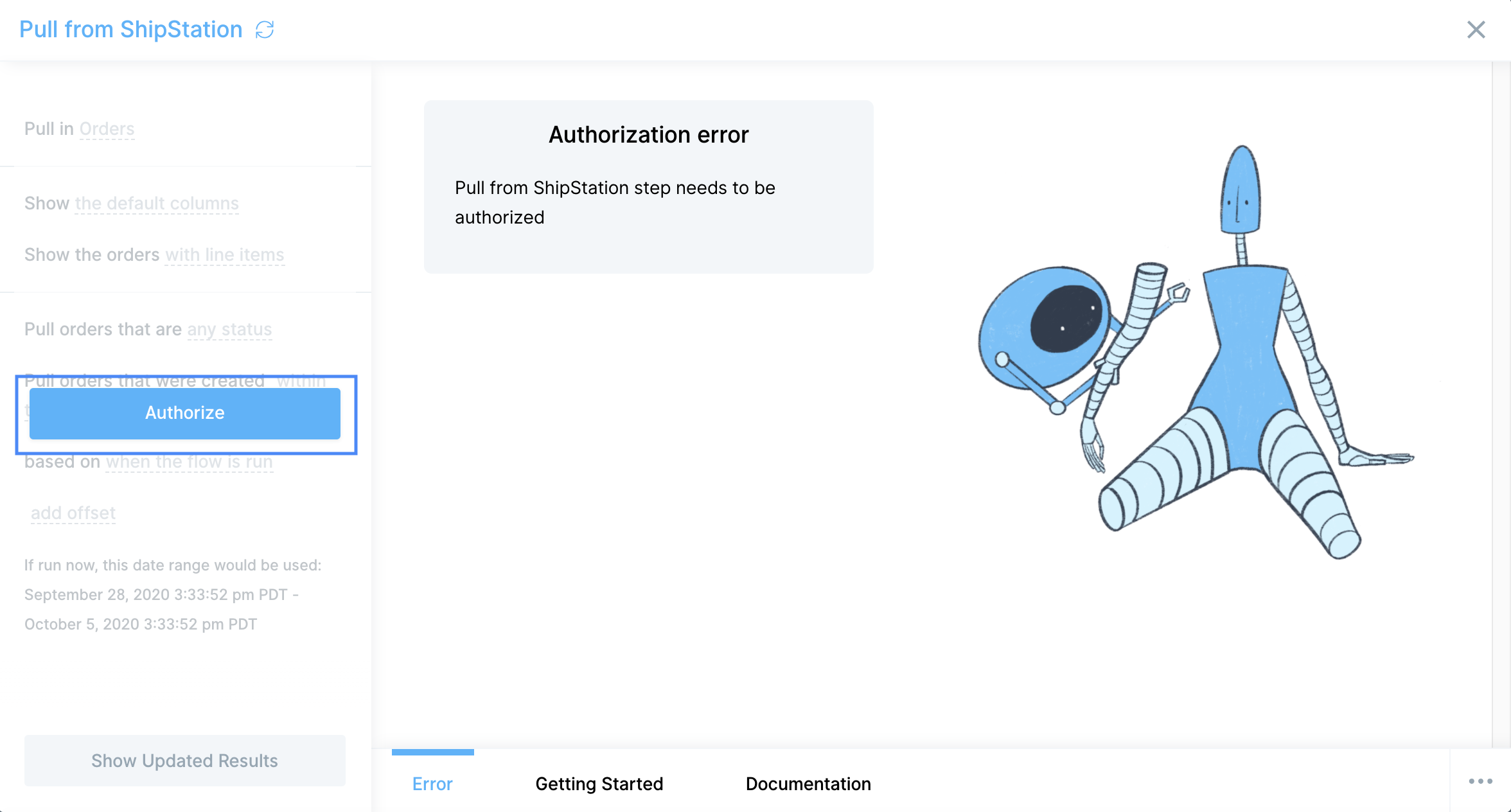

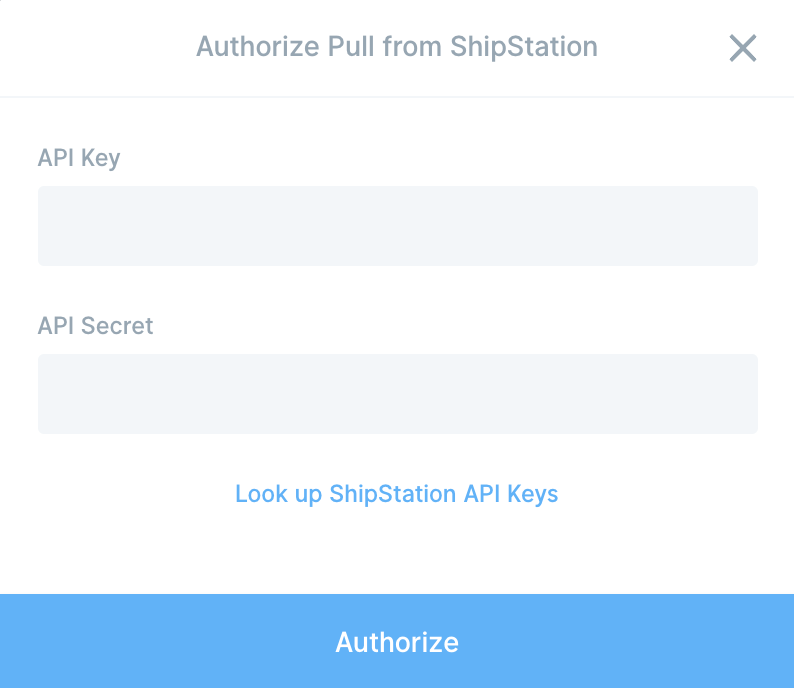

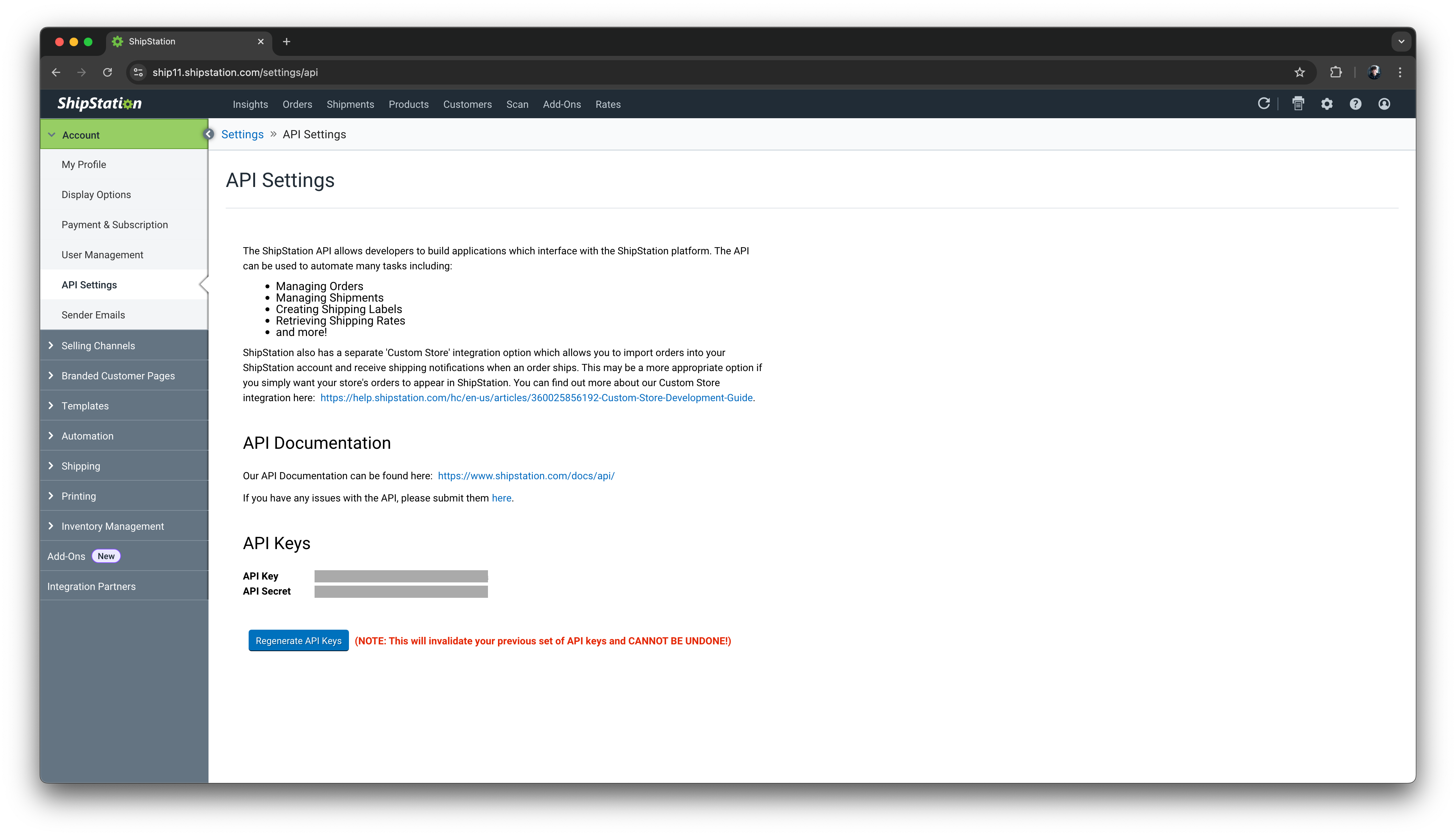

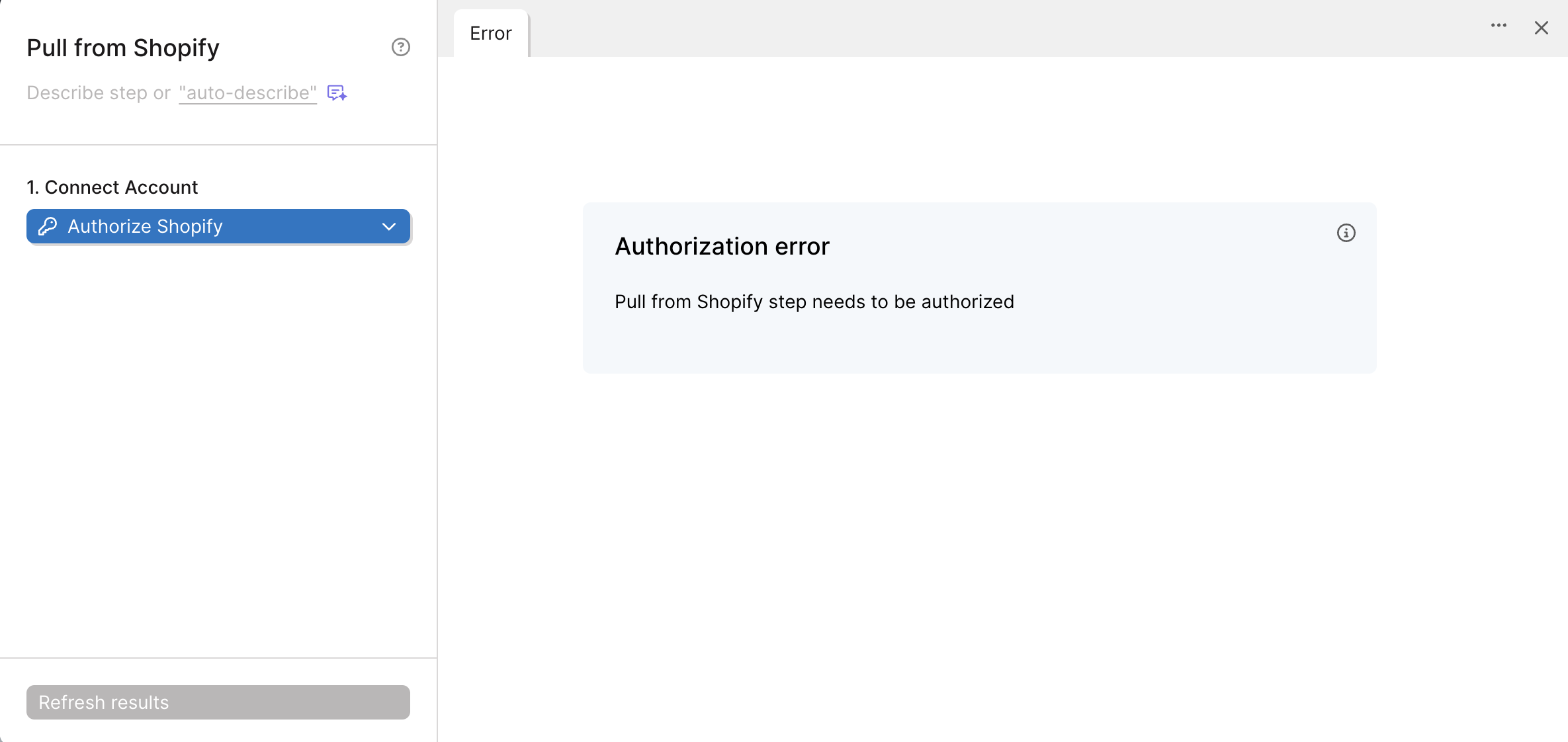

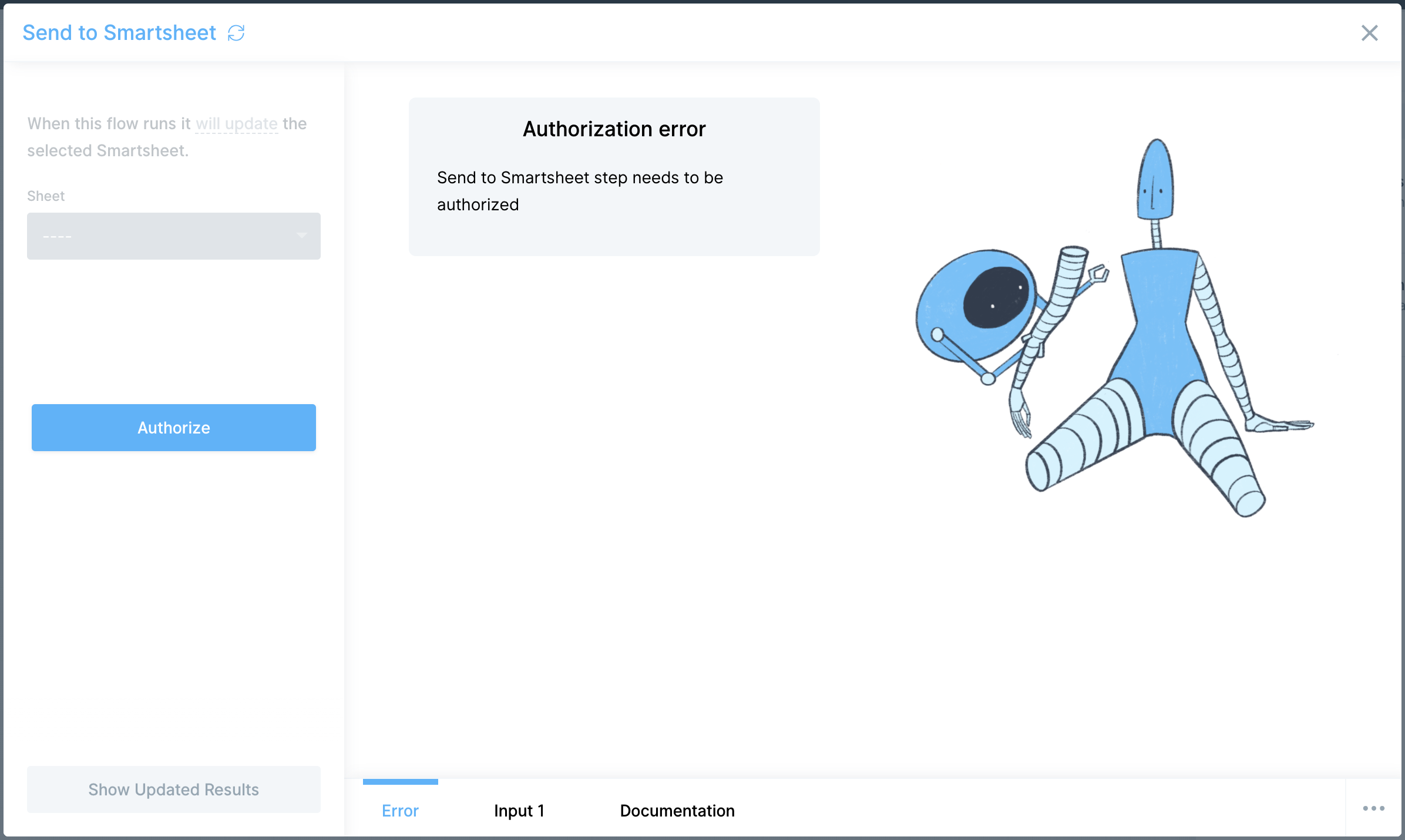

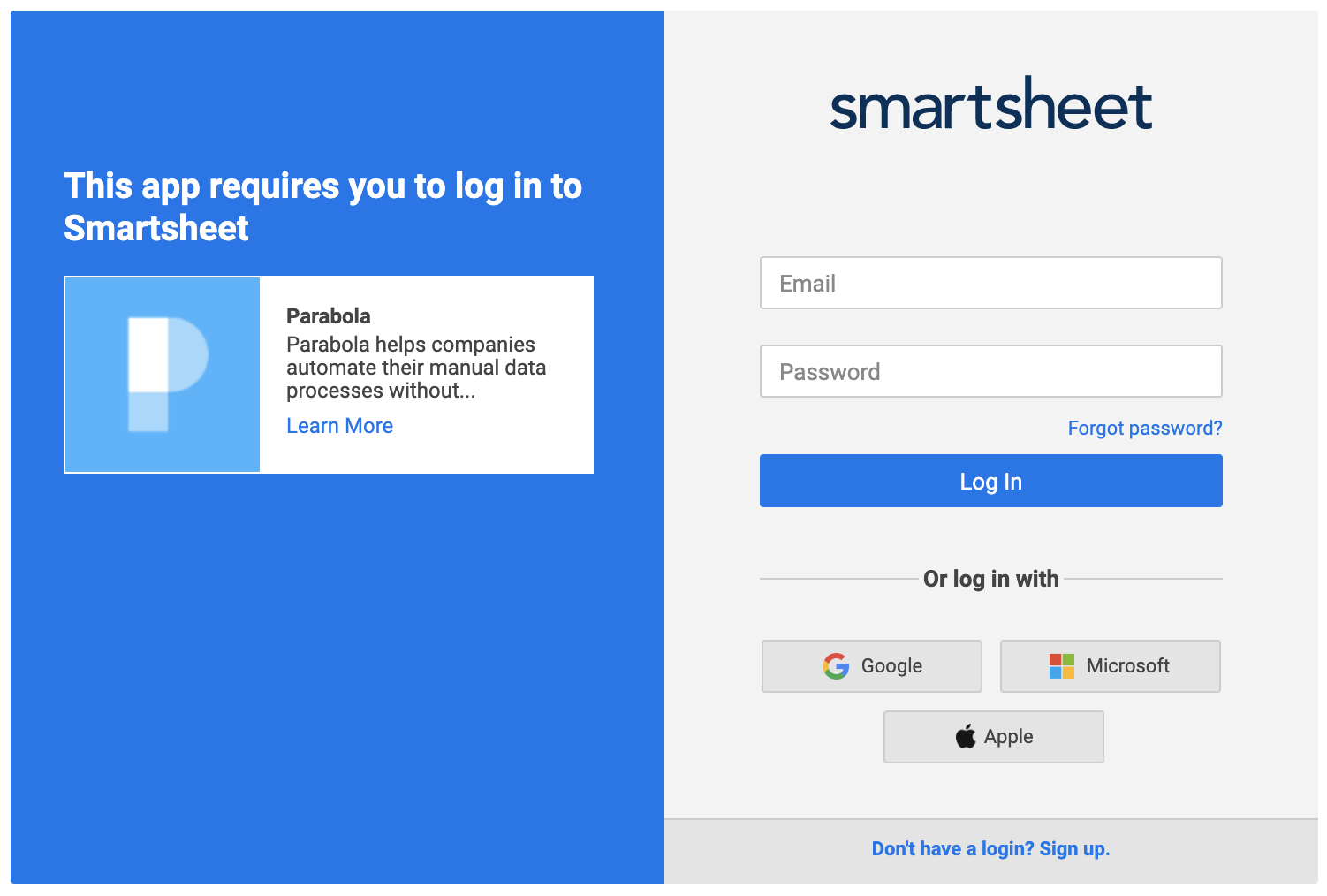

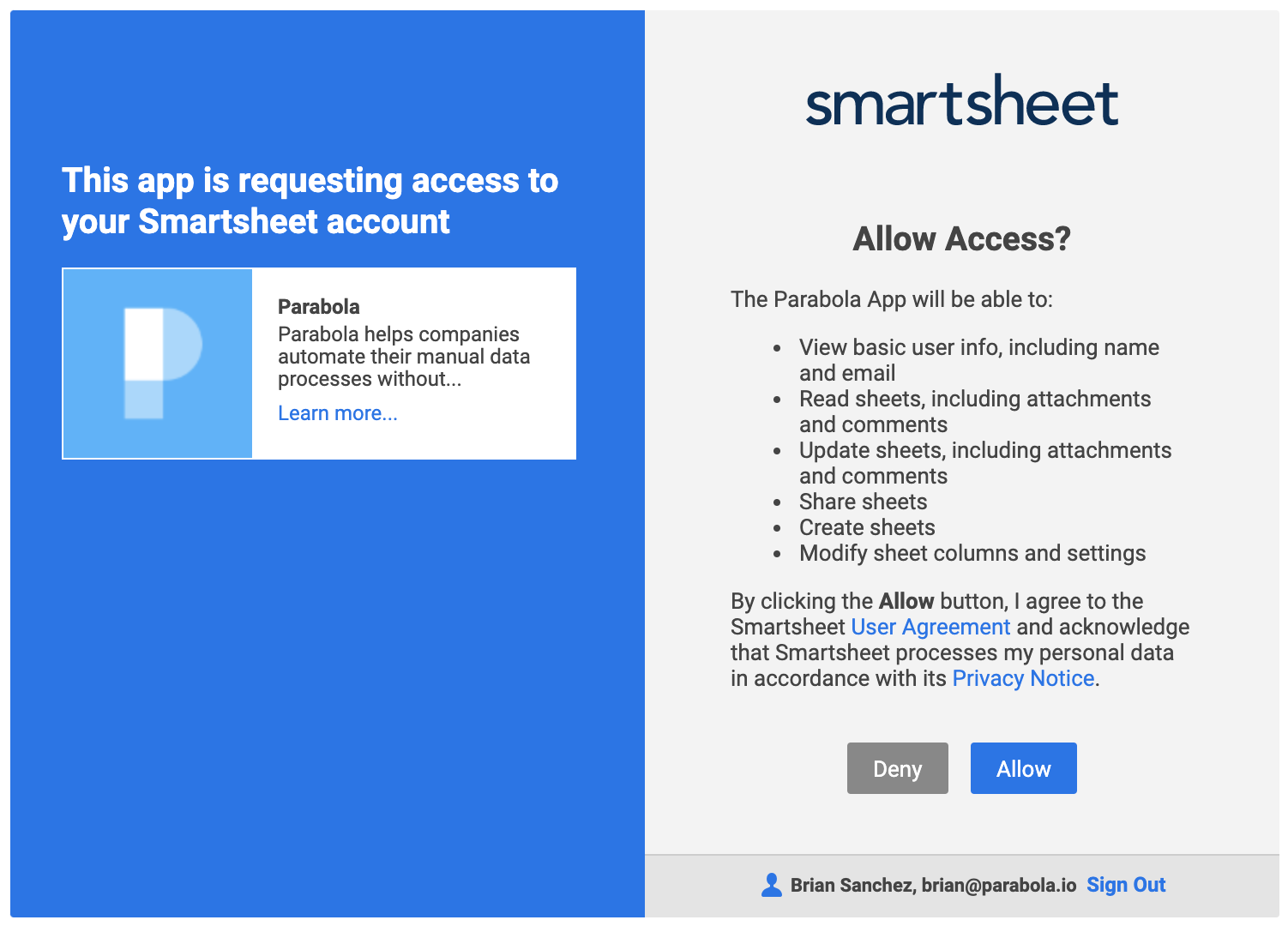

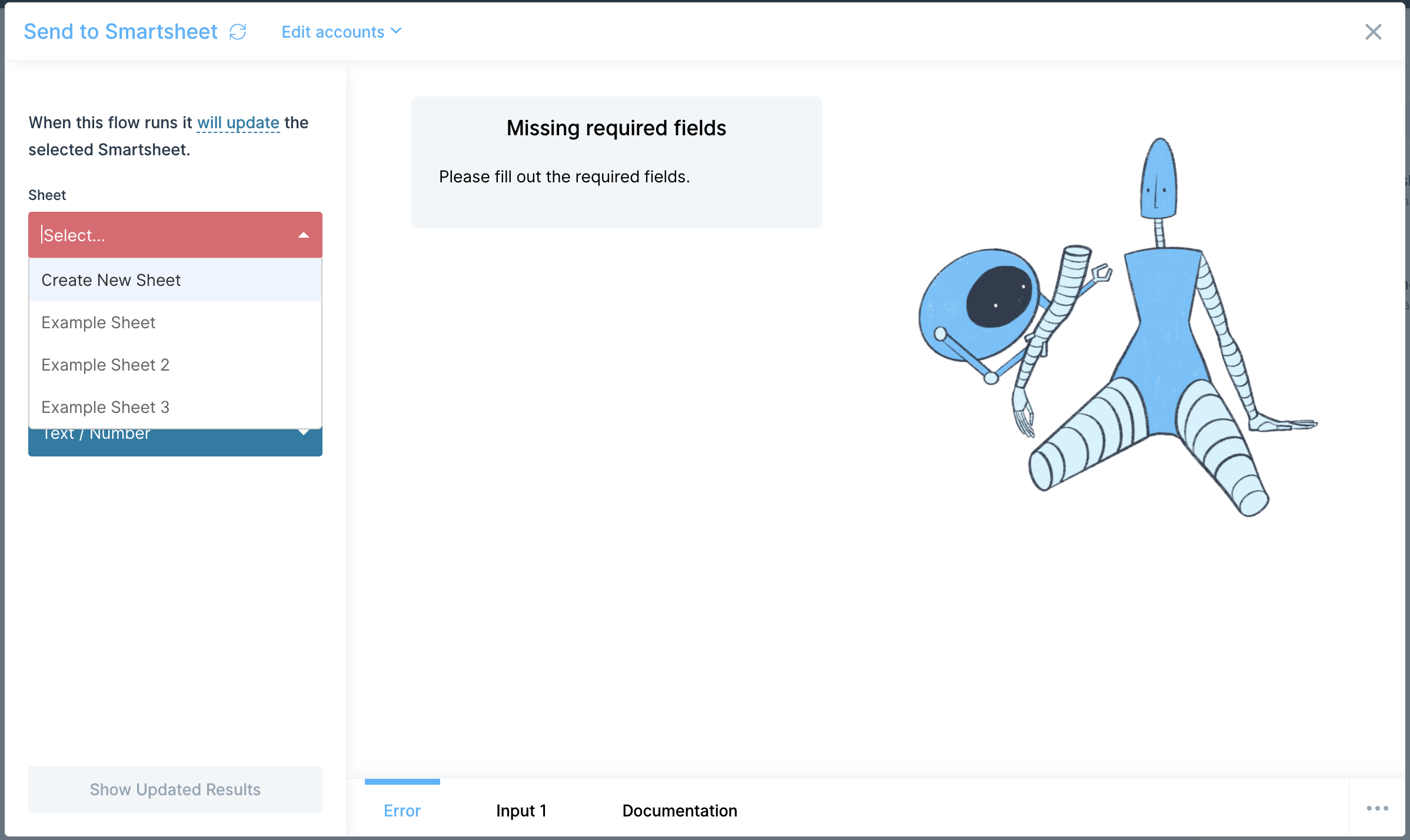

To connect to your Airtable account, click the blue Authorize button.

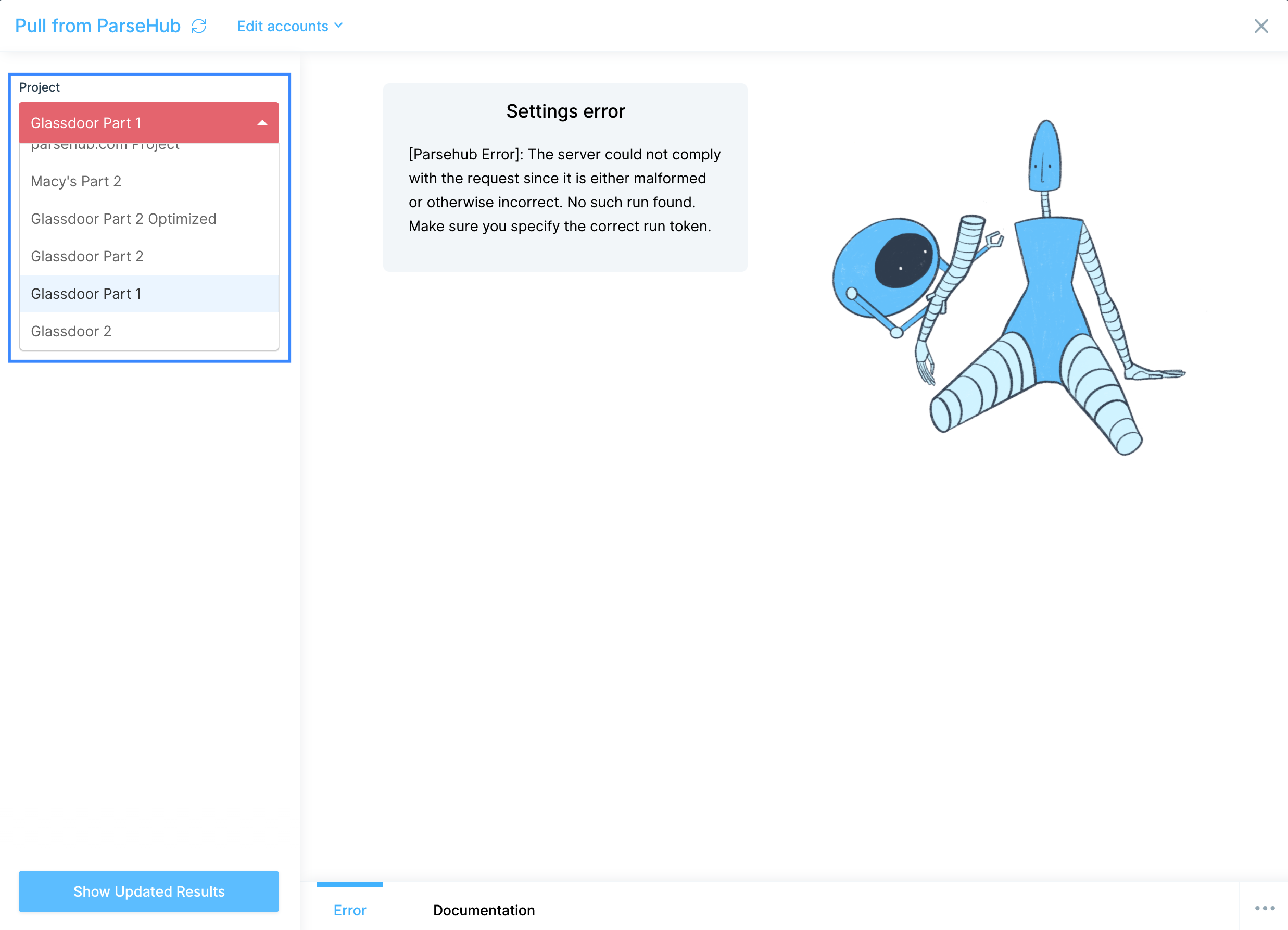

Clicking Authorize will launch a window where you can sign in to Airtable and confirm which bases you would like Parabola to have access to. Any base that you do not select from this menu will not be available to pull data from.

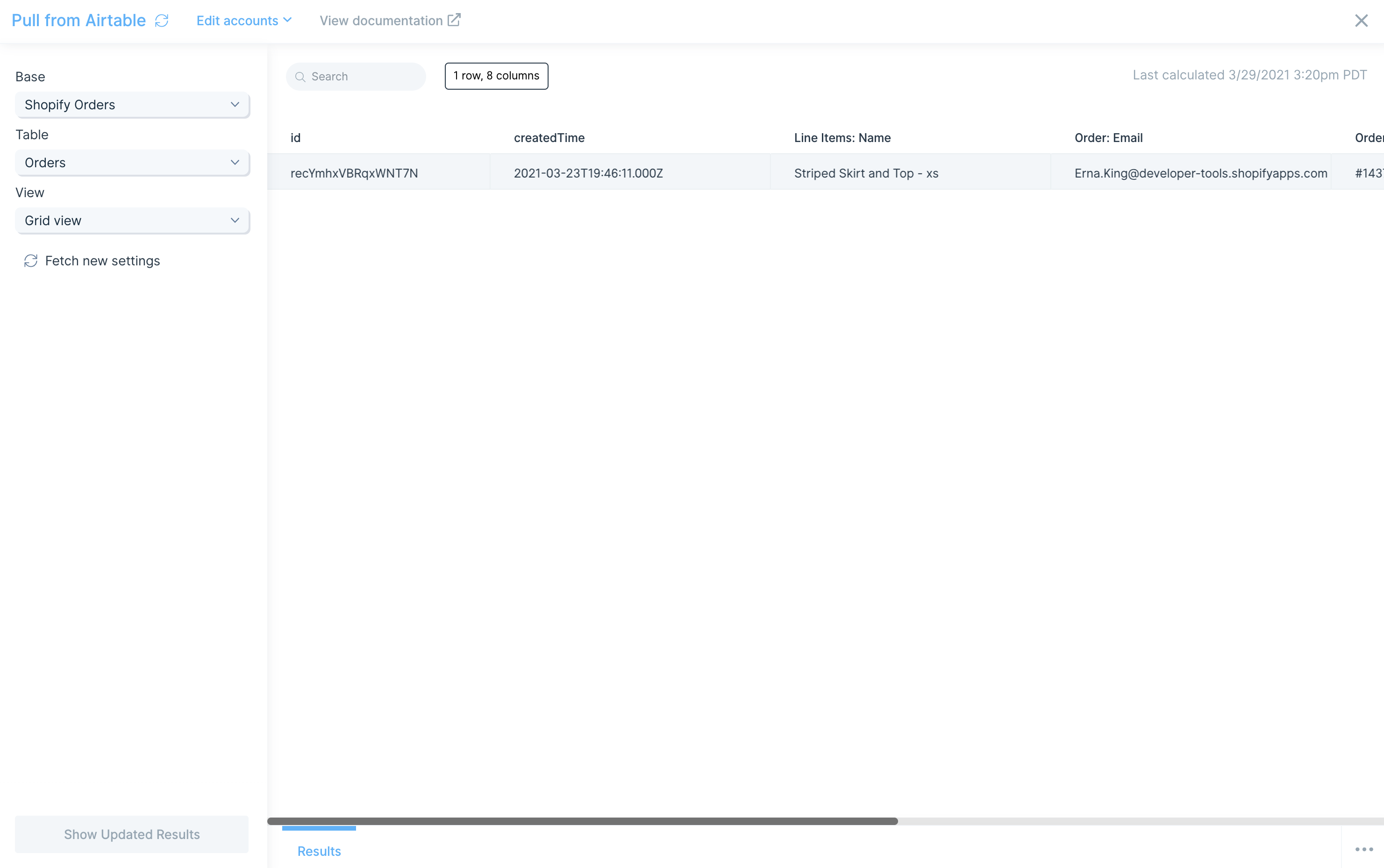

Custom settings

Once connected, you can select the Base, Table and View from your Airtable bases. In the example below, we are pulling data from our Shopify Orders base and our Orders table using the Grid view.

You can also click Fetch new settings to reload any bases, tables, or views since your data was last imported.

Helpful tips

Linked Records

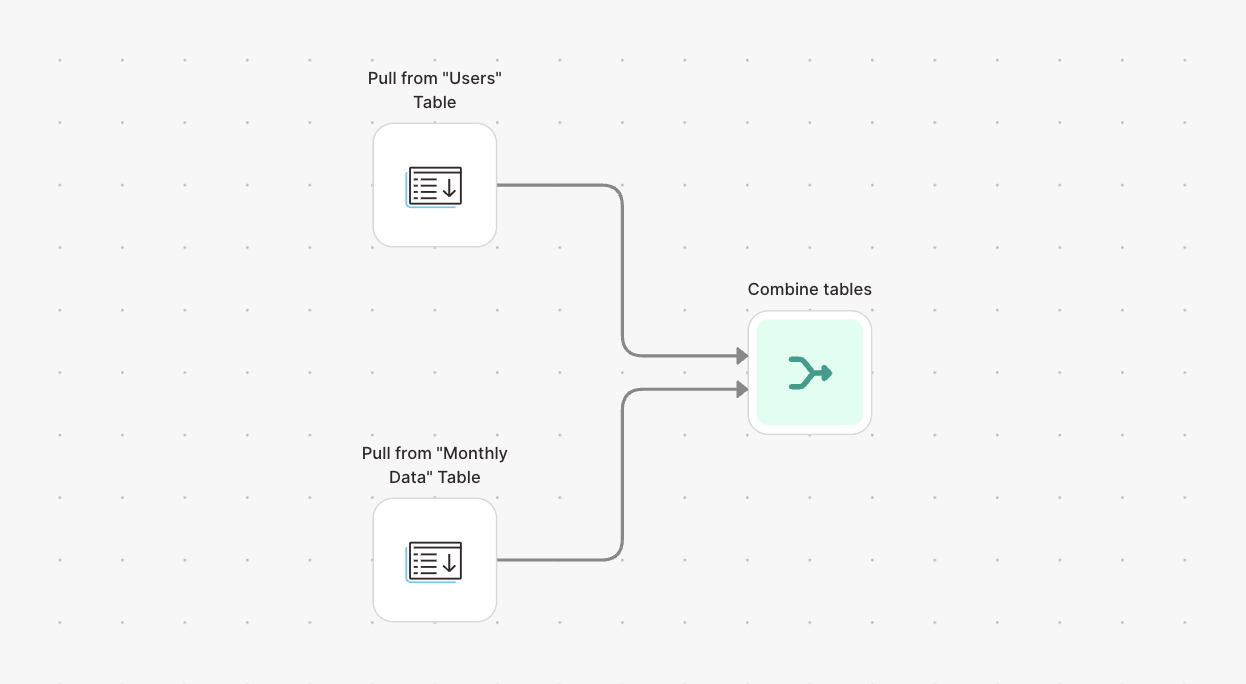

If your base uses linked records to connect tables, those values will be pulled in as record ids. To get the complete data associated with those records, use another Pull from Airtable step to import the related table. Using the Combine tables step, you can merge the tables together based on a shared record id.

Good to know

If a column has no values in it, that column will not be imported. There must be at least one value present in a row for the column itself to come through.

If a column has a duration in an h:mm format, Airtable exports duration value in millisecond units, parses incoming duration value using minutes. For example, Airtable sends 0:01 as 60.

Use the Send to Airtable step to create, update, or delete records in your Airtable base. Just map the fields in your Airtable base to the related columns in Parabola.

On August 1, 2023, Airtable will no longer allow users to generate new API keys. If you have a Send to Airtable step that was authorized before July 27th, 2023 (using an API key for authentication), it will continue to pull in data until February 1, 2024. After that date, the step will no longer function. To migrate your step to the new authentication method, open the step, click "Choose Accounts" -> "Add new account". Once that authentication has been added to one step in your Flow, you can switch other Airtable steps to use it as well.

Connect your Airtable account

To connect to your Airtable account, click the blue Authorize button.

Clicking Authorize will launch a window where you can sign in to Airtable and confirm which bases you would like Parabola to have access to. Any base that you do not select from this menu will not be available to pull data from.

Custom settings

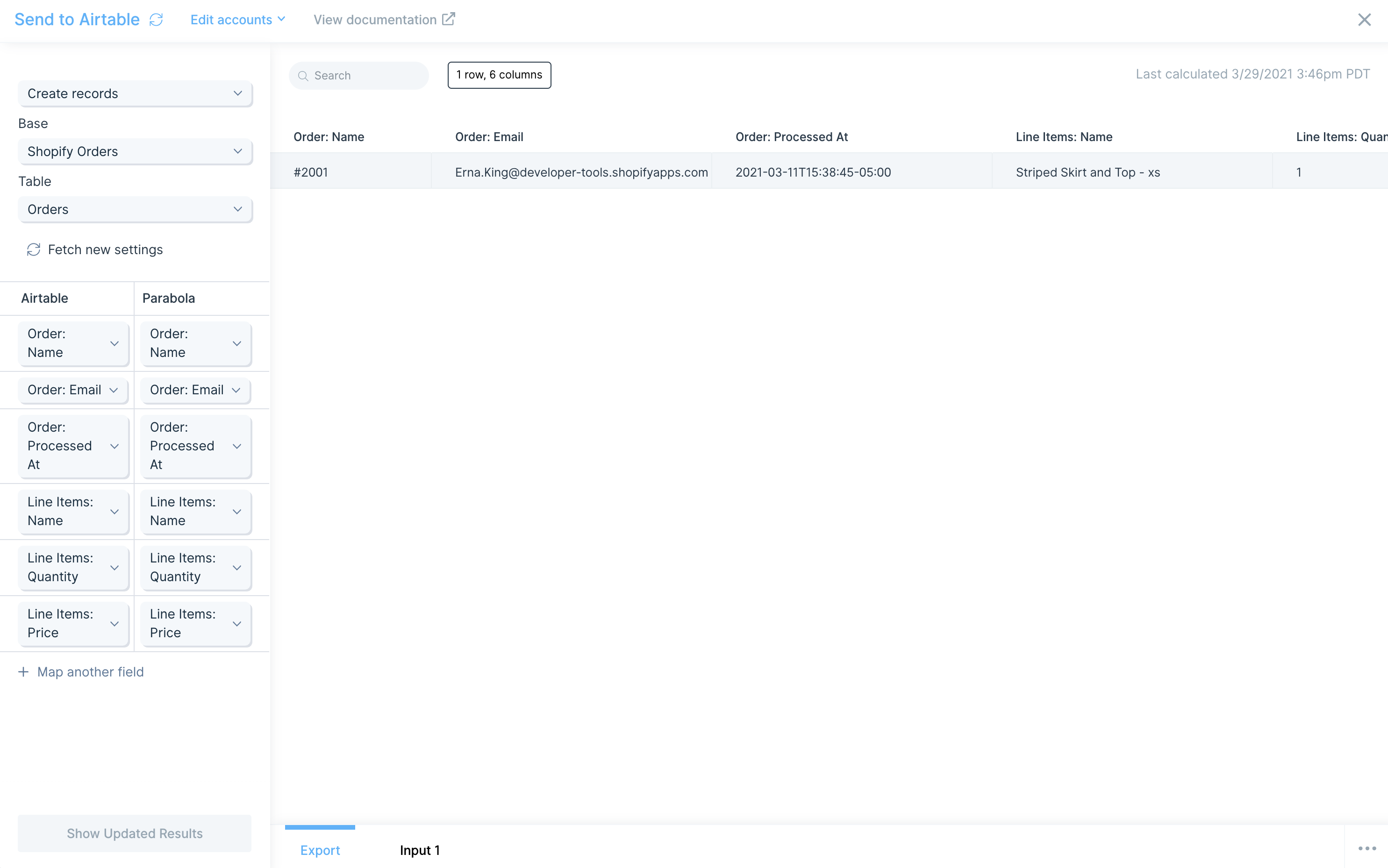

Once connected, you can chose to create records, update records, or delete records from the base and table of your choosing.

Creating records

In the example below, we are adding order #2001 to our Orders table within our Shopify Orders base.

Note how the Airtable fields are displayed on the left-hand side. Each of the columns from your Airtable base appears. On the right-hand side, map the values from our Parabola data to be added into those fields.

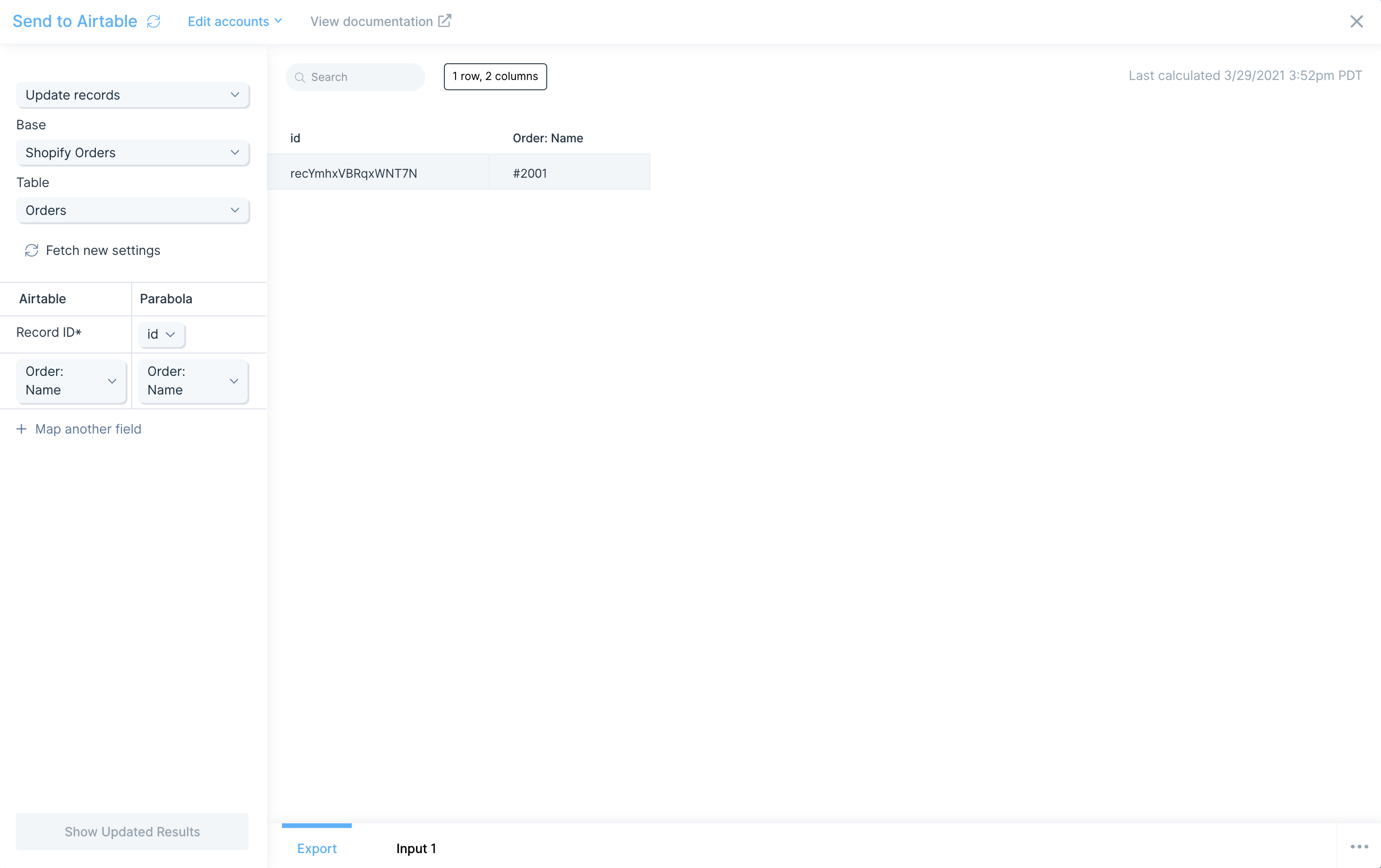

Updating records

You can also target a specific record to be updated. Map the Record ID* to the id column in Parabola that contains that data. You can also chose the specific fields you want to update.

In this example, we are updating the Order: Name of record recYmhxVBRqxWNT7N.

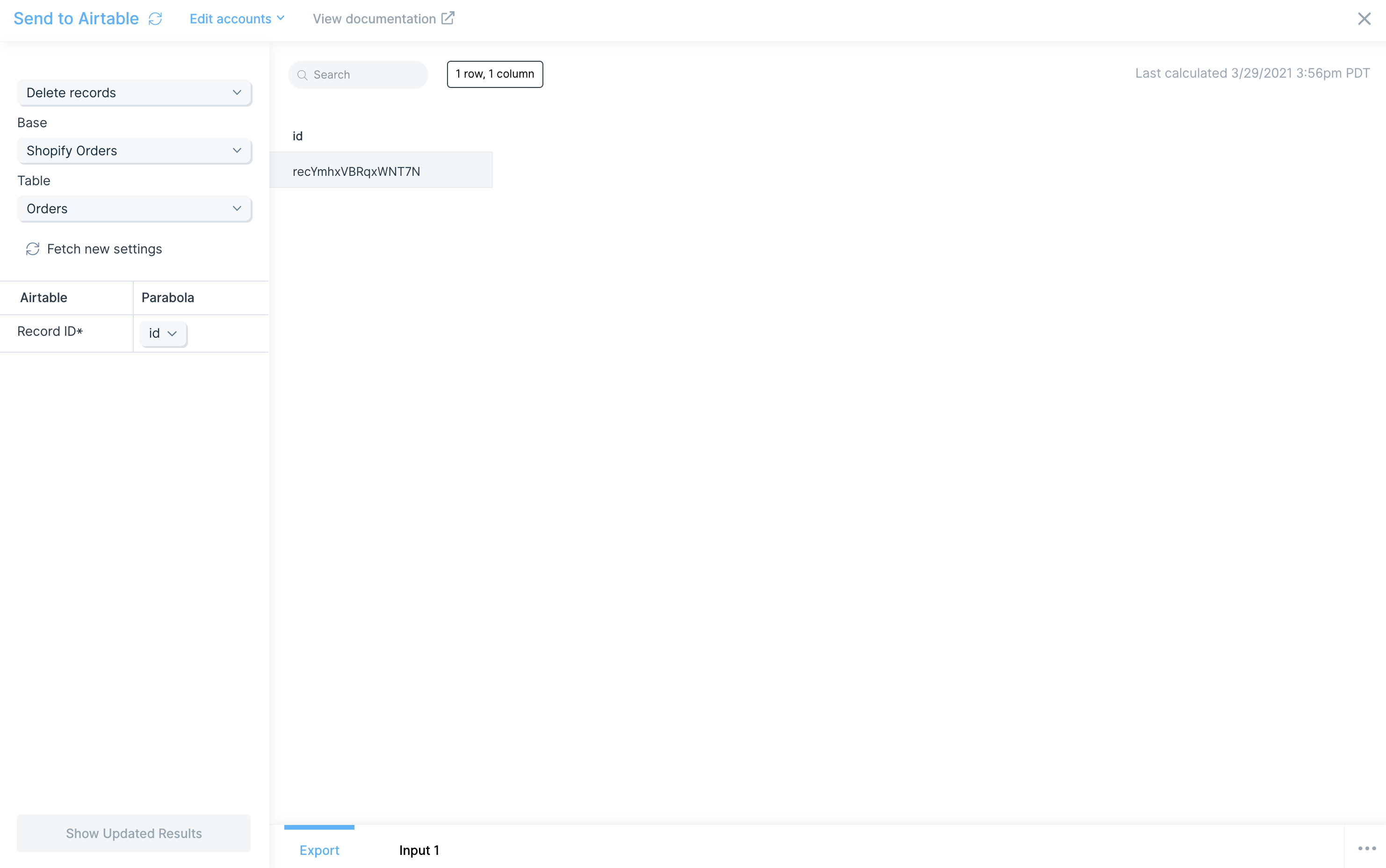

Deleting records

To delete a record, simply map the Record ID* to the id column in Parabola. In this example, we are deleting record recYmhxVBRqxWNT7N.

Helpful tips

Percentages

Convert your percentages to decimal values using before sending data to Airtable. For example, if your data contains 0.51%, convert that to 0.0051 and adjust your precision values in Airtable. By default, Airtable may interpret that as 0.01%.

Single select/Multi select

You can automatically pass the values of your select options to set those values in your Airtable base. If you enter a select option that does not exist, Airtable will automatically create new select option for that value.

Duration using h:mm format

Airtable parses incoming duration value using minutes. For example, if your duration is 60 milliseconds, Airtable will parse that value as 1:00.

Checkboxes

Set a value of true to toggle a checkbox in your table. Set a value of false to un-toggle a checkbox in your table.

Collaborators

When updating Airtable column with field type collaborator, you can pass in an id or email value. Passing a name value will return an error of "Cannot parse value".

Integration:

Amazon Seller Central

Use the Pull from Amazon Seller Central step to import Amazon reports into your flow.

Set up the step

- Drag the Pull from Amazon Seller Central step onto the canvas.

- Click "Authorize Amazon Seller".

- In the pop-up that appears, log in to your Amazon Seller Central account to connect it to Parabola.

Configure your settings

.png)

Troubleshooting "fatal error" response from Amazon

Potential reasons for fatal errors from Amazon

- No data is available for the date range specified.

- The date range doesn’t follow Amazon’s specifications. Some report types have minimum and maximum date range limits. Check the description of your selected report type for details.

- The connected seller account doesn’t sell in the marketplace(s) specified in the request.

- You’ve made the same exact report request too many times in a row. In rare cases, this can result in a fatal error response from Amazon.

Potential solutions

- Try adjusting your date range to a smaller or different window.

- Manually confirm in Amazon Seller Central that data exists for the date range you’re requesting.

- While this behavior isn’t confirmed in Amazon’s official documentation, several users have observed it. Try spacing out repeated report requests (try again in 24 hours) if you suspect that this might be the cause of the fatal errors.

Helpful tips

- This step pulls from Amazon’s Reporting API. If you need data from the Orders or Customers APIs, look for reports that already contain that information.

- At this time, we are unable to pull reports from the Easy Ship and Orders report categories. (Last update: October 7, 2025)

- There are two types of inventory reports: Inventory and Fulfillment by Amazon (FBA) Inventory. Check both if you’re unsure where your dataset lives. Inventory reports cover products you fulfill directly, while FBA Inventory reports cover products Amazon fulfills on your behalf.

- Amazon’s API can take up to an hour to return report results. Limit the timeframe or data size when possible to reduce wait times.

- The default timezone matches your browser. You can adjust this if needed. Parabola converts your timeframe and timezone to UTC when requesting the report.

- If a report exists in Amazon Seller Central but isn’t available in Parabola, contact us at help@parabola.io.

Integration:

BigQuery

How to authenticate

BigQuery uses Google authentication to authorize the connection.

- In your Parabola flow, add a Pull from BigQuery step.

- Open one of the Pull from BigQuery steps and click Authorize and sign in to your Google account, giving Parabola the requested permissions.

- Each Pull from BigQuery step can re-use your authentication; make sure each step is configured to use your credentials.

Parabola will securely store your credentials and use them to authenticate each request to BigQuery.

Required IAM Permissions in Google

projects.list:

- bigquery.projects.list (or resourcemanager.projects.list)

- Allows listing projects the user can access

jobs.query:

- bigquery.jobs.create — create/run query jobs

- bigquery.tables.getData — read data from the tables referenced in the query

- Note: Users need table-level access for each table they query

jobs.getQueryResults:

- bigquery.jobs.get — retrieve query job results

Recommended IAM Roles

- roles/bigquery.user — includes bigquery.jobs.create and bigquery.jobs.get

- roles/bigquery.jobUser — includes bigquery.jobs.create and bigquery.jobs.get (more limited than bigquery.user)

- roles/viewer or roles/browser — includes resourcemanager.projects.list (for listing projects)

Plus dataset/table access:

- roles/bigquery.dataViewer — read access to specific datasets/tables

- Or grant bigquery.tables.getData on the specific tables/datasets they need to query

Minimum setup: Assign roles/bigquery.user (or roles/bigquery.jobUser) plus roles/viewer (for project listing), and ensure users have read access to the datasets/tables they query (via roles/bigquery.dataViewer or dataset-level permissions).

Available data

Using the BigQuery integration in Parabola, you can run custom SQL queries against any dataset and table in your BigQuery projects. Write Standard SQL queries to filter, aggregate, join, and transform data from your BigQuery tables—try using the chat interface on the left-hand side of your canvas to help write your SQL queries.

Here’s how to use the available connections to run a SQL query:

- Use the List Projects step if you need to find a BigQuery project ID. Increase the "Total pages limit" value if you need to see more projects.

- Use the Send Query (Run a SQL query) and Pull results from BigQuery (Poll for query results) steps to submit a query and get all results. The Custom transform will create a table of results from your query results.

The integration supports full SQL query capabilities, allowing you to leverage BigQuery's powerful analytics engine to prepare data exactly as needed before pulling it into Parabola.

Common use cases

- Automate recurring reports daily or weekly, then export results to Google Sheets, email them to stakeholders, or push them to other systems.

- Combine BigQuery analytics data with operational data from systems like Shopify, NetSuite, or your warehouse management system to create unified views and spot discrepancies.

- Query BigQuery tables on a schedule to check for data freshness, validate row counts, and flag anomalies—then send alerts via Slack or email when issues are detected.

- Pull summarized data from BigQuery and combine it with real-time metrics from other sources to create comprehensive dashboards that update automatically.

- Run complex analytical queries in BigQuery, pull the results into Parabola, and use them to trigger downstream workflows like updating inventory systems or sending targeted communications.

Tips for using Parabola with BigQuery

- Schedule your flows strategically to run on the cadence your team needs: hourly for real-time dashboards, daily for standard reporting, or weekly for executive summaries.

- Write efficient SQL queries to filter, aggregate, and transform data in your SQL query before pulling it into Parabola. This reduces data transfer, speeds up your flow, and optimizes BigQuery costs.

- Leverage parameterized queries: When building dynamic queries based on dates or other variables, use BigQuery's parameterized query features for better performance and security.

- Handle pagination for large results: For queries returning many rows, use the pagination settings in Parabola to efficiently retrieve all results without timeout issues.

- Validate your BigQuery data with steps in Parabola that flag unexpected values, missing data, or anomalies before the data flows to downstream systems.

- Document your SQL logic with cards in Parabola to explain what each query does and why specific filters or transformations are applied, making it easier for teammates to maintain your flows.

By connecting BigQuery with Parabola, you transform your data warehouse into an active part of your operational workflows—automating reports, reconciliations, and data-driven decisions without manual exports or custom code.

Integration:

Box

The Pull from Box step gives you the ability to pull a CSV or Excel file from your Box account.

Connect your Box account

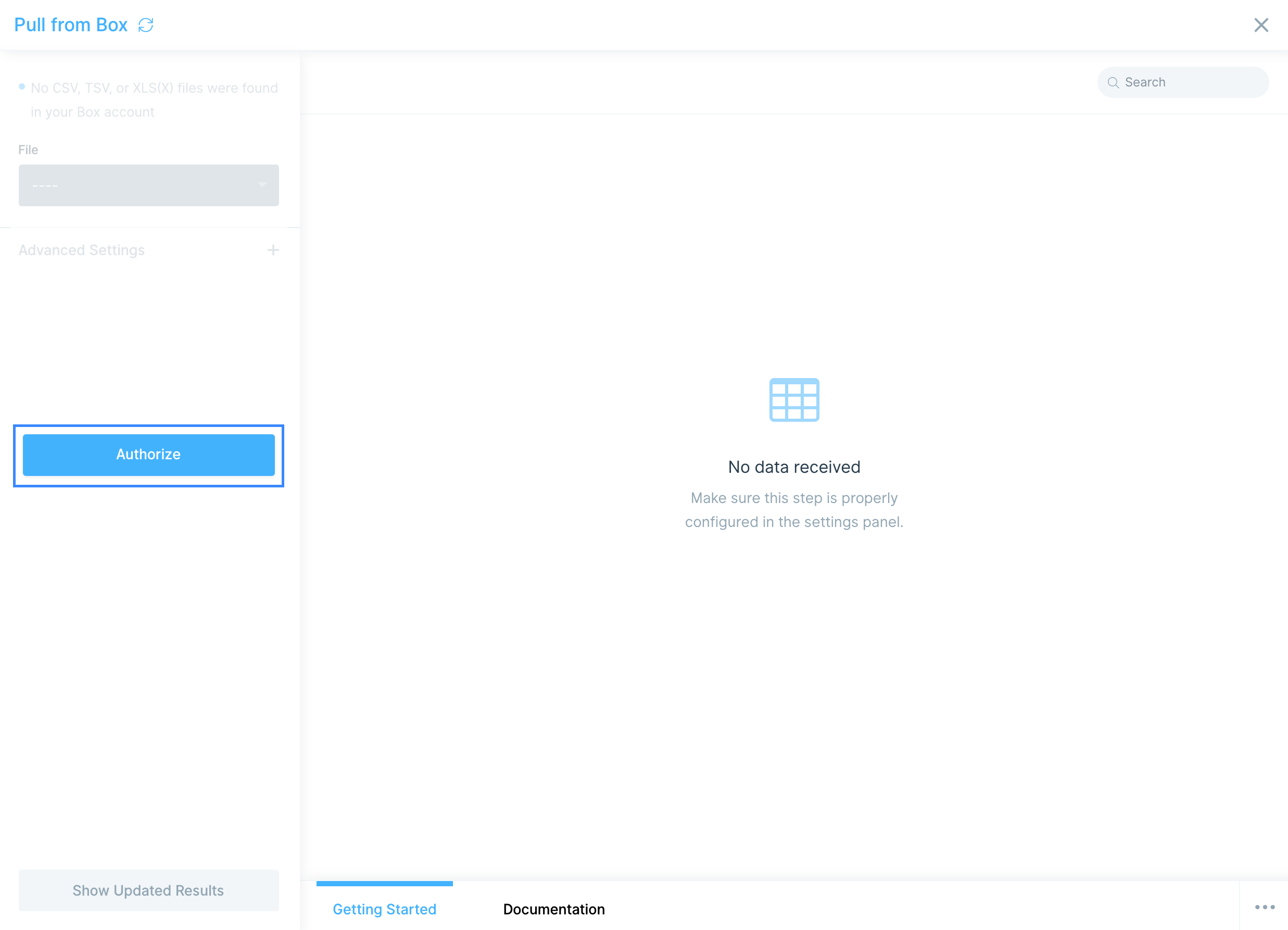

To connect your Box account to Parabola, select Authorize and follow the prompt to grant Parabola access to your Box files.

Custom settings

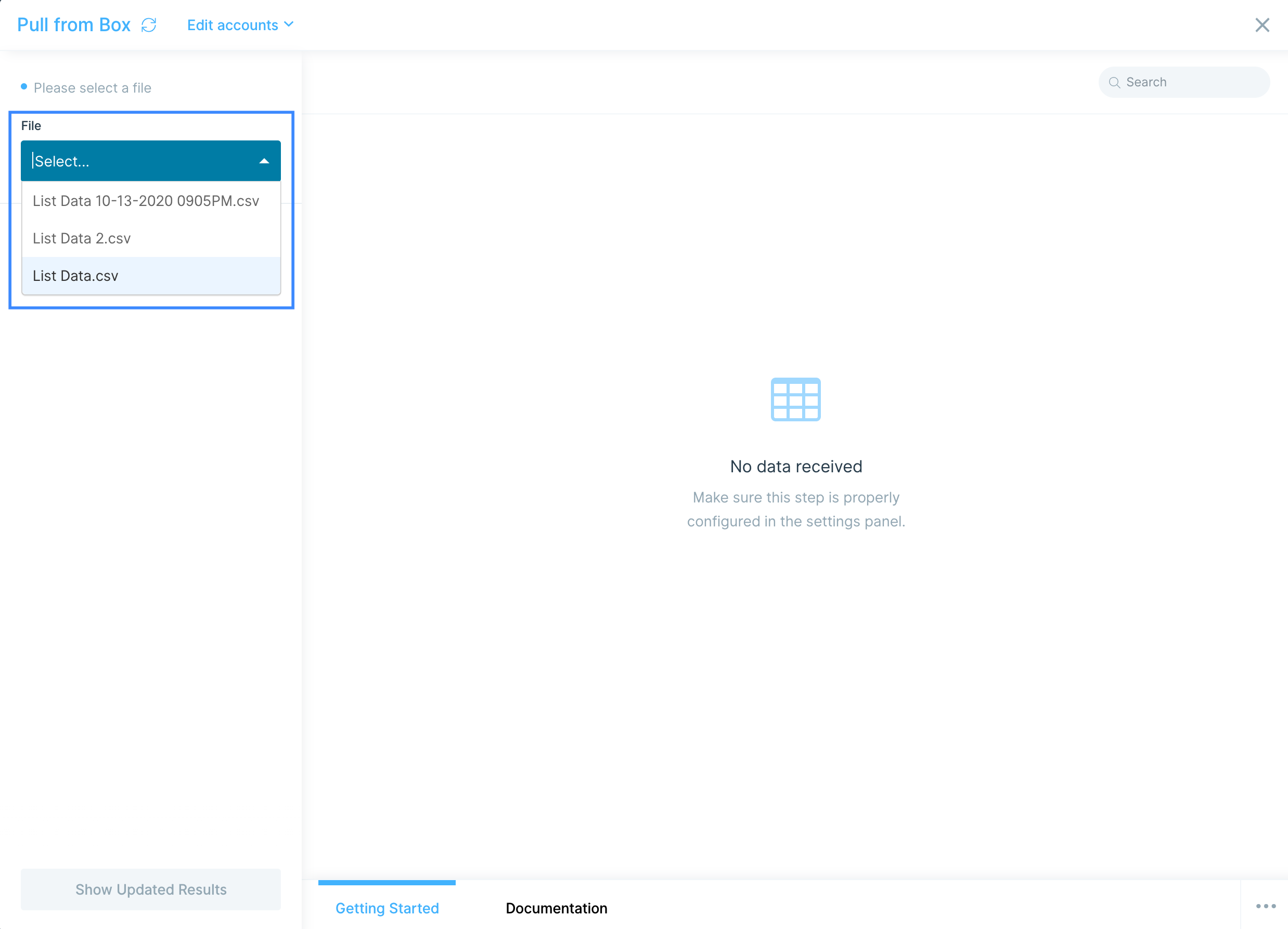

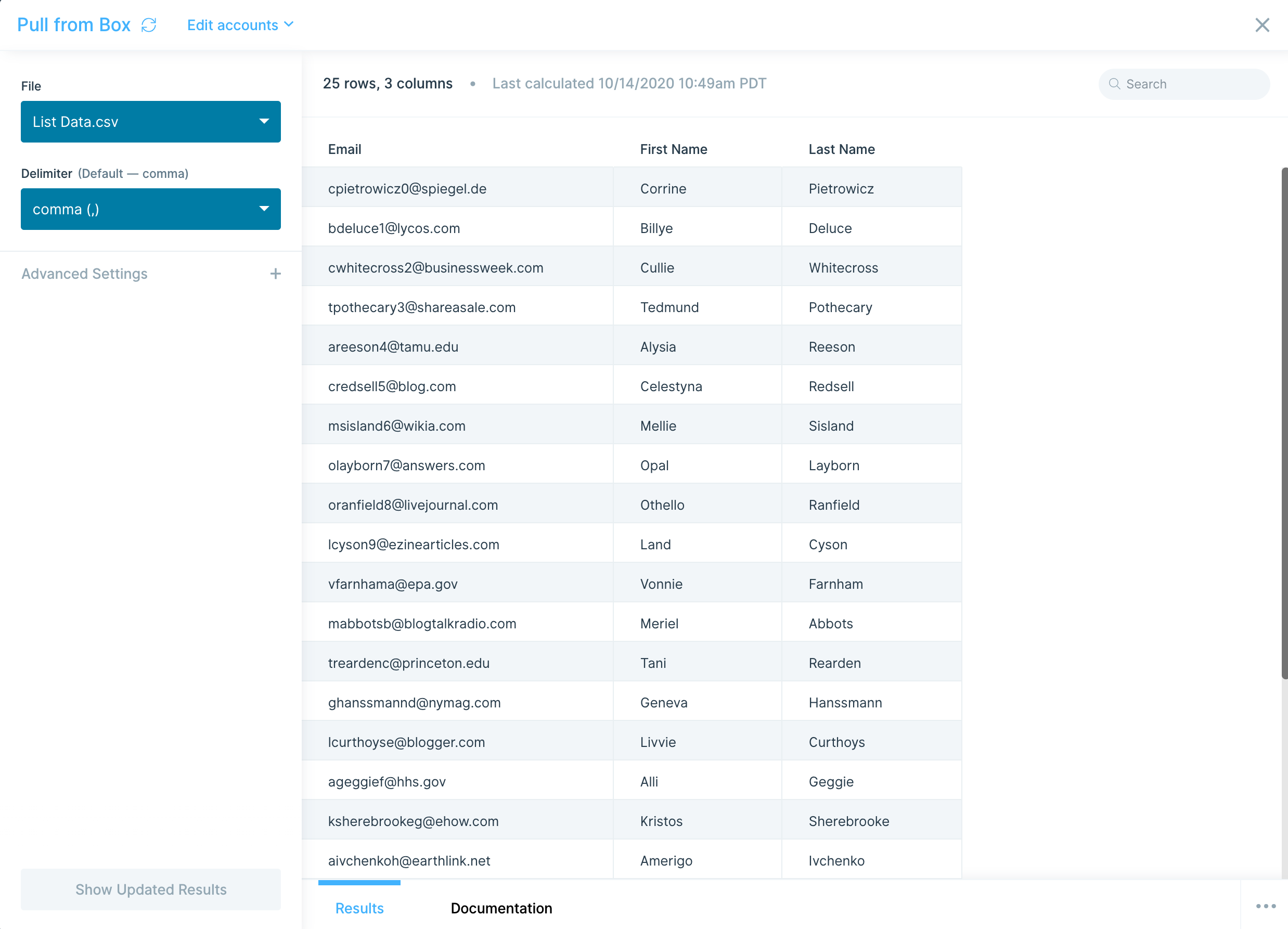

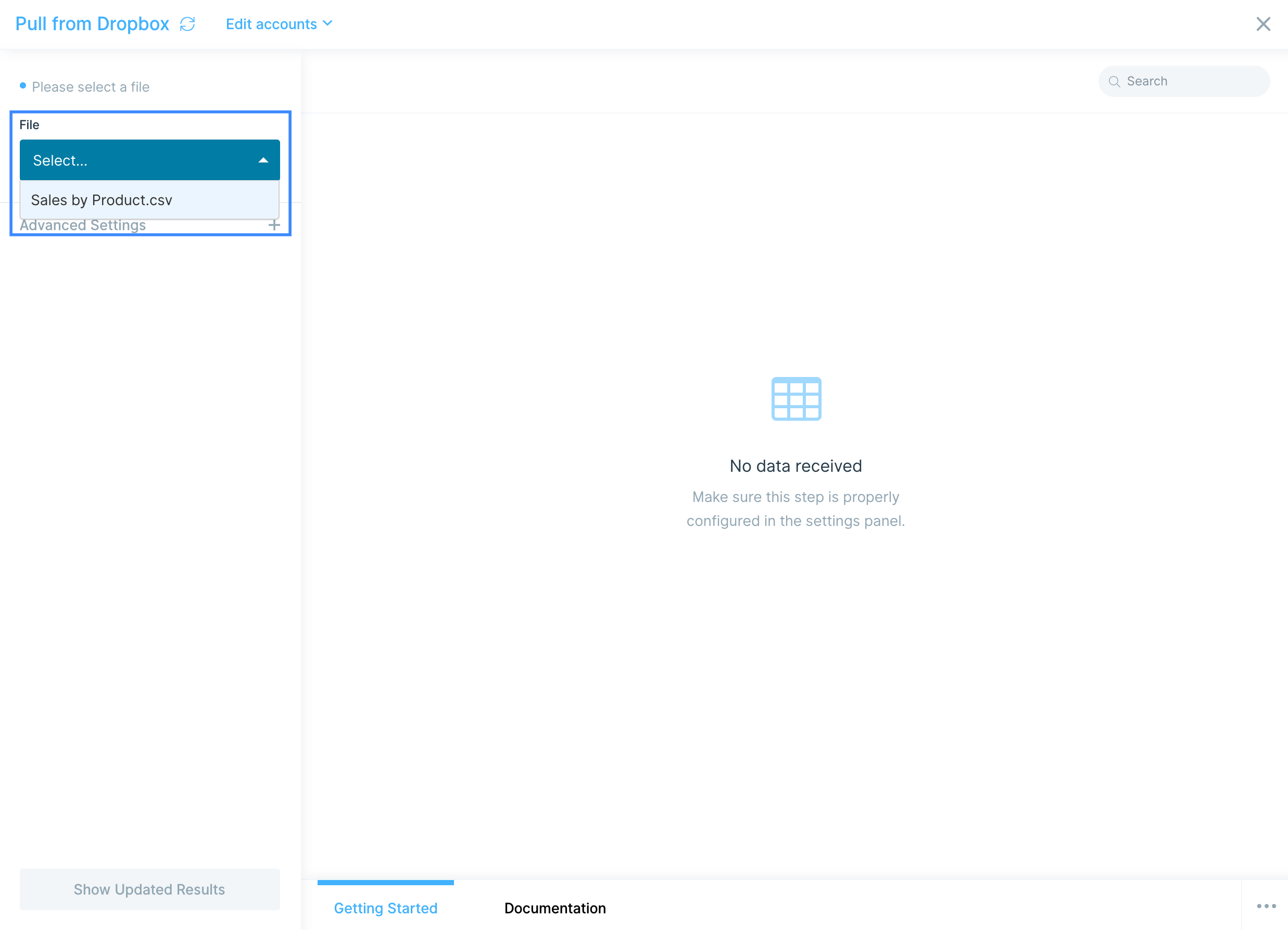

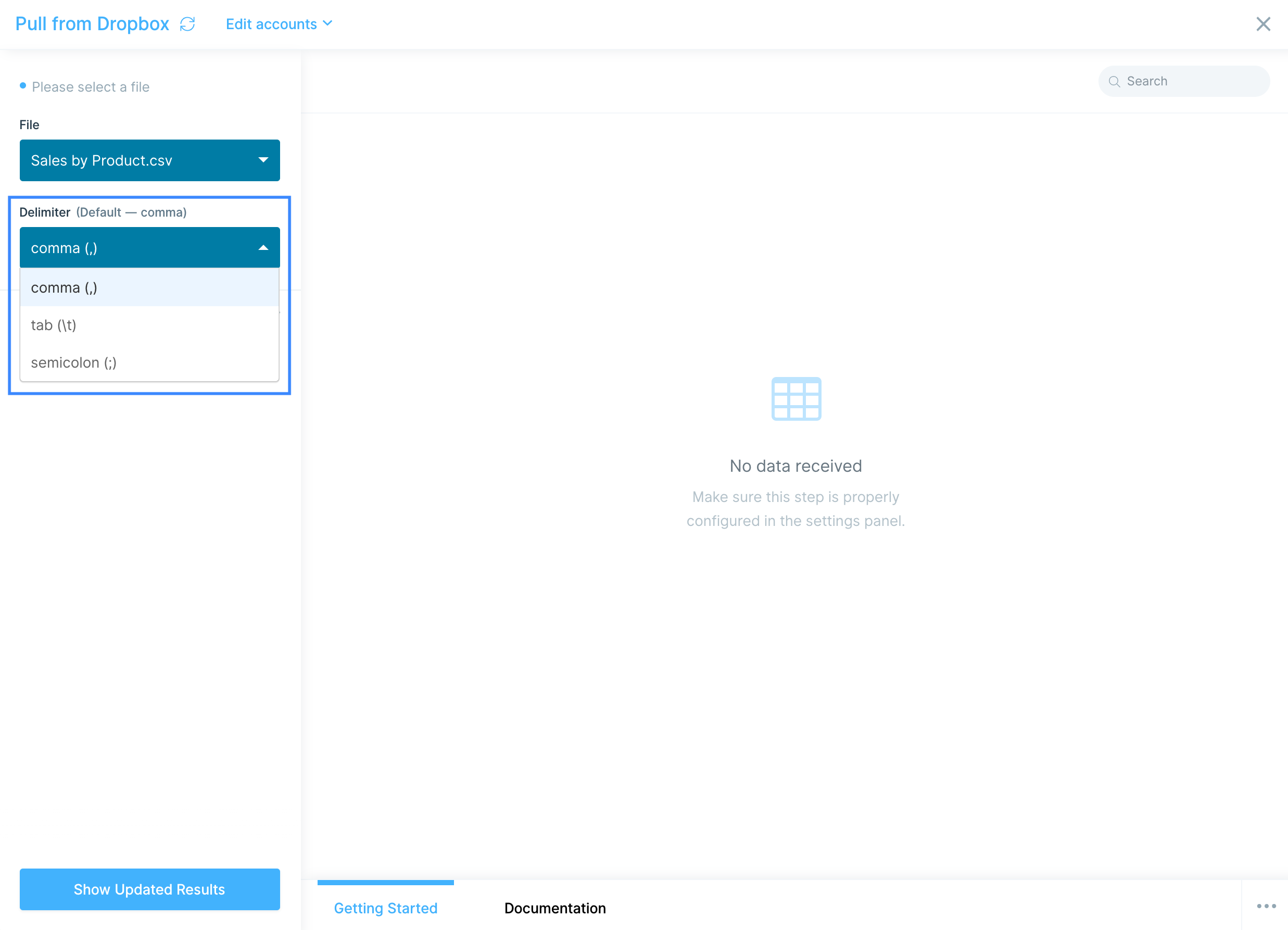

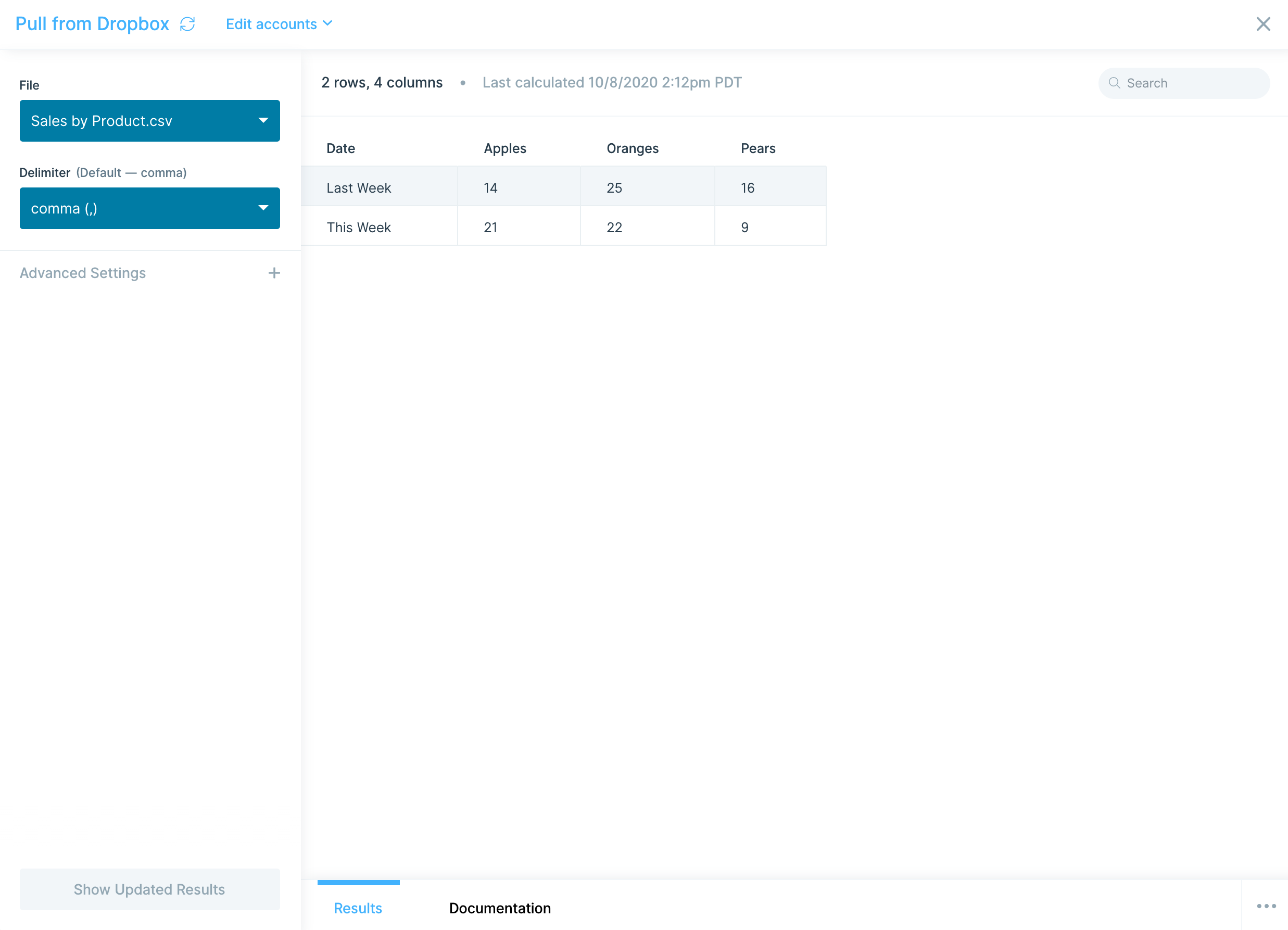

Once you have authorized your Box account, select your file in the File dropdown.

Additionally, you can tell Parabola if you're using a different delimiter, such as tab (\t) or semicolon (;), by selecting in the Delimiter dropdown. By default, Parabola will use the standard comma (,) delimiter.

Helpful tips

- Any changes made to the selected Box file will be automatically synced with Parabola the next time you open or run your flow.

- Box's API runs on a 10 minute delay for newly created files. If your newly added Box file does not immediately appear in the File dropdown, check back in a few minutes.

The Send to Box step gives you the ability to create a new or update an existing file in your Box account.

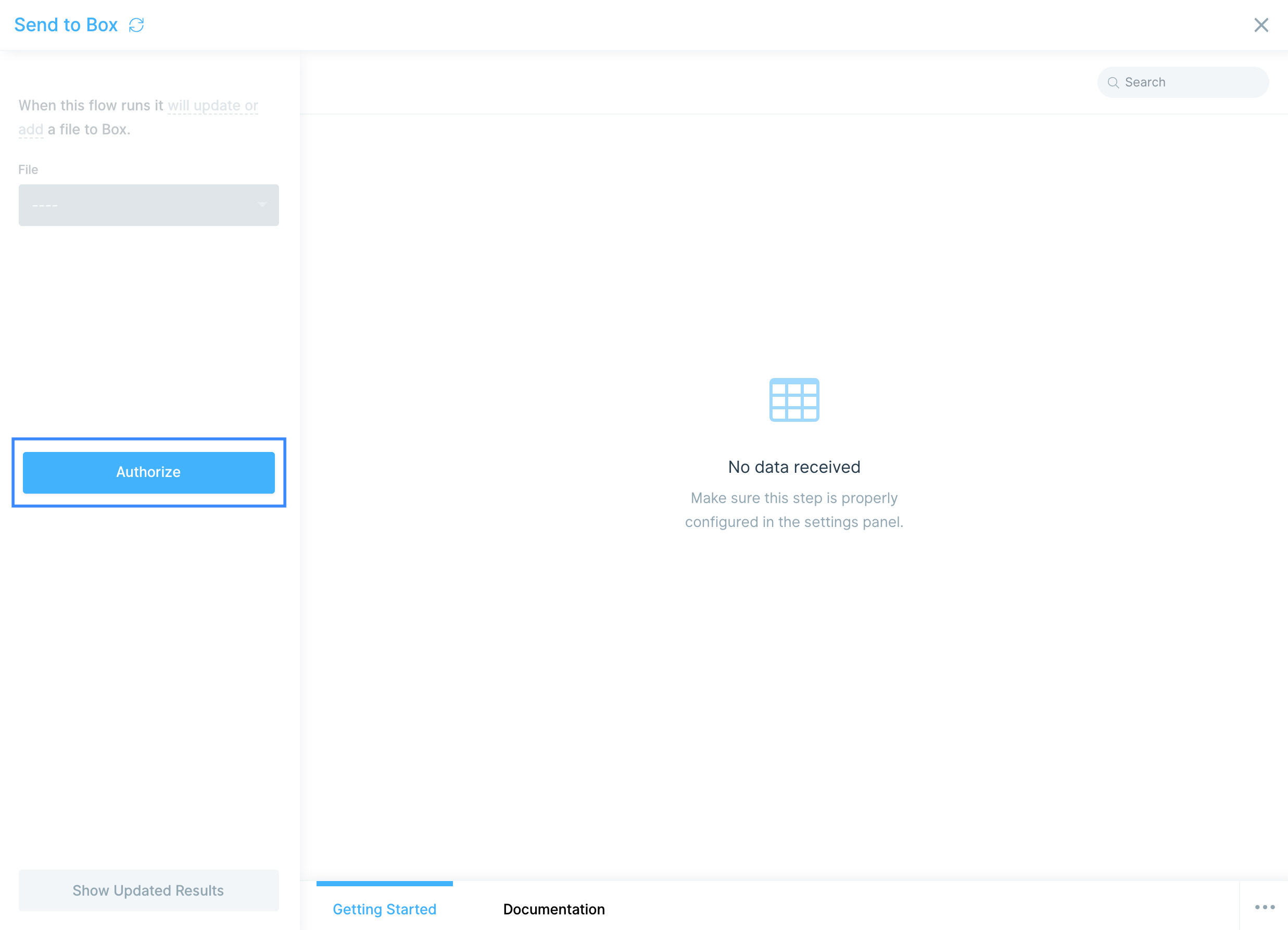

Connect your Box account

To connect your Box account to Parabola, select Authorize and follow the prompt to grant Parabola access to your Box files.

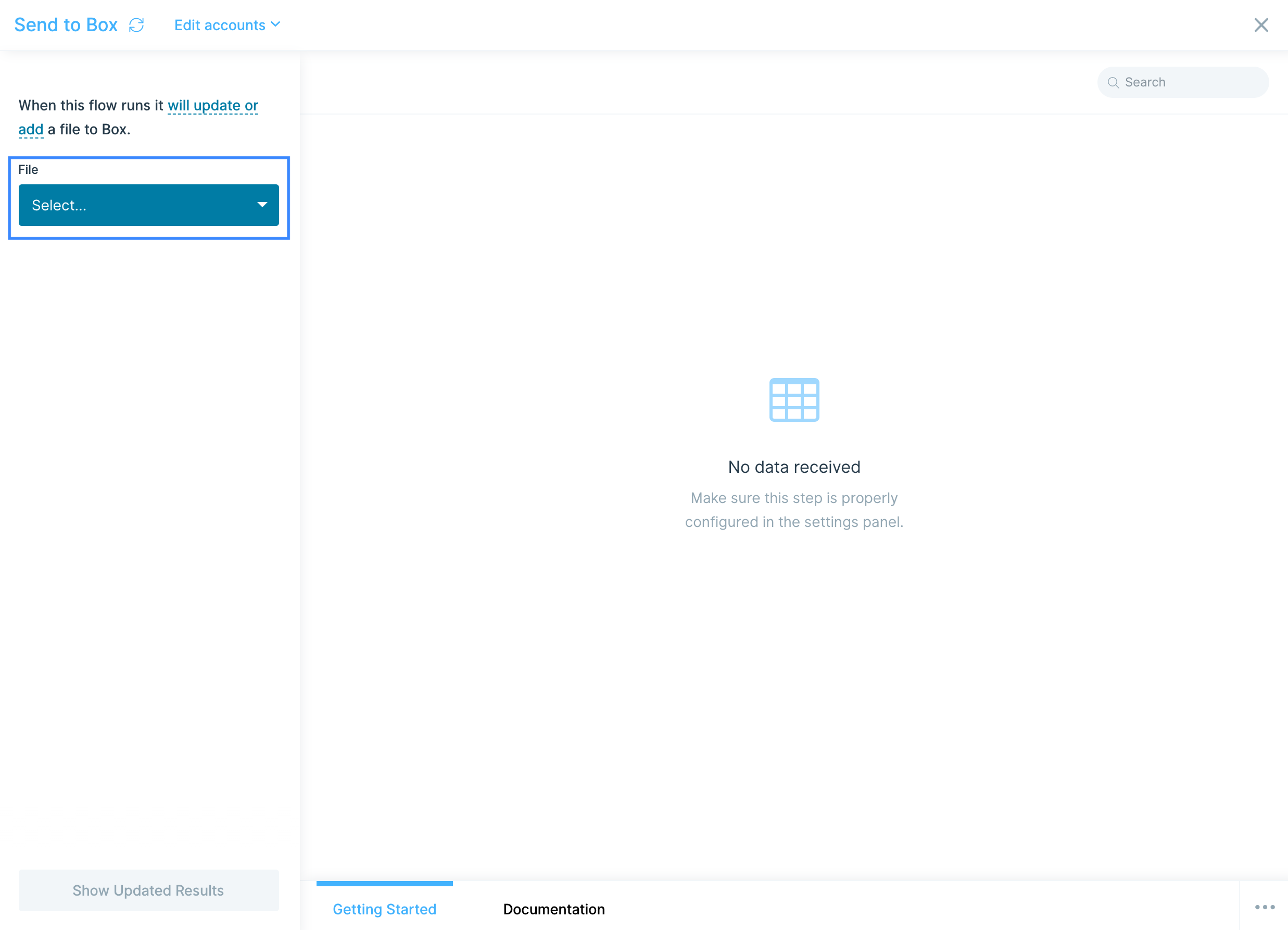

Custom settings

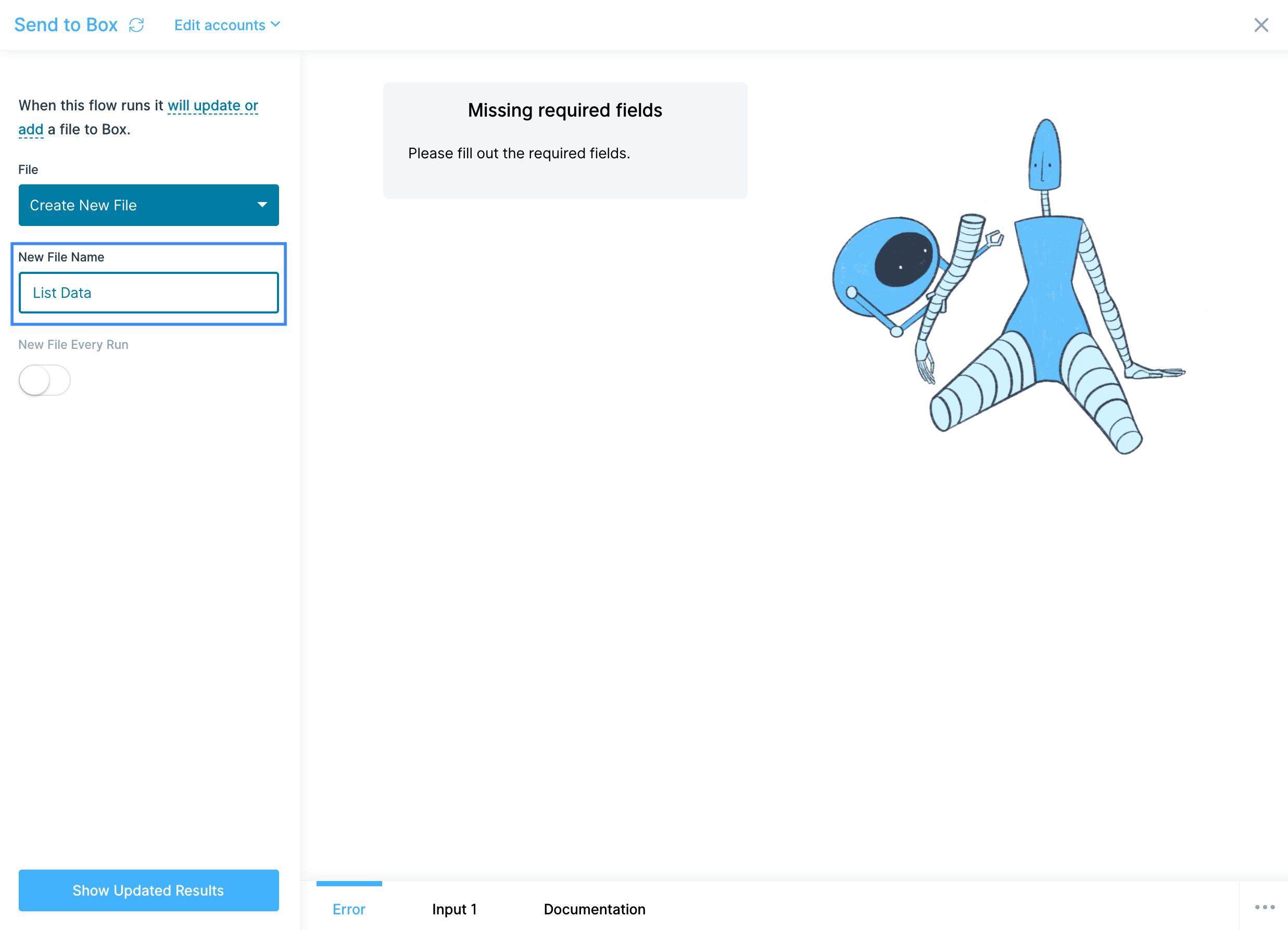

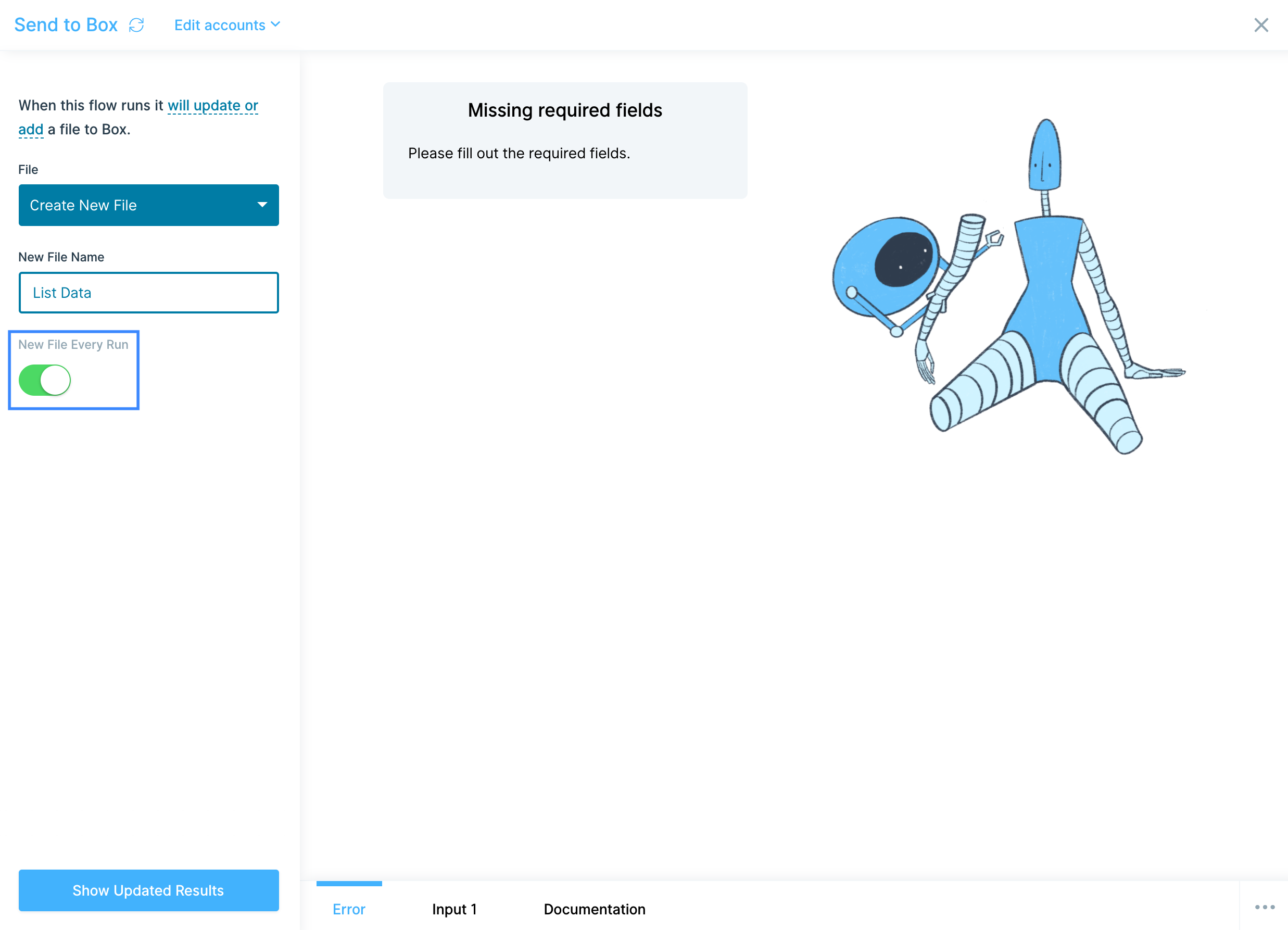

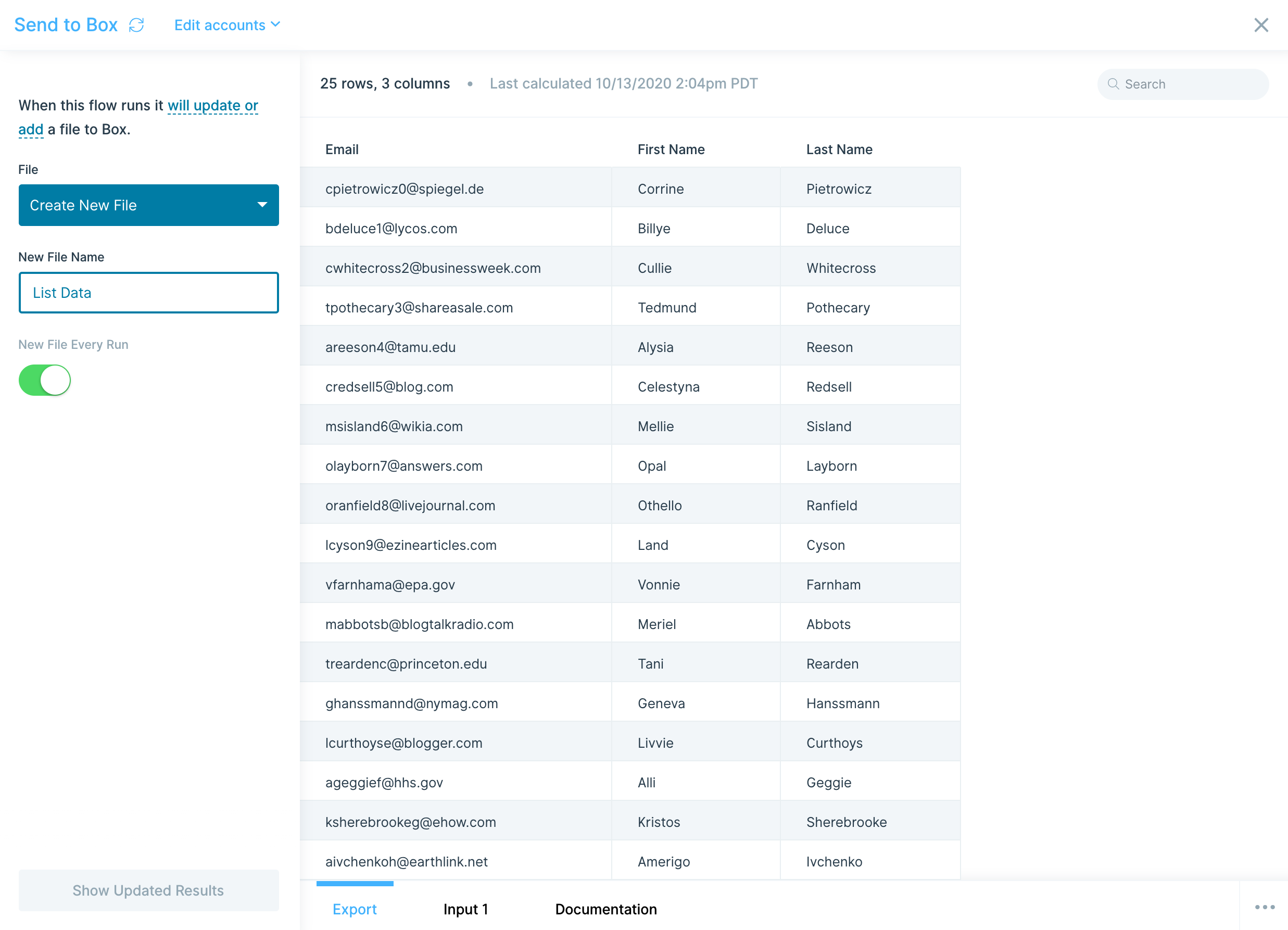

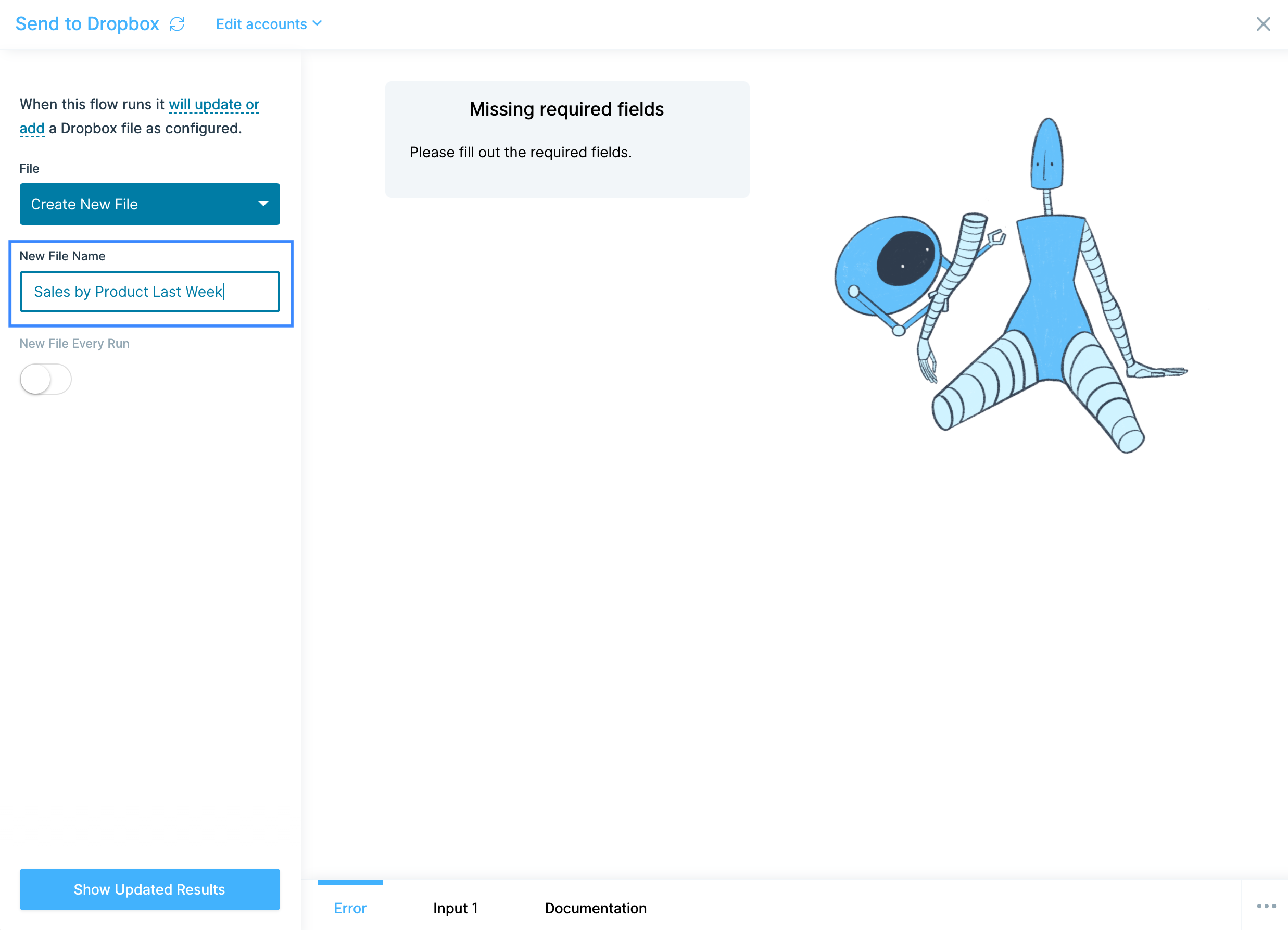

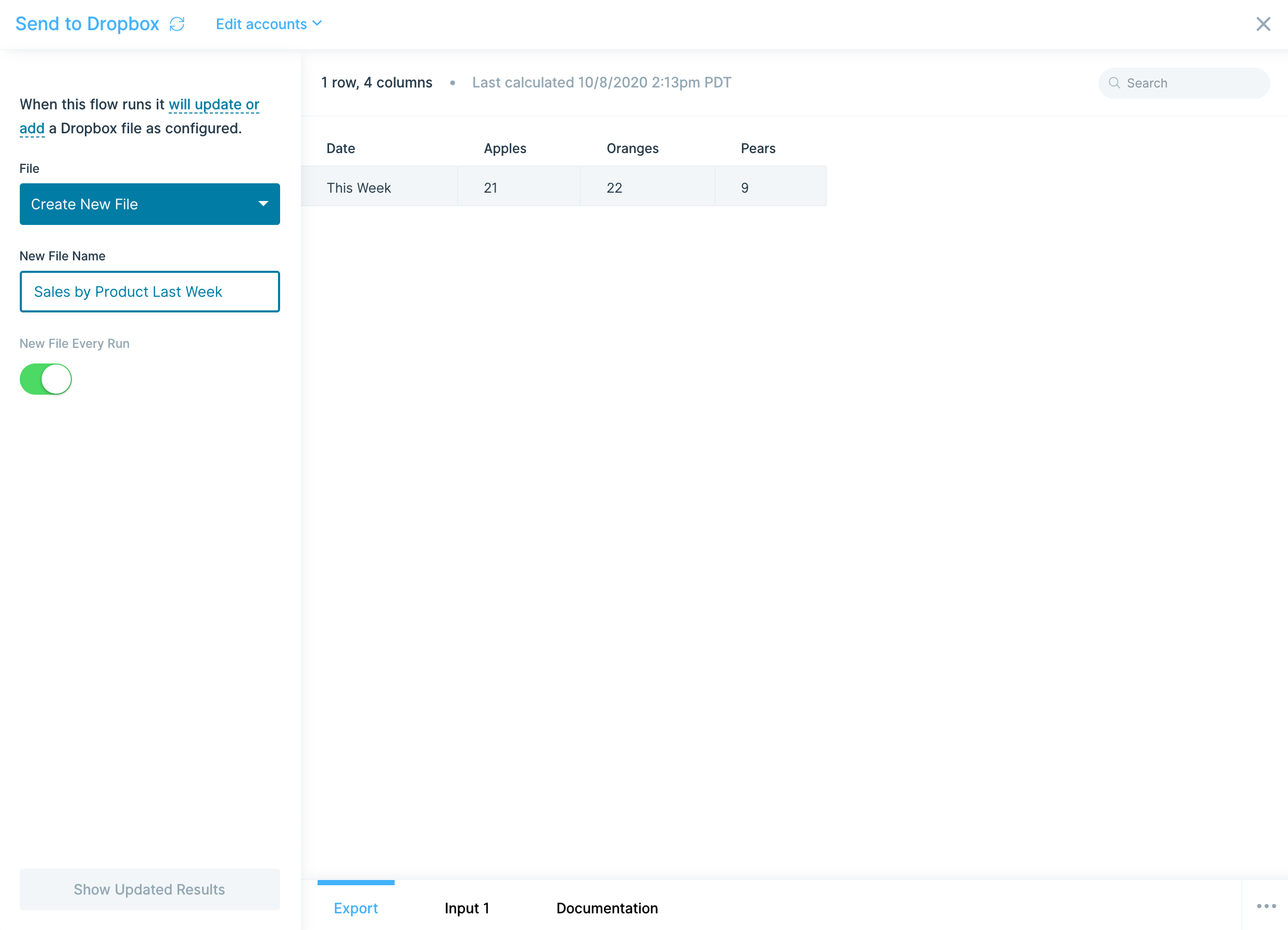

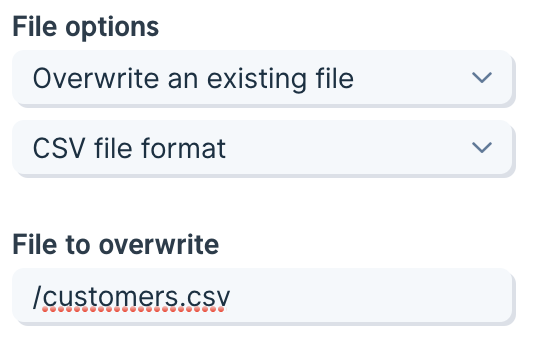

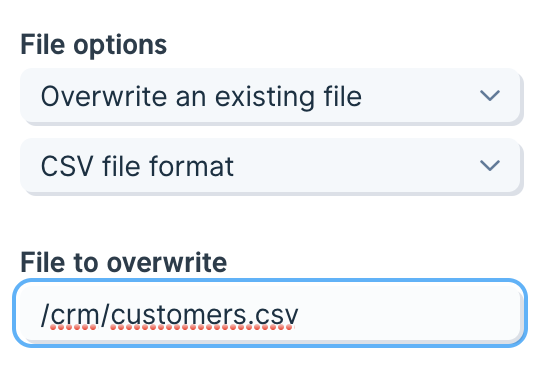

Select the File dropdown to choose if you want to overwrite an existing file or create a new file.

If creating a new file, give the file a name in the New File Name field.

You can also decide if this is a one-off creation, or if you'd like to create a new file every time your flow runs. If you choose to create a "New File Every Run", each new file will have a timestamp appended to the file name in Box.

Helpful tips

- Anyone with access to an existing file will be able to see and use the changed data.

- Box's API runs on a 10 minute delay for newly created files. If your newly added Box file does not immediately appear in the File dropdown, check back in a few minutes.

- Upload size using our Box step is limited to ~50MB. If you are at or nearing this file size limit, we recommend splitting the file to prevent errors!

- As of today, users cannot specify the destination folder for the Send to Box step.

Integration:

CSV file

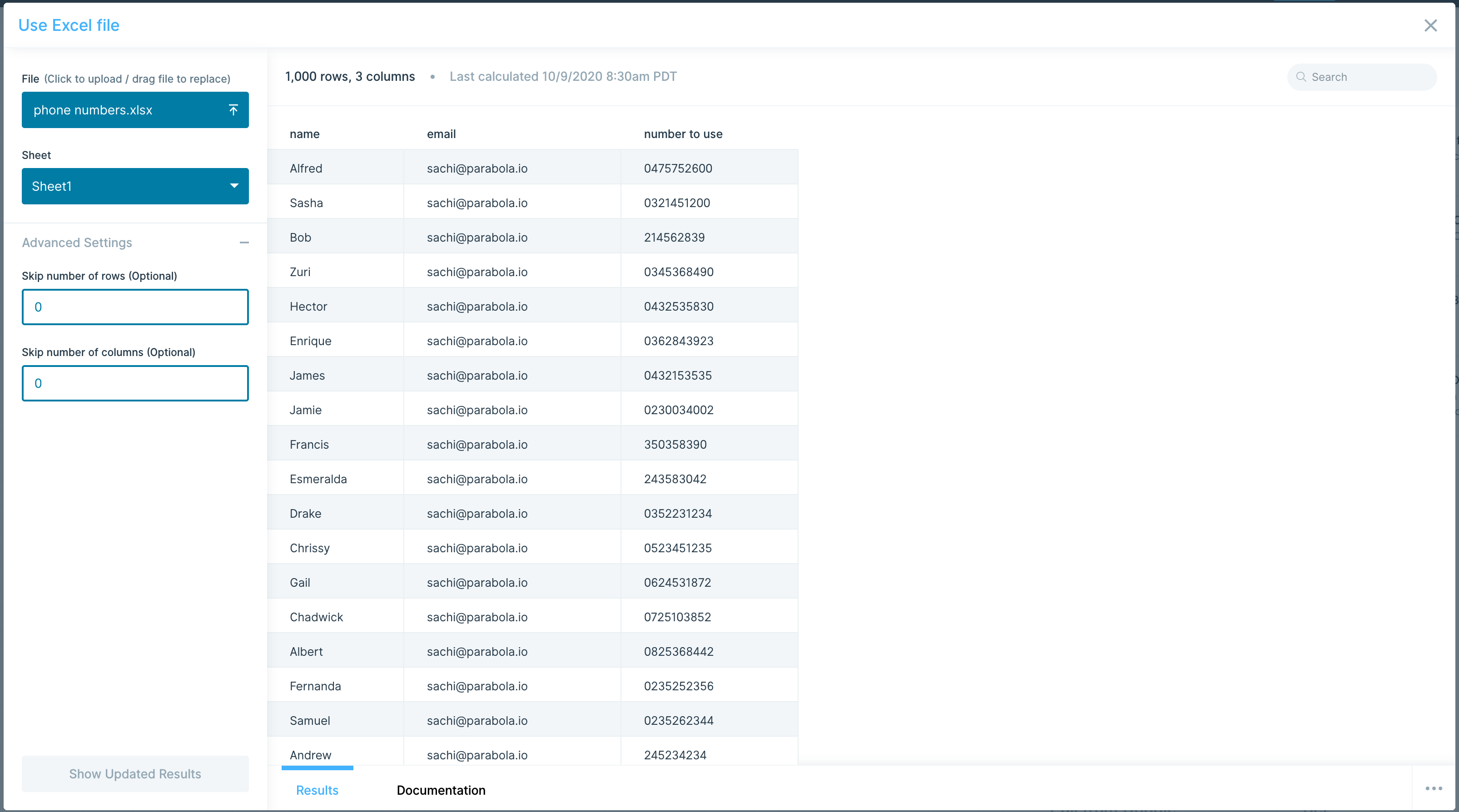

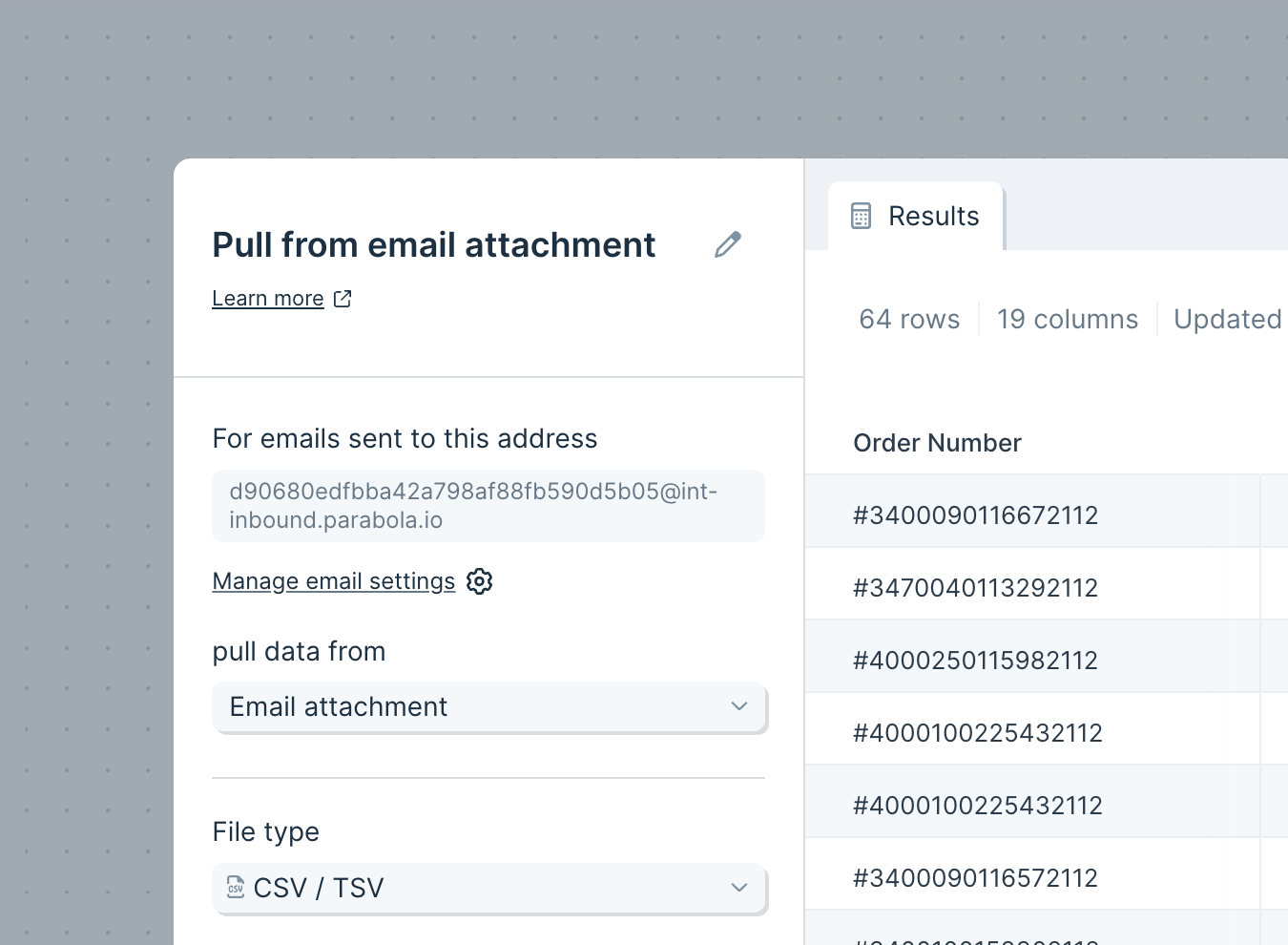

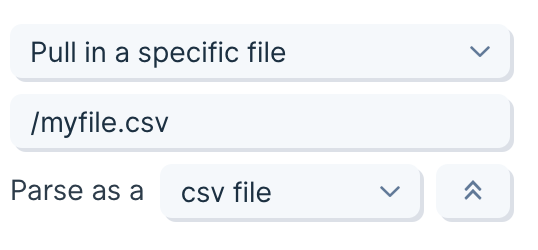

The Use CSV file step enables you to pull in tabular data from a CSV, TSV, or a semicolon delimited file.

Custom Settings

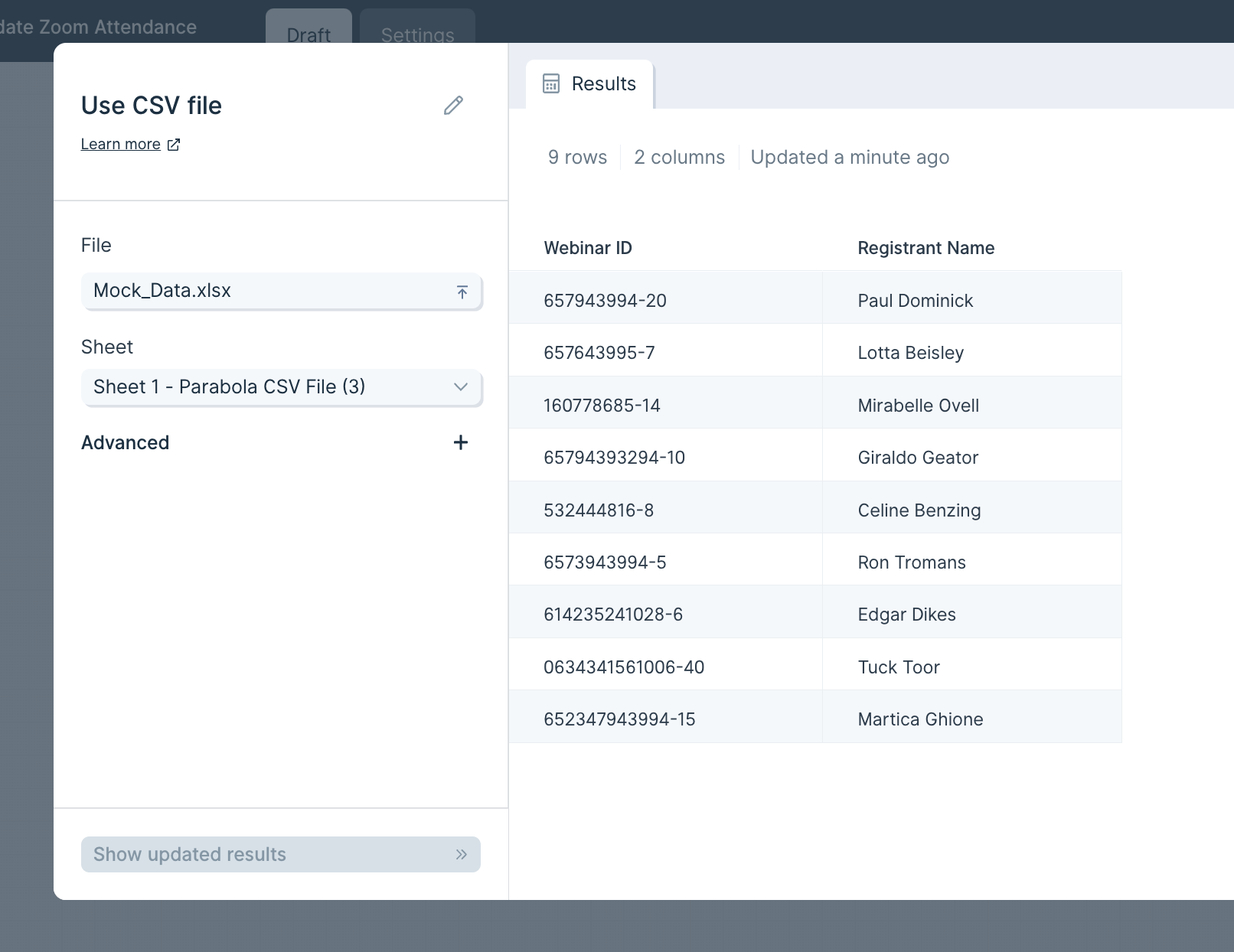

The first thing to do when using this step is to either drag a file into the outlined box or select "Click to upload a file".

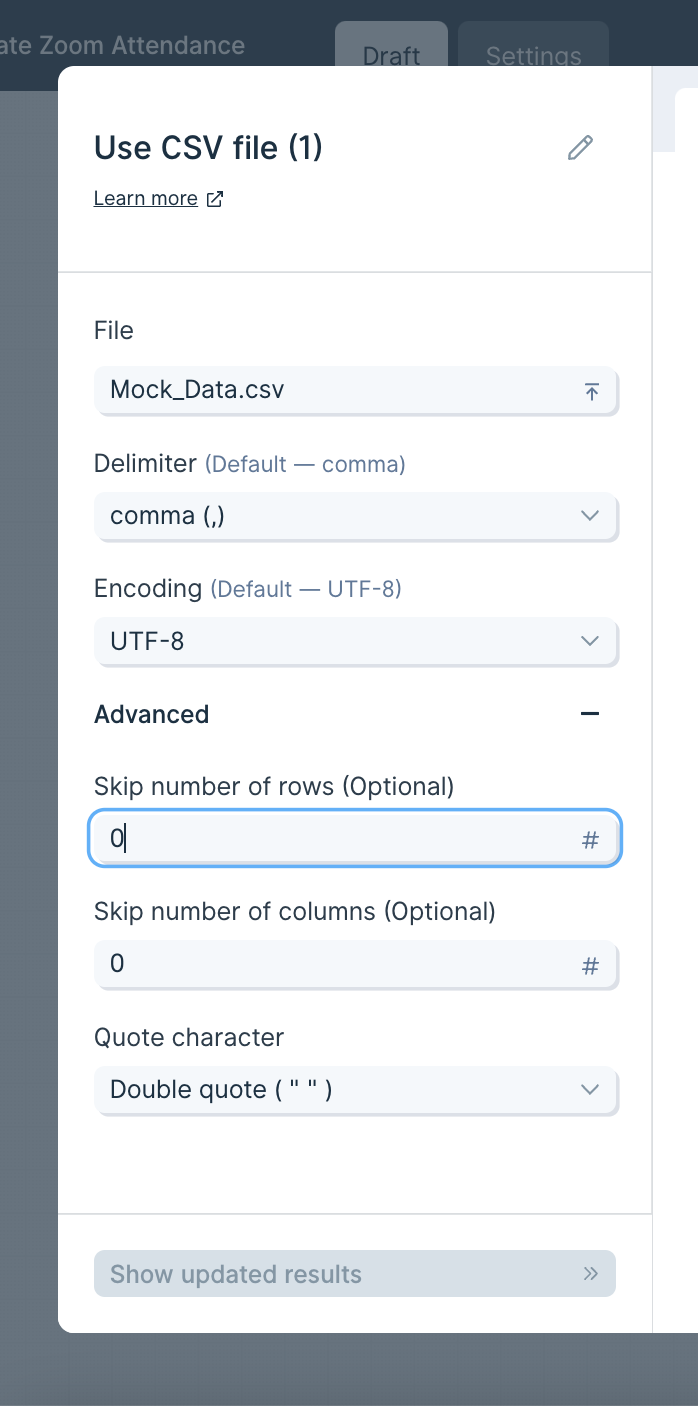

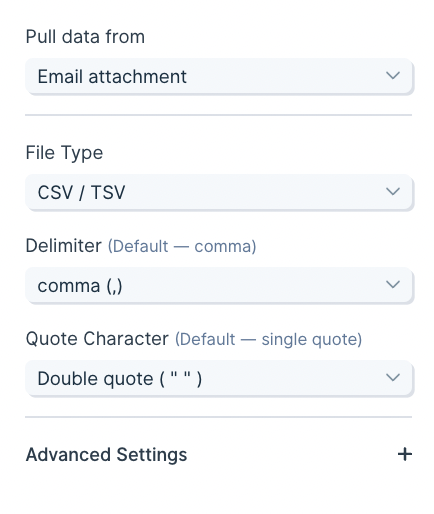

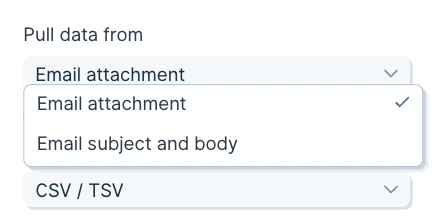

Once the file is uploaded and displayed in the Results tab, you'll see two settings on the lefthand side: File and Delimiter. You can click File to upload a different file. Parabola will default to using a comma delimiter, but you can always update the appropriate delimiter for your file by clicking on the Delimiter dropdown. Comma , , tab \t, and semi-colon ; are the three delimiter types we support.

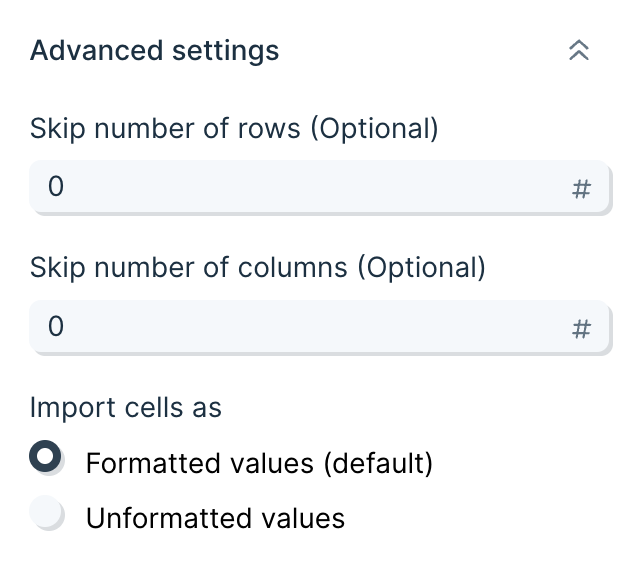

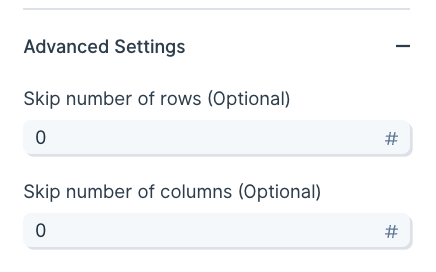

In the "Advanced Settings", you can set a number of rows and a number of columns to skip when importing your data. This will skip rows from top-down and columns from left-to-right. You can also select a Quote Character which will help make sure data with commas in the values/cells don’t disrupt the CSV structure.

Helpful Tips

- Security: the files you upload through this step are stored by Parabola. We store the data as a convenience, so that the next time you open the flow, the data is still loaded into it. Your data is stored securely in an Amazon S3 Bucket, and all connections are established over SSL and encrypted.

- Limitations: Parabola can't pull in updates to this file from your computer automatically, so you must manually upload the file's updates if you change the original file. Formatting and formulas from a file will not be preserved. When you upload this file, all formulas are converted to their value and formatting is stripped.

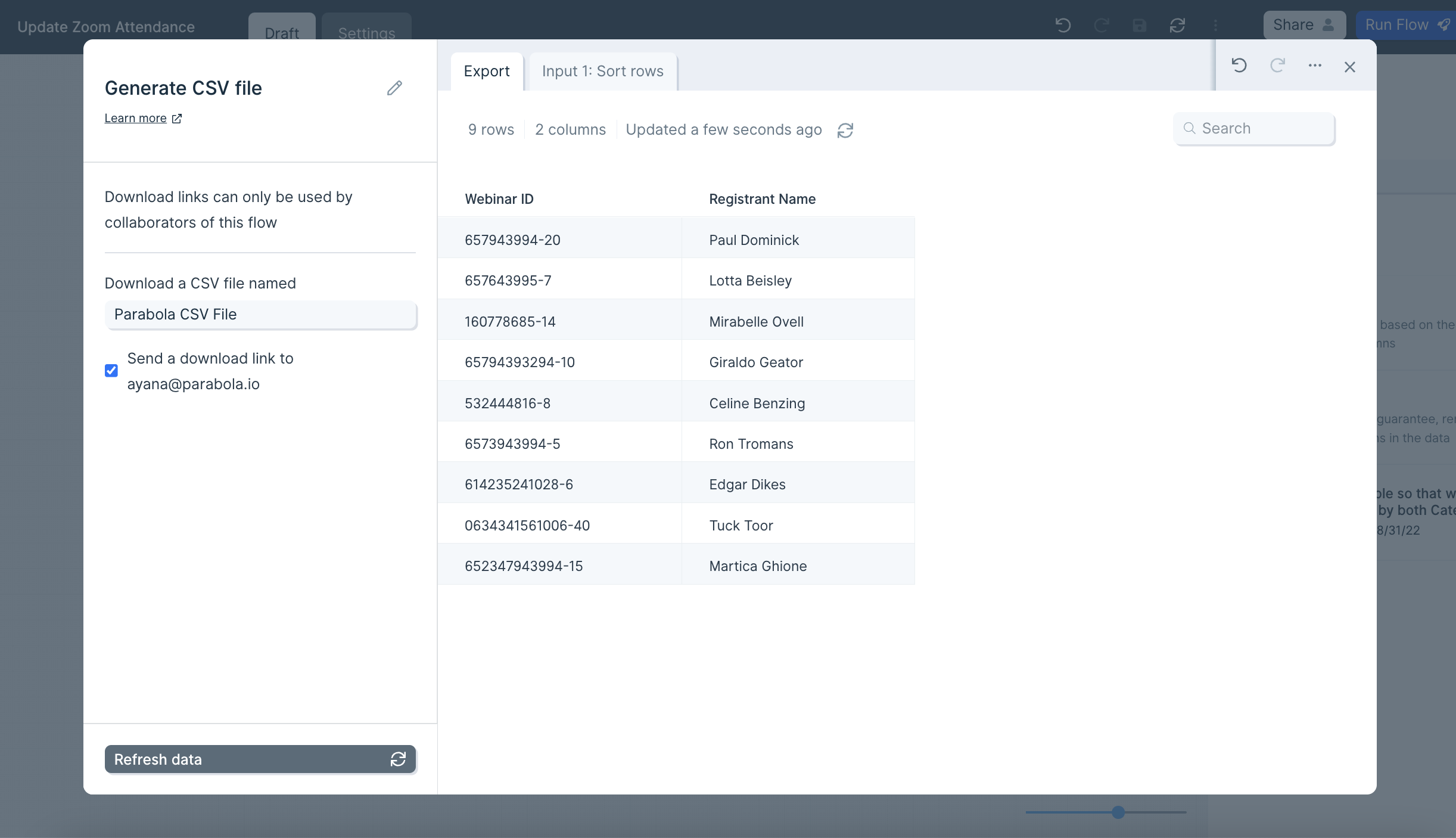

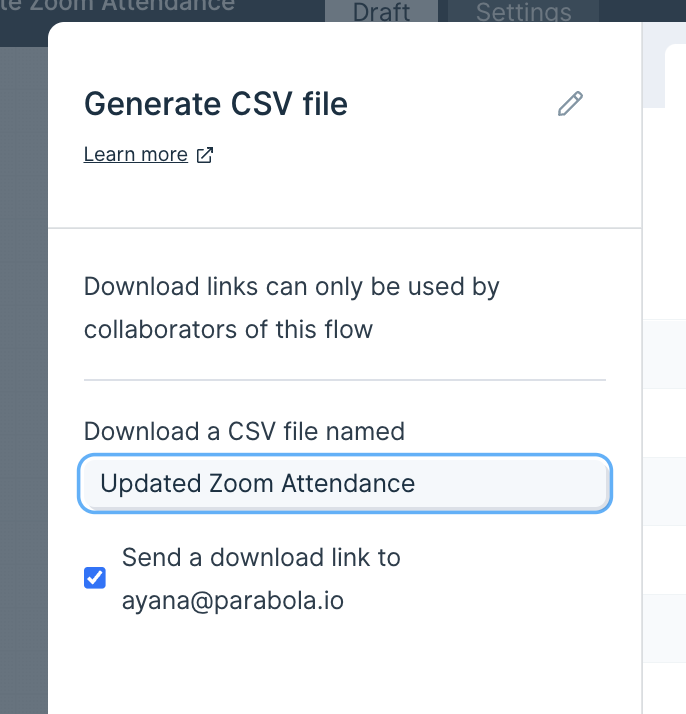

The "Generate CSV file" step allows you to export tabular data as a CSV file. You can use it to create custom datasets from various sources within your Flow. Once the Flow run is complete, the CSV file can be downloaded from the Flow’s Run History. You can also configure the step to email a download link to the Flow owner.

Custom Settings

Once you connect your Flow to this export step, it will display a preview of the tabular data to be exported.

The name of the generated file will match the step’s title. To rename your custom dataset file, simply double-click the step title and enter a new name.

After publishing and running your Flow, you can download the generated CSV file from the Flow’s Run History panel. Past CSVs created by this step are also accessible there.

You can optionally configure the step to email a download link to the Flow owner when the run is complete. Please note that this link will expire after 24 hours.

If the step receives zero rows of data as input, no CSV file will be generated and no download link will be emailed.

Helpful Tips

Security

Files generated by this step are stored by Parabola for your convenience. This allows the data to be reloaded the next time you open the Flow. Your data is stored securely in an Amazon S3 bucket, with all connections established over SSL and encrypted.

Limitations

This step supports only one input source at a time.

If your Flow includes multiple branches or datasets, you'll need to connect each one to its own Generate CSV file step to export them separately.

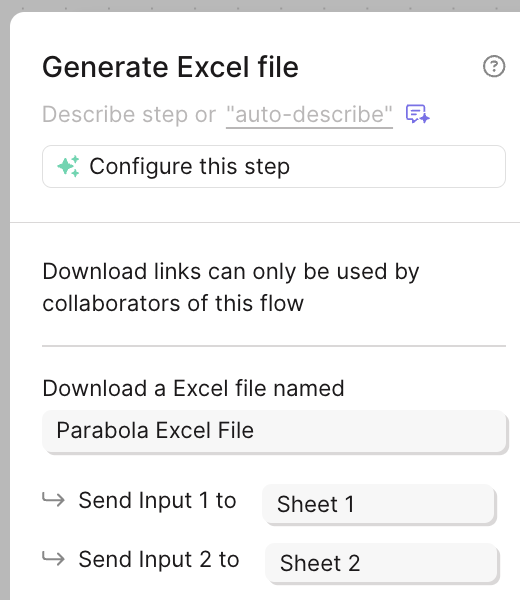

Alternatively, consider using the "Generate Excel file" step, which allows multiple inputs and creates a single Excel file with each input as a separate tab.

Integration:

Cin7

How to authenticate

Cin7 Core uses API Key authentication with two required credentials: an Account ID and an API Application Key.

Creating API credentials in Cin7 Core

- Log in to your Cin7 Core account at https://inventory.dearsystems.com

- Navigate to Settings, then go to Integrations & API > Cin7 Core API (or go directly to https://inventory.dearsystems.com/ExternalAPI)

- Click the plus icon or Add API Connection button to create a new application

- Give your application a descriptive name (e.g., "Parabola Integration")

- Once created, you'll see two credentials:

- Account ID (also called api-auth-accountid)

- API Application Key (also called api-auth-applicationkey)

- Copy both values and store them securely

Tip: Each company you have access to in Cin7 Core will have a different Account ID. You can create multiple API Applications for the same account, which is useful for tracking usage across different integrations.

Note: Your Account ID and API Application Key are equivalent to a login and password. Keep them secret and never share them publicly.

Connecting in Parabola

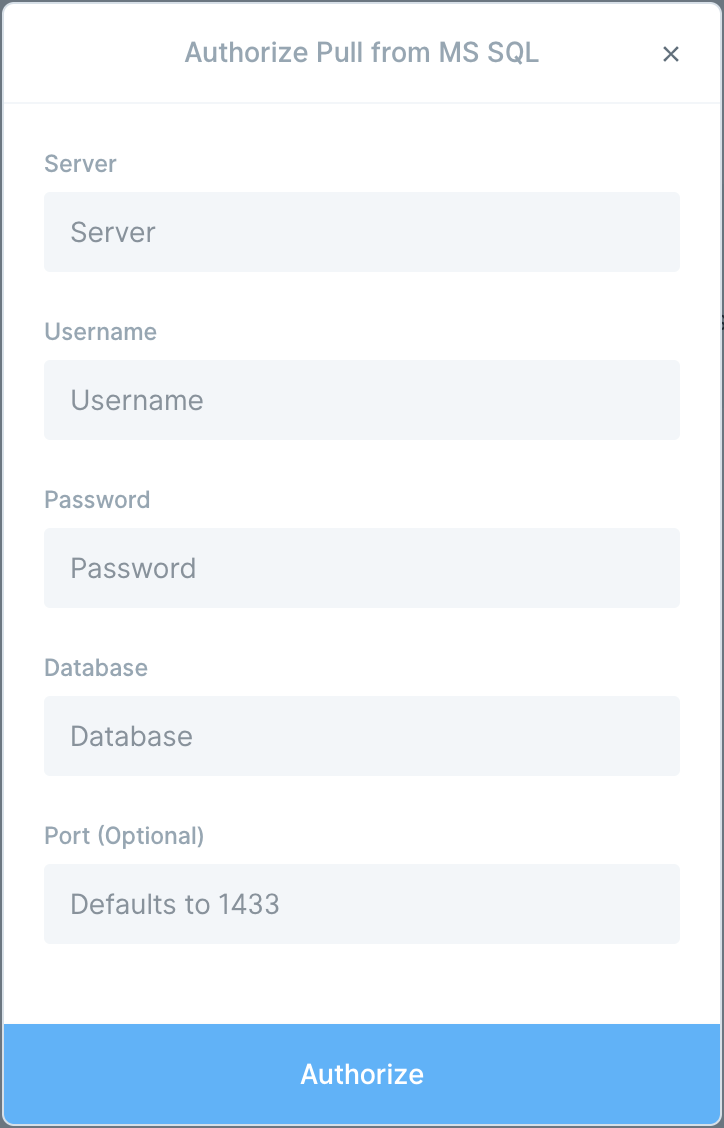

- In your Parabola flow, add a Pull from Cin7 step and select the “Core” service.

- Click Authorize and you'll be prompted to enter:

- Give your connection a memorable name (e.g., "Production Cin7 Core Account")

- API Account ID - Your Cin7 Core Account ID

- API Application Key - Your API Application Key

Once connected, you can select from Cin7 Core's available endpoints to bring live data into your flow.

Available data

Using the Cin7 Core integration in Parabola, you can pull in a wide range of operational data:

- Products: Product master data including SKUs, names, descriptions, pricing, categories, suppliers, inventory attributes, and product families. Access product availability across locations and markup pricing details.

- Sales and Orders: Complete sales order information including customer details, line items, quantities, pricing, order status, fulfillment details, shipments, invoices, credit notes, and payments.

- Purchases: Purchase order data with supplier information, line items, costs, receiving status, quantities, invoices, credit notes, and payment records.

- Customers: Customer records with contact information, addresses, payment terms, tax rules, price tiers, credit limits, tags, and custom attributes.

- Suppliers: Supplier details including contact information, addresses, payment terms, currencies, and related purchase history.

- Locations: Warehouse and location data including addresses, active status, and location-specific inventory settings.

Common use cases

- Reconcile inventory levels from Cin7 Core, Shopify, Amazon, and other sales channels into a single source of truth. Automatically flag discrepancies, low stock levels, or oversell risks across locations.

- Track purchase order status from creation through receiving and invoicing. Analyze supplier lead times, identify delayed shipments, and automate supplier follow-ups when orders fall behind schedule.

- Monitor sales orders from placement through picking, packing, and shipment. Calculate fulfillment times, flag orders at risk of missing delivery SLAs, and generate real-time fulfillment dashboards for operations teams.

- Reconcile purchase orders, receipts (stock received), and supplier invoices to catch pricing discrepancies, quantity variances, and billing errors before payment.

- Combine inventory data with financial transactions to calculate inventory value by location, track cost of goods sold (COGS), and generate automated reports for finance teams and accounting systems.

Tips for using Parabola with Cin7 Core

- Schedule your flow based on business needs: Set up daily or hourly runs for inventory reconciliation and order tracking. For financial reporting and purchase order monitoring, weekly schedules may be sufficient.

- Join related data for complete visibility: Combine data from multiple endpoints to create comprehensive reports. For example, join Sales Orders with Shipments and Invoices to track the complete order lifecycle, or merge Products with Inventory Availability across multiple locations.

- Combine with other systems for end-to-end automation: Integrate Cin7 Core data with your accounting software (Xero, QuickBooks), 3PLs, carrier tracking systems, and BI tools to build comprehensive operational dashboards and automated reconciliation workflows.

- Document your logic with cards: Add cards to your Parabola flow to explain business rules, calculations, and data transformations. This makes it easier for your team to maintain and audit flows over time.

- Export results back to systems or create dashboards: Push processed data to Google Sheets for team visibility, update records in your ERP, or create custom dashboards that refresh automatically with the latest Cin7 Core data.

By connecting Cin7 Core with Parabola, you transform your inventory and order management data into powerful automation—enabling real-time visibility, faster reconciliations, and smarter operations across your entire supply chain.

How to authenticate

Cin7 Omni uses Basic Authentication with a username and API key. You'll need to generate an API key from your Cin7 Omni account to connect.

Creating an API key in Cin7 Omni

- Log in to Cin7 Omni as an administrator

- From the user menu in the navigation, open Settings

- Under Integrations & API, select API v1

- Here you'll see your API Username

- Select Add New API Connection

- If you receive an error about connection limits, contact Cin7 Omni support to add your connection

- Give your connection a descriptive App Name (we suggest including "Parabola" to identify this connection) and configure the permissions for this connection based on what data you want Parabola to access

- Once created, copy your Connection Key (API Key) and store it somewhere secure

Connecting in Parabola

- In your Parabola flow, add a Pull from Cin7 step and select the “Omni” service.

- Click Authorize and enter your credentials when prompted:

- Username: Your API Username from Cin7 Omni

- Password: Your Connection Key (API Key)

Once authenticated, select the resource you want to pull (Orders, Products, Inventory, etc.)

Available data

Using the Cin7 Omni integration in Parabola, you can pull the following operational data:

- Branch Transfers: Inter-warehouse transfer records showing inventory movements between locations, including line items, quantities transferred, dispatch dates, and approval status. Perfect for tracking stock redistribution across your fulfillment network.

- Products: Complete product catalog including SKUs, names, descriptions, pricing, dimensions, weights, and cost information. Pull product attributes, categorization, and custom fields to maintain synchronized product data across systems.

- Product Options: Product variant data including size, color, and other option combinations. Retrieve pricing tiers, SKU variants, and inventory levels for each product option to manage complex product catalogs.

- Purchase Orders: Inbound purchase order data with supplier information, order details, line items, quantities, expected costs, and receiving status. Track what's been ordered, what's in transit, and what's been received.

- Quotes: Sales quote records including customer information, quoted products, pricing, terms, and quote status. Monitor quotes from creation through approval and conversion to sales orders.

- Sales Orders: Outbound order information with customer details, order lines, fulfillment status, shipping information, payment status, and order totals. Track orders from placement through fulfillment and delivery.

Common use cases

- Reconcile Cin7 inventory data with 3PL systems, Shopify, or Amazon to identify stock discrepancies, flag negative inventory, and maintain accuracy across all warehouses and sales channels.

- Track purchase orders against received quantities and expected costs to catch receiving discrepancies, supplier delays, or pricing variances before they impact your operations.

- Pull sales orders, branch transfers, and product data to create real-time dashboards showing fulfillment velocity, order status distribution, pending shipments, and warehouse utilization by location.

- Monitor inventory levels across all branches and trigger automated alerts when products fall below reorder thresholds. Combine with sales velocity data to predict stockouts before they happen.

- Consolidate sales orders and quotes across channels to analyze sales performance, quote-to-order conversion rates, average order values, and sales trends by product category or customer segment.

Tips for using Parabola with Cin7 Omni

- Schedule your flows based on operational needs: Run inventory reconciliations hourly during peak fulfillment periods, daily for overnight reporting, or weekly for performance analysis. Align your schedule with your team's workflow.

- Use filters to control data volume: Leverage date ranges, status filters, and branch-specific queries to pull only the data you need. This keeps your flows fast and focused on recent or relevant records.

- Join related data for complete context: Combine sales orders with product data to add descriptions and costs, or join purchase orders with branch transfers to track inventory from supplier to warehouse to fulfillment center.

- Create validation checks for data quality: Use filters and formulas to flag anomalies like orders missing shipping info, inventory adjustments without reason codes, or purchase orders with quantity mismatches between ordered and received.

- Set up alerts for critical thresholds: Configure Slack or email notifications when inventory drops below safety stock, when orders are stuck in processing longer than your SLA, or when purchase orders are overdue for receiving.

- Normalize IDs early in your flow: Standardize product SKUs, order references, and location identifiers at the beginning of your flow so downstream joins to other systems (Shopify, NetSuite, warehouse systems) are consistent and reliable.

By connecting Cin7 Omni with Parabola, you can automate the tedious manual work of exporting data, reconciling spreadsheets, and generating reports—freeing your operations team to focus on strategic decisions instead of data wrangling.

Integration:

Coupa

How to use Parabola's Coupa integration

Parabola's Coupa integration allows teams to pull invoices and purchase orders directly into automated workflows, enabling real-time spend analysis and cross-system reconciliations without manual data exports.

- Automatically retrieve invoice and purchase order data for reconciliation and reporting

- Combine Coupa financial data with ERP, warehouse, and sales channel information

- Track procurement performance and identify billing discrepancies

- Build automated dashboards showing spend by supplier, status, and time period

Learn more about Parabola's Coupa integration below.

How to authenticate

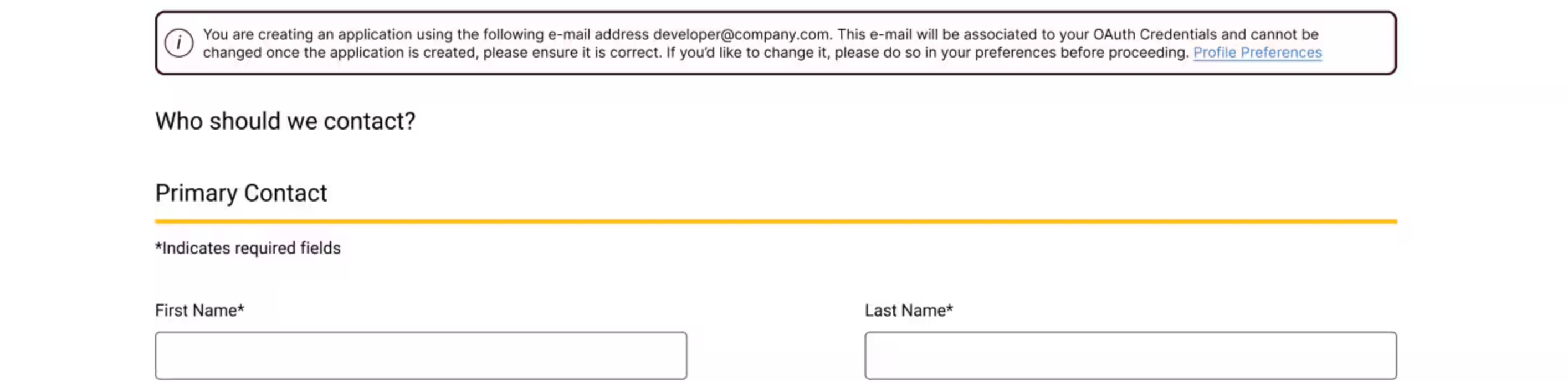

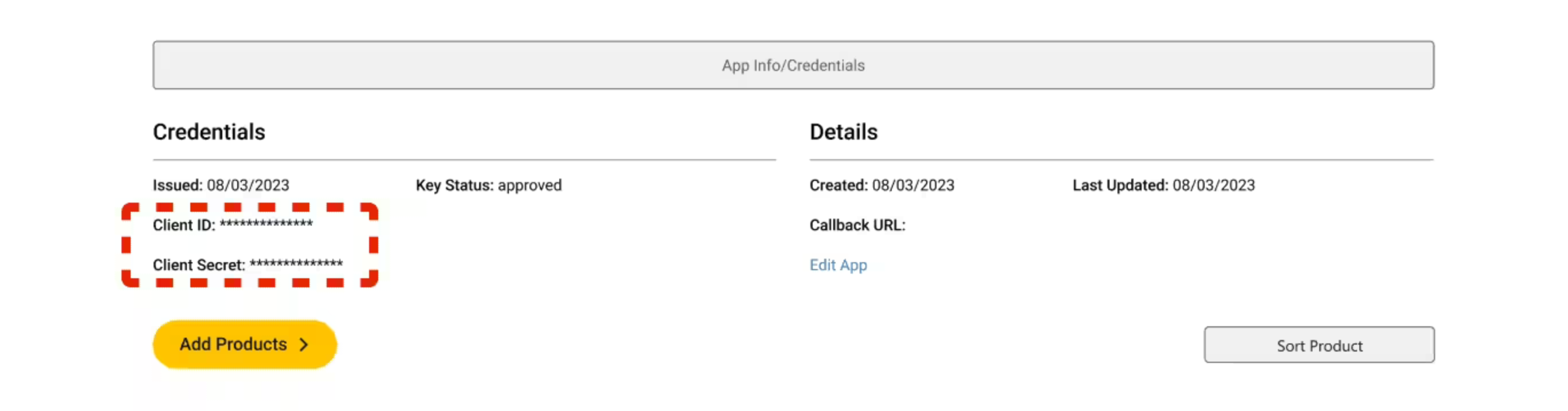

Coupa uses OAuth 2.0 Client Credentials for secure API access. To connect Coupa to Parabola, you'll need to create an OAuth client in your Coupa instance and obtain your credentials.

Creating an OAuth client in Coupa:

- Log in to your Coupa instance as an administrator with integration permissions.

- Navigate to Setup > Oauth2/OpenID Connect Clients. (You can type "oauth" in the search box to find it quickly.)

- Click Create.

- From the Grant Type dropdown, select Client credentials.

- Fill in the required fields:

- Name: Give your client a descriptive name (e.g., "Parabola Integration")

- Login: Your admin username

- Contact First Name, Contact Last Name, and Contact Email: Your contact information

- Select the Scopes you need for your integration:

core.accounting.read– Required to access invoicescore.purchase_order.read– Required to access purchase orders- You can add additional scopes based on your specific needs

- Click Save.

- After saving, you'll see your Client ID (Identifier) and Client Secret. Copy these credentials to a secure location—you'll need them to connect in Parabola.

Connecting in Parabola:

- In your Parabola flow, add a Pull from Coupa step.

- Click Authorize and you'll be prompted to enter:

- Client ID: Your Coupa OAuth client identifier

- Client Secret: Your Coupa OAuth client secret

- Instance: Your Coupa subdomain (e.g., if your Coupa URL is

https://acme.coupahost.com, enteracme) - Scopes: The scopes you selected when creating the client (e.g.,

core.accounting.read core.purchase_order.read). Each scope must be separated by a space.

- Click Connect. Parabola will securely store your credentials and automatically handle token generation and refresh.

- Once authenticated, select the data you want to pull and configure any filters.

Available data

Using the Coupa integration in Parabola, you can pull:

- Invoices: Invoice records including invoice numbers, status (draft, pending approval, approved, paid, voided, disputed, etc.), creation and update timestamps, totals, and file URLs for invoice PDFs. Filter by date ranges, status, or account type.

- Invoice details by ID: Retrieve full details for a specific invoice using its unique identifier.

- Purchase orders: Purchase order records including PO numbers, status (draft, pending approval, approved, issued, closed, canceled, etc.), creation and update timestamps, order totals, and export status. Filter by date ranges, status, export status, or account type.

All data can be filtered by creation date, update date, status, and account type. The integration supports pagination to handle large datasets efficiently.

Common use cases

- Three-way match reconciliation: Pull invoices and purchase orders from Coupa, combine with receiving data from your warehouse or ERP, and automatically flag discrepancies in quantities, pricing, or missing documents.

- Spend analysis and reporting: Aggregate invoice data by supplier, category, or time period to create automated dashboards showing spending trends, budget utilization, and savings opportunities.

- Invoice processing automation: Monitor pending and approved invoices, combine with payment data from your accounting system, and track time-to-payment or identify invoices stuck in approval workflows.

- Purchase order tracking: Track PO status from creation through closure, identify open POs aging beyond expected timelines, and alert procurement teams about delayed orders or missing receipts.

- Supplier performance monitoring: Combine invoice and PO data to calculate on-time delivery rates, analyze invoice accuracy, and identify suppliers with frequent disputes or billing issues.

- Month-end close acceleration: Automate the extraction and reconciliation of invoices and purchase orders against your general ledger or ERP system to reduce manual effort during financial close periods.

Tips for using Parabola with Coupa

- Schedule your flows to run daily or hourly during critical periods like month-end close to keep financial data current and reduce last-minute reconciliation work.

- Use date filters strategically: Filter by

created-atorupdated-atdates to pull only recent records, reducing API load and speeding up your flows. For ongoing reconciliations, pull data from the last 30-90 days. - Ask for just the fields you need: Use the fields setting to pull in the minimal fields that the Flow needs to operate. The less fields you request, the faster the data will be returned by Coupa to Parabola. Large amounts of data can take a long time to pull without reducing the fields.

- Combine with other systems: Join Coupa invoices and purchase orders with data from your ERP (NetSuite, SAP, etc.), warehouse management system, or procurement tools to create comprehensive operational views and catch cross-system discrepancies.

- Add validation checks: Use Parabola's Filter and Formula steps to flag exceptions like invoices without matching purchase orders, POs in "issued" status for over 60 days, or invoices with disputed status.

- Set up alerts: Configure Email or Slack notifications in Parabola to alert finance and procurement teams when specific conditions are met, such as high-value invoices pending approval or POs exceeding budget thresholds.