Connecting to Apache Hadoop via API lets organizations automate their distributed data processing on the leading open-source big data framework. The connection streamlines large-scale data operations while preserving flexibility and scalability, and it supports complex distributed computing and storage operations.

How do I connect via API?

- Connect to the Hadoop API through Parabola by navigating to the API page and selecting Apache Hadoop

- Authenticate using your Hadoop credentials and configure necessary security settings

- Select the data endpoints you want to access (HDFS, MapReduce, YARN resources)

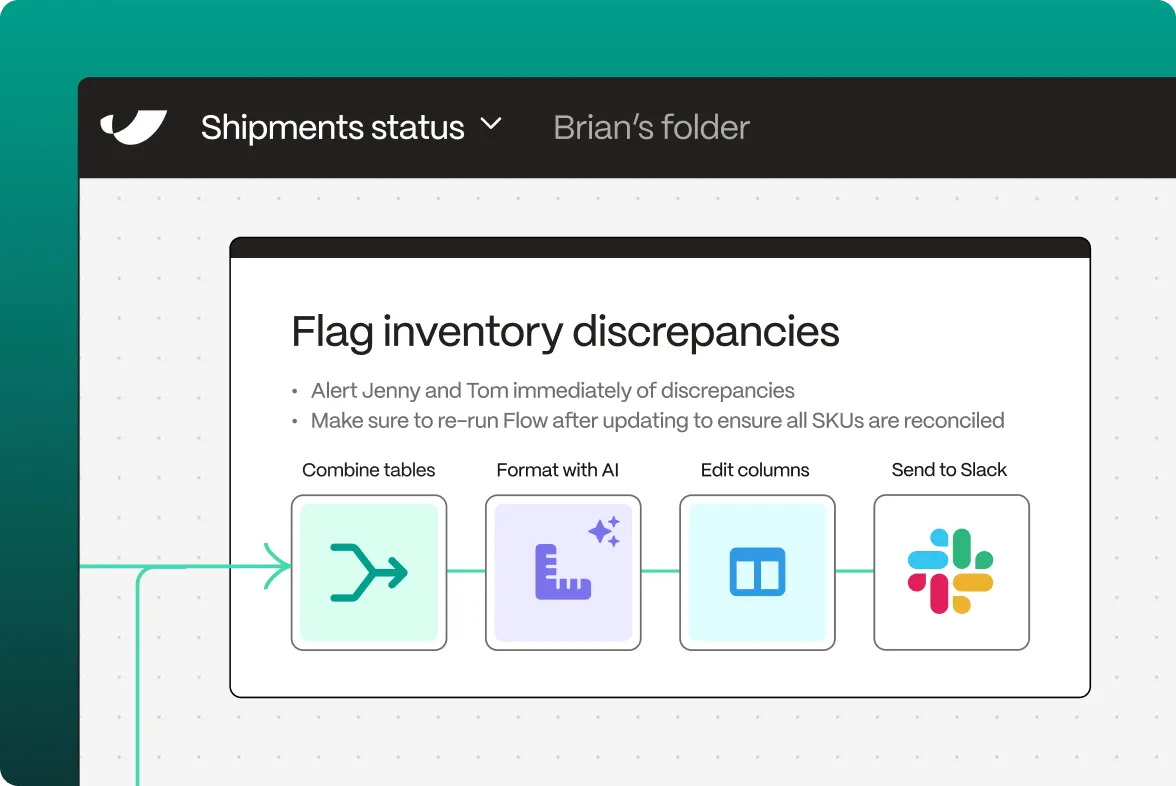

- Configure your flow in Parabola by adding transformation steps to process your data

- Set up automated triggers for distributed processing jobs

What is Apache Hadoop?

Apache Hadoop is an open-source framework designed for distributed storage and processing of large data sets across clusters of computers. As the foundation of many modern big data architectures, Hadoop enables organizations to handle massive amounts of structured and unstructured data using commodity hardware, making large-scale data processing accessible and cost-effective.

What does Apache Hadoop do?

Apache Hadoop provides a comprehensive ecosystem for distributed data storage and processing, enabling organizations to manage and analyze data at scale. Through its API, businesses can automate complex distributed computing operations while maintaining fault tolerance and data reliability. The platform excels in processing large datasets, supporting everything from batch processing to complex analytical workflows across distributed environments.

The API enables programmatic access to Hadoop's core components: HDFS (Hadoop Distributed File System), MapReduce, and YARN (Yet Another Resource Negotiator). Hadoop exposes these over HTTP through REST interfaces such as WebHDFS and the YARN ResourceManager REST API. Organizations can use this access to build automated data processing pipelines, manage distributed storage operations, and coordinate resource allocation across large clusters.
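As a rough illustration of how these components map onto HTTP endpoints, the sketch below builds request URLs for each one. It assumes an unsecured cluster running with the default Hadoop 3.x HTTP ports; the host names and the `rest_url` helper are hypothetical, and a real cluster may use different ports, HTTPS, or Kerberos.

```python
from urllib.parse import quote, urlencode

# Assumed defaults for Hadoop 3.x; a cluster's configuration may override them.
REST_SERVICES = {
    "hdfs": (9870, "/webhdfs/v1"),           # NameNode (WebHDFS)
    "yarn": (8088, "/ws/v1/cluster"),        # ResourceManager
    "mapreduce": (19888, "/ws/v1/history"),  # MapReduce JobHistory Server
}


def rest_url(service, host, resource="", **params):
    """Build a REST URL for one Hadoop component (hypothetical helper)."""
    port, prefix = REST_SERVICES[service]
    path = quote("/" + resource.strip("/")) if resource else ""
    query = "?" + urlencode(params) if params else ""
    return f"http://{host}:{port}{prefix}{path}{query}"


# List a directory in HDFS, read cluster metrics from YARN,
# and list finished MapReduce jobs from the history server.
print(rest_url("hdfs", "namenode.example.com", "data/raw", op="LISTSTATUS"))
print(rest_url("yarn", "rm.example.com", "metrics"))
print(rest_url("mapreduce", "jhs.example.com", "mapreduce/jobs"))
```

Each service usually runs on a different host, which is why the helper takes the host as an argument instead of storing one base URL.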

What can I do with the API connection?

Distributed Processing Automation

By connecting to Hadoop via API, data teams can automate complex distributed processing workflows. The API enables scheduled execution of MapReduce jobs, automated data partitioning, and coordination across cluster nodes. This automation keeps cluster resources in use efficiently while reducing operational overhead.

Storage Management

Organizations can leverage the API to automate their HDFS operations. The system can manage data replication, handle file operations across the distributed environment, and maintain data locality optimizations. This automation helps ensure data availability and reliability while optimizing storage utilization.
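One way replication management could look in practice is sketched below, assuming WebHDFS with simple (`user.name`) authentication. It reads a `LISTSTATUS` response, finds files whose configured replication factor is below a target, and builds the `SETREPLICATION` request URL for each. The sample response, paths, host, and user are made up for illustration, and the `replication` field is the configured factor, not the number of live replicas.

```python
import json
from urllib.parse import urlencode


def set_replication_url(namenode, path, replication, user):
    """Build the WebHDFS URL (to be sent with HTTP PUT) that sets a file's replication."""
    query = urlencode(
        {"op": "SETREPLICATION", "replication": replication, "user.name": user}
    )
    return f"http://{namenode}/webhdfs/v1{path}?{query}"


def below_target_replication(liststatus_json, directory, target=3):
    """Return paths of files whose configured replication is below `target`."""
    statuses = json.loads(liststatus_json)["FileStatuses"]["FileStatus"]
    return [
        f"{directory.rstrip('/')}/{s['pathSuffix']}"
        for s in statuses
        if s["type"] == "FILE" and s["replication"] < target
    ]


# Hypothetical, trimmed LISTSTATUS response for /data/raw.
SAMPLE = '''{"FileStatuses": {"FileStatus": [
  {"pathSuffix": "orders.csv", "type": "FILE", "replication": 2, "length": 1024},
  {"pathSuffix": "events.csv", "type": "FILE", "replication": 3, "length": 2048},
  {"pathSuffix": "archive", "type": "DIRECTORY", "replication": 0, "length": 0}
]}}'''

for path in below_target_replication(SAMPLE, "/data/raw"):
    print(set_replication_url("namenode.example.com:9870", path, 3, "etl"))
```

The sketch only builds URLs so it can run without a cluster; paths containing spaces or special characters would also need percent-encoding before being sent.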

Resource Optimization

Operations teams can automate cluster resource management through the API connection. The system can monitor resource utilization, adjust job scheduling parameters, and optimize workload distribution across the cluster. This automation helps maintain optimal performance while ensuring fair resource allocation.
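A minimal sketch of resource monitoring follows, assuming the JSON shape returned by the YARN ResourceManager's cluster metrics endpoint (`/ws/v1/cluster/metrics`). The sample values and the thresholds are illustrative assumptions, not recommended settings.

```python
import json


def memory_utilization(metrics_json):
    """Fraction of cluster memory currently allocated to containers."""
    m = json.loads(metrics_json)["clusterMetrics"]
    return m["allocatedMB"] / m["totalMB"] if m["totalMB"] else 0.0


def needs_attention(metrics_json, max_utilization=0.9, max_pending=10):
    """Flag the cluster when memory is nearly full or too many apps are queued."""
    m = json.loads(metrics_json)["clusterMetrics"]
    return (
        memory_utilization(metrics_json) > max_utilization
        or m["appsPending"] > max_pending
    )


# Hypothetical, trimmed response from /ws/v1/cluster/metrics.
SAMPLE_METRICS = '''{"clusterMetrics": {
  "appsPending": 2, "appsRunning": 5,
  "allocatedMB": 7680, "totalMB": 8192, "activeNodes": 4
}}'''

print(f"memory utilization: {memory_utilization(SAMPLE_METRICS):.1%}")
print("needs attention:", needs_attention(SAMPLE_METRICS))
```

A scheduled flow could run a check like this and only take action, such as delaying a batch job, when the flag is raised.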

Data Pipeline Integration

Data engineers can automate their data processing pipelines by connecting various data sources and processing steps through the API. The system can coordinate complex workflows, manage dependencies between jobs, and handle error recovery automatically. This integration streamlines data processing while ensuring reliability and scalability.
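The idea of managing job dependencies with automatic error recovery can be sketched as follows. This is a generic illustration using Python's standard library, not how Hadoop or Parabola implements it; the job names and the `run_job` callback are hypothetical stand-ins for whatever actually submits a job.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each job maps to the set of jobs it depends on.
DEPENDENCIES = {
    "clean": {"ingest"},
    "aggregate": {"clean"},
    "report": {"aggregate"},
}


def run_pipeline(dependencies, run_job, max_attempts=3):
    """Run jobs in dependency order, retrying each failed job up to max_attempts."""
    completed = []
    for job in TopologicalSorter(dependencies).static_order():
        for attempt in range(1, max_attempts + 1):
            try:
                run_job(job)
                completed.append(job)
                break
            except RuntimeError:
                # Give up and surface the error once the retry budget is spent.
                if attempt == max_attempts:
                    raise
    return completed
```

Calling `run_pipeline(DEPENDENCIES, submit)` with a `submit` function that raises `RuntimeError` on failure runs `ingest`, `clean`, `aggregate`, and `report` in that order, and stops the pipeline if any job still fails after three attempts.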

Monitoring and Maintenance

System administrators can automate their cluster monitoring and maintenance tasks through the API. The system can track cluster health, manage node maintenance windows, and coordinate upgrade processes. This automation reduces administrative overhead while maintaining cluster stability and performance.
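As a small example of health tracking, the sketch below picks out nodes that are not in the `RUNNING` state, assuming the JSON shape returned by the YARN ResourceManager's nodes endpoint (`/ws/v1/cluster/nodes`). The host names and the sample response are invented for illustration.

```python
import json


def nodes_needing_attention(nodes_json):
    """Map host name to state for every node that is not RUNNING."""
    nodes = json.loads(nodes_json)["nodes"]["node"]
    return {n["nodeHostName"]: n["state"] for n in nodes if n["state"] != "RUNNING"}


# Hypothetical, trimmed response from /ws/v1/cluster/nodes.
SAMPLE_NODES = '''{"nodes": {"node": [
  {"nodeHostName": "worker-1.example.com", "state": "RUNNING", "healthReport": ""},
  {"nodeHostName": "worker-2.example.com", "state": "UNHEALTHY", "healthReport": "disk check failed"},
  {"nodeHostName": "worker-3.example.com", "state": "LOST", "healthReport": ""}
]}}'''

for host, state in nodes_needing_attention(SAMPLE_NODES).items():
    print(f"{host}: {state}")
```

The resulting list could feed an alert or a maintenance ticket instead of requiring someone to check the cluster UI by hand.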

Through this API connection, organizations can build distributed computing workflows on top of Hadoop while eliminating manual operations and reducing management complexity. The integration covers distributed processing, automated resource management, and connections to the wider Hadoop ecosystem, so teams can focus on deriving value from their data rather than managing infrastructure.