Workday's API gives you direct access to your human resources, financial, and employee data. By pulling Workday data into Parabola, you can create smarter, more efficient processes for managing your workforce and financial operations.

How to pull data from Workday into Parabola via API

- Connect your Workday account to Parabola through the API page

- Authenticate using your credentials and configure API permissions

- Select desired data streams (employee data, payroll information, financial records, etc.)

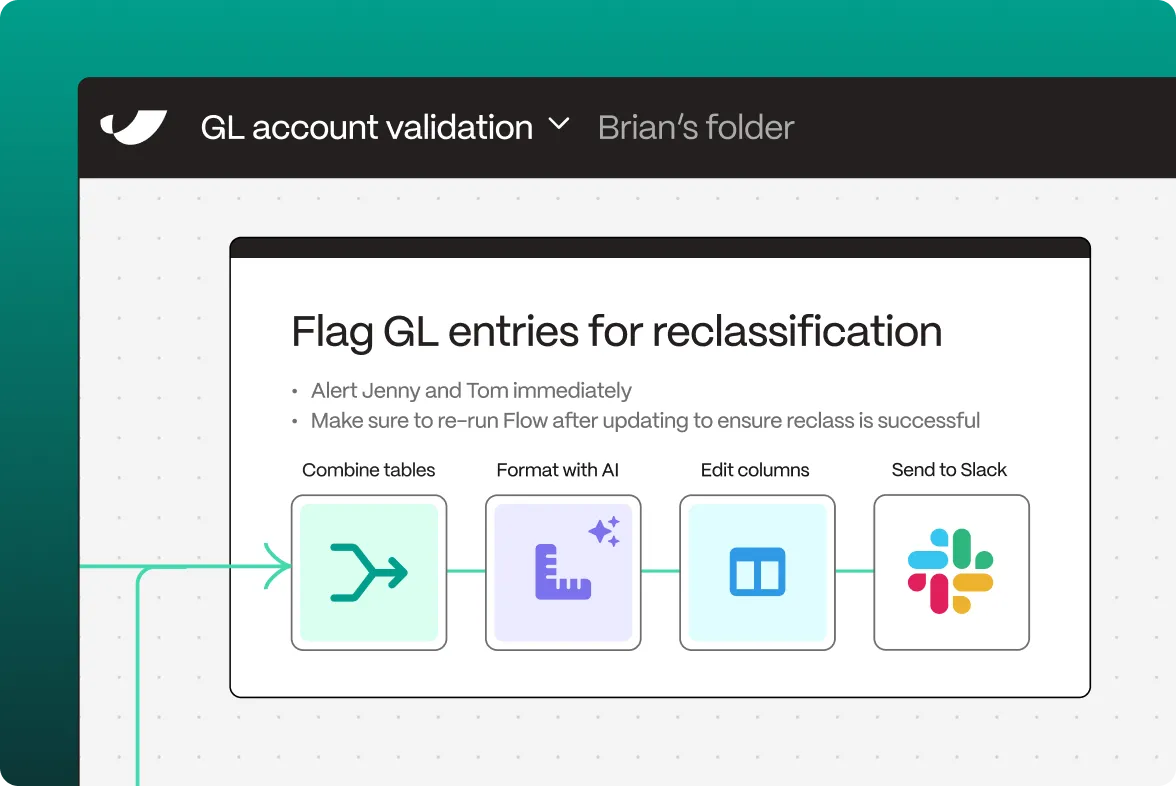

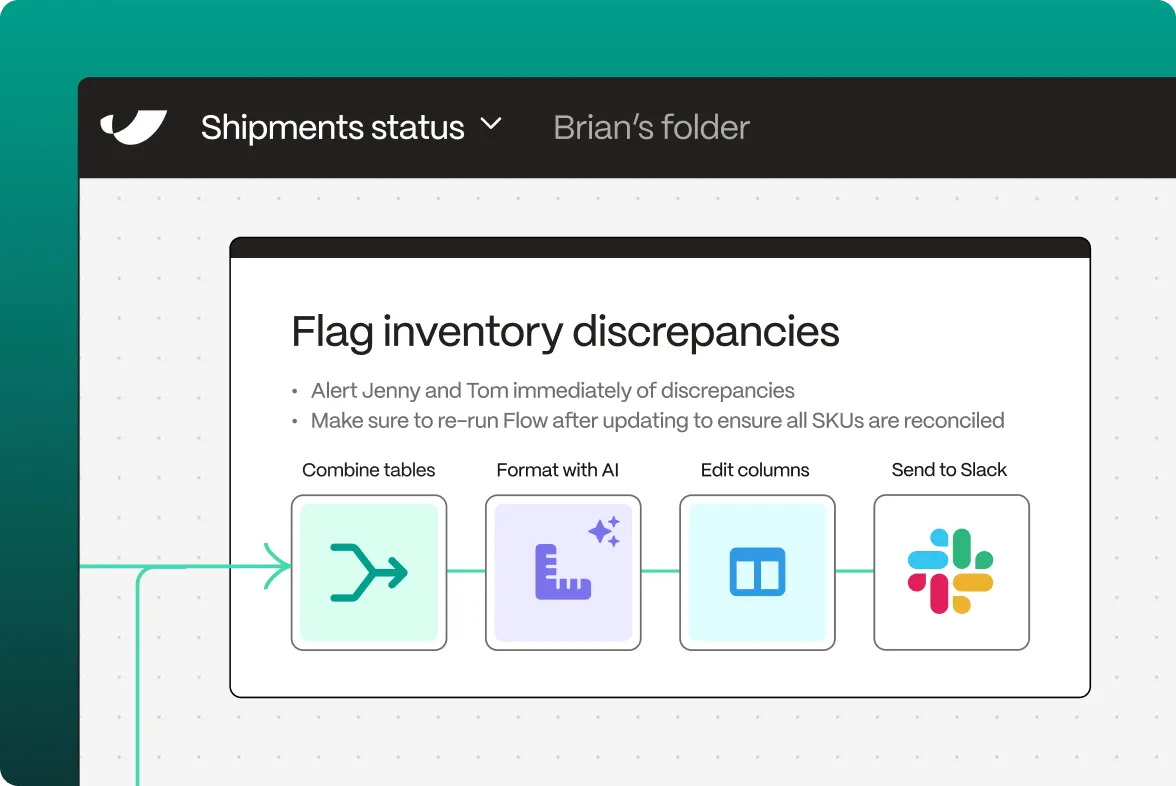

- Configure your flow in Parabola by adding transformation steps

- Set up a schedule or automated trigger so the flow runs and processes new data without manual work
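Parabola handles these steps without code, but it can help to see what an authenticated, paginated API pull looks like underneath. The following is a minimal sketch, not Workday's documented API: the endpoint URL, the `offset` and `limit` query parameters, the `data` and `total` response fields, and the bearer-token header are all assumptions for illustration. Check your tenant's API documentation for the real values.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Dict, List


def build_request(base_url: str, token: str, offset: int, limit: int) -> urllib.request.Request:
    """Build an authenticated GET request for one page of records.

    The `offset`/`limit` parameter names are assumed, not confirmed.
    """
    query = urllib.parse.urlencode({"offset": offset, "limit": limit})
    return urllib.request.Request(
        f"{base_url}?{query}",
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
    )


def fetch_page(base_url: str, token: str, offset: int, limit: int) -> Dict:
    """Send the request and decode the JSON body of a single page."""
    request = build_request(base_url, token, offset, limit)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def fetch_all(get_page: Callable[[int, int], Dict], limit: int = 100) -> List[Dict]:
    """Collect every record by requesting pages until the source is exhausted.

    `get_page(offset, limit)` must return a dict with a `data` list and,
    optionally, a `total` count (both field names are assumptions).
    """
    records: List[Dict] = []
    offset = 0
    while True:
        page = get_page(offset, limit)
        rows = page.get("data", [])
        records.extend(rows)
        offset += len(rows)
        total = page.get("total")
        # Stop on an empty page, a short page, or once the reported total is reached.
        if not rows or len(rows) < limit or (total is not None and offset >= total):
            break
    return records
```

To use it against a live endpoint, you would pass something like `lambda offset, limit: fetch_page(url, token, offset, limit)` as `get_page`, where `url` and `token` are placeholders for your own values. Keeping the paging loop separate from the network call also makes it easy to test with canned pages.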

What can I do after connecting Parabola to Workday?

Human Resources Management

Access comprehensive employee and organizational data to streamline your HR processes. The API enables you to pull detailed information about your workforce, helping you make informed decisions about staffing, compensation, and organizational structure.

Essential HR data includes:

• Employee profiles and demographics

• Position and compensation details

• Time tracking and attendance

• Organizational hierarchies

Financial Operations

Monitor and analyze your financial data with direct access to Workday's robust financial management system. Track expenses, monitor budgets, and maintain accurate financial records across your organization.

Key financial data available:

• Budget tracking and allocation

• Expense management data

• Payment processing status

• Revenue recognition details

Workforce Analytics

Transform your HR and financial data into meaningful insights about your organization. Analyze trends in workforce composition, compensation, and organizational effectiveness to support strategic decision-making.

Monitor essential metrics like:

• Headcount and turnover rates

• Compensation patterns

• Department budget utilization

• Employee performance indicators
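As a rough illustration of how two of these metrics could be computed once the data is pulled, here is a minimal sketch. It assumes one common convention for turnover (separations divided by average headcount over the period) and hypothetical field names (`department`, `budget`, `spent`). Your organization's definitions and your Workday field names may differ.

```python
from typing import Dict, List


def turnover_rate(separations: int, start_headcount: int, end_headcount: int) -> float:
    """Turnover for a period: separations divided by average headcount."""
    average_headcount = (start_headcount + end_headcount) / 2
    if average_headcount == 0:
        return 0.0  # Avoid dividing by zero for an empty organization.
    return separations / average_headcount


def budget_utilization(rows: List[Dict]) -> Dict[str, float]:
    """Share of budget spent per department, summed across all rows.

    Each row is expected to carry `department`, `budget`, and `spent`
    (hypothetical field names chosen for this example).
    """
    totals: Dict[str, Dict[str, float]] = {}
    for row in rows:
        dept = totals.setdefault(row["department"], {"budget": 0.0, "spent": 0.0})
        dept["budget"] += row["budget"]
        dept["spent"] += row["spent"]
    return {
        name: (t["spent"] / t["budget"] if t["budget"] else 0.0)
        for name, t in totals.items()
    }
```

For example, 6 separations in a period that starts with 95 employees and ends with 105 gives an average headcount of 100 and a turnover rate of 6%.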

What is Parabola?

Parabola is a powerful no-code automation platform that helps businesses work smarter with their data. We make it easy to:

• Pull data from various sources, including APIs like Workday

• Transform and clean data automatically

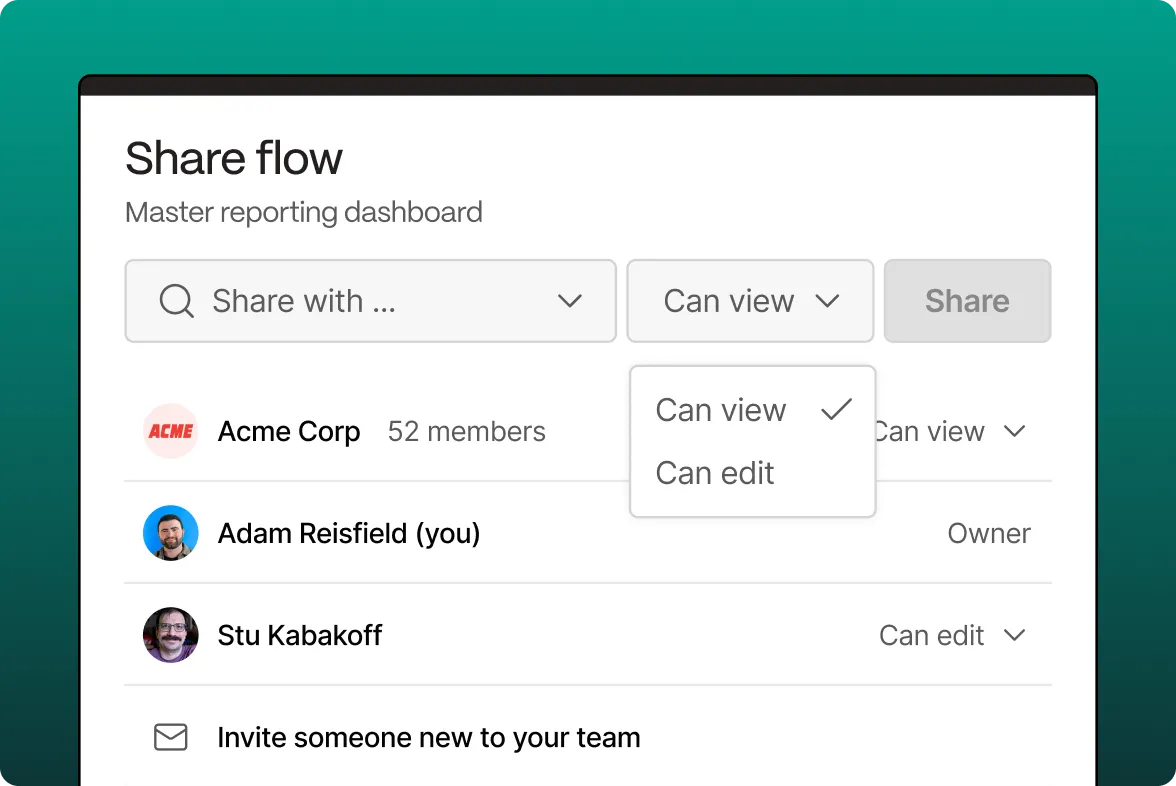

• Create custom workflows without coding

• Schedule automated data updates

• Build powerful business solutions

What is Workday?

Workday is a cloud-based enterprise resource planning (ERP) platform that specializes in human capital management and financial management. It's widely used by organizations that want to run their HR and financial operations in a single, integrated system.

What does Workday do?

Workday brings together essential business operations in one unified system. The platform helps organizations manage their workforce, handle financial transactions, and track business performance through a comprehensive suite of tools.

The platform focuses on key business areas:

• Human resource management

• Payroll processing

• Financial planning

• Talent management

These integrated capabilities help organizations maintain efficient operations while providing valuable insights for strategic decision-making.