Data without consistency is unusable. Most teams have dealt with the chaos of mismatched date formats, inconsistent SKUs, or customer names that don’t match across systems. Standardization is often handled by hand in spreadsheets, where operators clean one field only to find ten more inconsistencies. AI-assisted tools change this by applying consistent formatting rules across entire datasets instead of one cell at a time. Options like Parabola, Talend, and OpenRefine put these standardization capabilities within reach.

Turning chaos into clarity

Manual standardization depends on people spotting and fixing issues row by row, a fragile method that rarely holds up at scale. The result is broken dashboards, unreliable integrations, and wasted time chasing down mismatched values.

AI standardization engines detect inconsistencies as data arrives and apply the same rules to every record, aligning values across systems: currency values are normalized to a common format, units of measure are unified, and record IDs follow a single convention in every system. Instead of firefighting the same errors every reporting cycle, operators work with clean, trusted data pipelines that scale.
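To make "standardization" concrete, here is a minimal rule-based sketch in Python of the kind of normalization described above: dates converted to ISO 8601, SKUs forced into one casing and separator style, and weights converted to a single unit. It is not how Parabola, Talend, OpenRefine, or any AI model works internally. The field names (`order_date`, `sku`, `weight`, `unit`), the accepted date formats, and the conversion table are all hypothetical assumptions chosen for illustration.

```python
from datetime import datetime

# Hypothetical input date formats; a real pipeline would discover these from the data.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d %b %Y")

# Hypothetical conversion table: every weight is expressed in kilograms.
TO_KG = {"kg": 1.0, "g": 0.001, "lb": 0.45359237}


def standardize_date(value: str) -> str:
    """Return the date as ISO 8601 (YYYY-MM-DD), trying each known format in turn."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def standardize_sku(value: str) -> str:
    """Uppercase the SKU and join its parts with hyphens (spaces/underscores collapse)."""
    return "-".join(value.strip().upper().replace("_", " ").split())


def standardize_weight(amount: float, unit: str) -> float:
    """Convert a weight to kilograms, rounded to 3 decimal places."""
    return round(amount * TO_KG[unit.strip().lower()], 3)


def standardize_record(record: dict) -> dict:
    """Apply every field rule to one record (hypothetical field names)."""
    return {
        "order_date": standardize_date(record["order_date"]),
        "sku": standardize_sku(record["sku"]),
        "weight_kg": standardize_weight(record["weight"], record["unit"]),
    }


# Two rows describing the same product, written in different conventions.
rows = [
    {"order_date": "03/15/2024", "sku": "ab_123", "weight": 500, "unit": "g"},
    {"order_date": "15 Mar 2024", "sku": " AB 123", "weight": 1.1, "unit": "LB"},
]

if __name__ == "__main__":
    for row in rows:
        print(standardize_record(row))
```

Both rows come out with the same date (`2024-03-15`) and the same SKU (`AB-123`), with weights in kilograms. Hand-written rules like these only cover the variants someone anticipated; the appeal of the tools named above is in catching variants that no one listed in advance.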

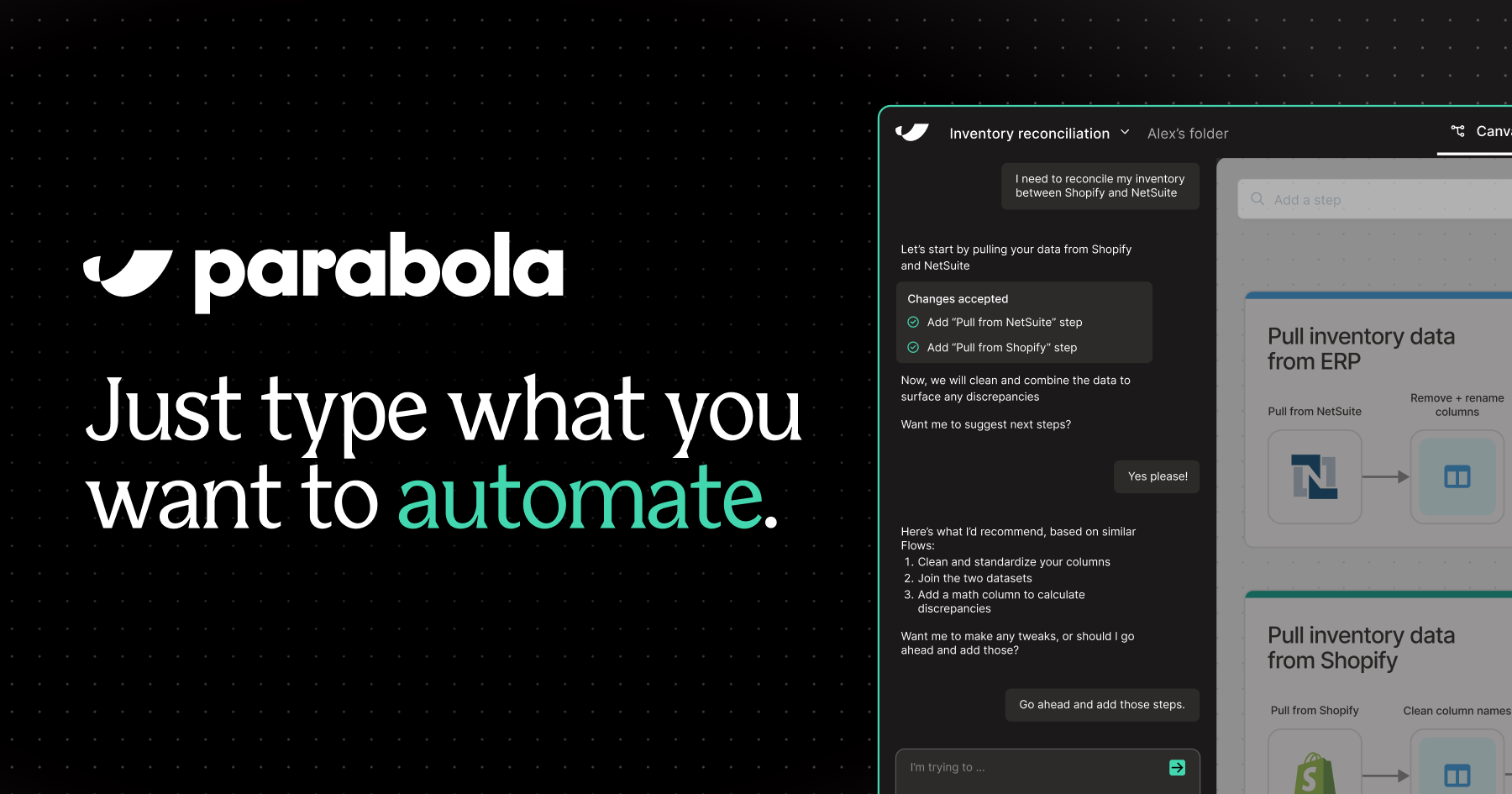

With Parabola, organizations avoid the recurring cost of manual cleanup and unlock reliable reporting, analytics, and automation.

Stop fixing the same errors every week. Standardize with AI using Parabola.